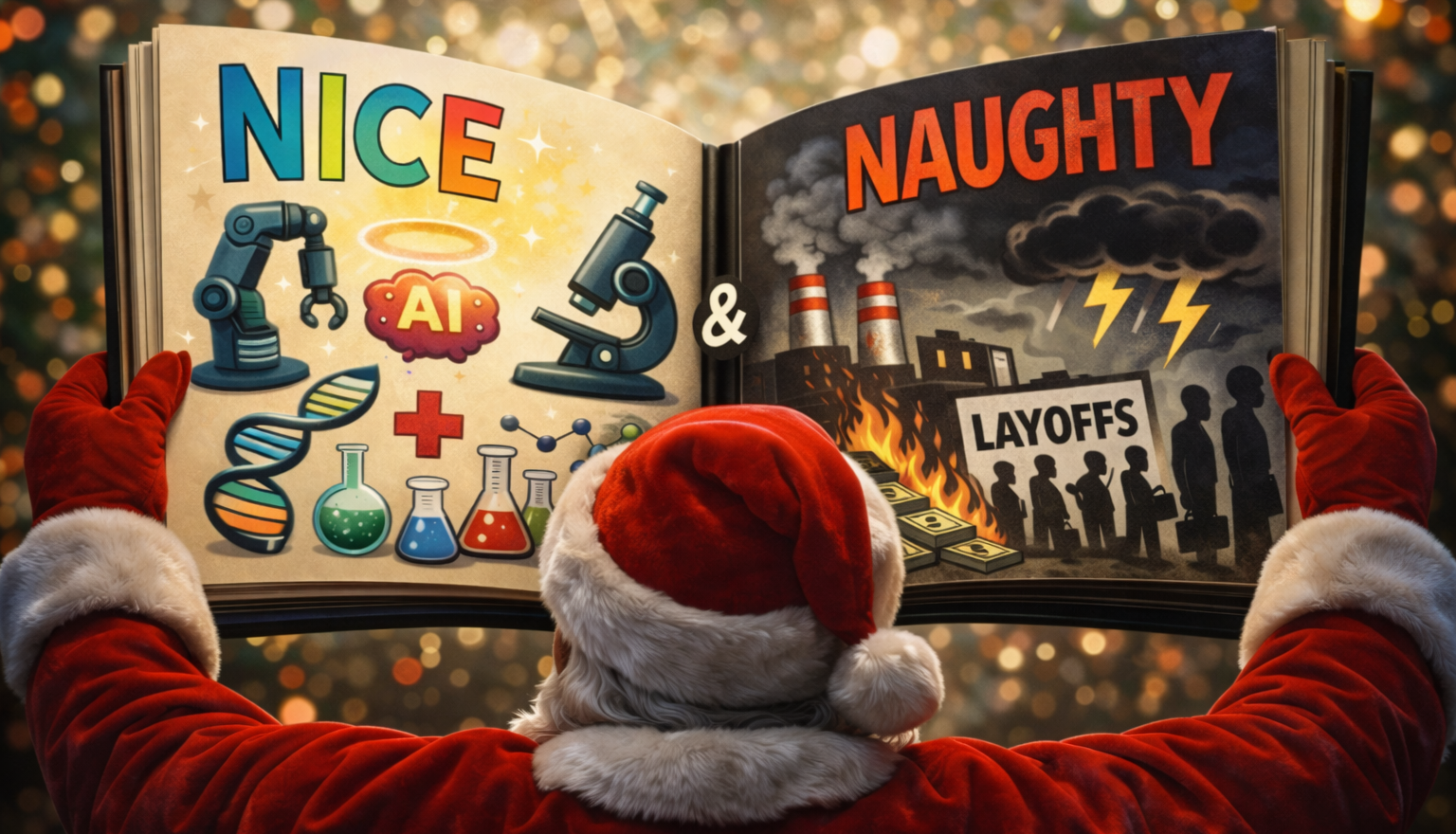

AI's Naughty and Nice List

Santa's been reviewing his AI industry ledger. He's confused.

Not because companies were naughty or nice. Because the same companies keep appearing on both lists. Sometimes in the same quarter.

That's the real story of 2025. Genuine scientific breakthroughs happened. AlphaFold 3 transformed protein interaction prediction, achieving a 50% improvement over existing methods. AlphaGenome is unlocking what "junk DNA" actually does, analysing up to a million DNA letters at once. Claude's hybrid reasoning model is genuinely thoughtful engineering. These are real achievements.

But sitting right next to them? A company valued at $500 billion that loses $3 for every $1 it earns. Job creation maths that doesn't survive contact with reality. Tech giants rolling back climate commitments because AI's power bill exceeded their sustainability budgets.

The contradictions are the story. Here are the four that defined the year.

The Business Model Contradiction

AI stocks account for 75% of S&P 500 returns since ChatGPT launched, according to JP Morgan. Nvidia hit a $5 trillion valuation, becoming the first company to reach that milestone. More than half of all global VC funding now flows into AI, a record $192.7 billion in 2025 alone.

Nvidia's business model is solid. They're selling picks and shovels in a gold rush. But the companies buying those picks? The LLM providers? Nobody has figured out how to make that work yet.

OpenAI in H1 2025: $4.3 billion in revenue, $13.5 billion in net losses. They're losing roughly three times what they earn. HSBC projects they won't be profitable until after 2030 and will need another $207 billion in funding even to get there. Only 5% of ChatGPT's 800 million users pay anything.
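If you want to check the "three times" claim yourself, here is a minimal sketch that uses only the figures quoted above. The variable names are mine, and the numbers are the reported ones, not anything independently verified.

```python
# Figures as quoted in this post for OpenAI in H1 2025 (USD billions).
revenue_bn = 4.3
net_loss_bn = 13.5

# Dollars lost for every dollar of revenue.
loss_per_dollar = net_loss_bn / revenue_bn

# Paying users: 5% of 800 million total users (in millions).
total_users_m = 800
paying_share = 0.05
paying_users_m = total_users_m * paying_share

print(f"Loss per $1 of revenue: ${loss_per_dollar:.2f}")
print(f"Paying users: about {paying_users_m:.0f} million")
```

So "roughly three times" is, if anything, slightly generous: the ratio comes out a little above three. And 5% of 800 million means about 40 million paying users carrying the other 760 million.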

The economics are circular. Nvidia sells chips to OpenAI. OpenAI pays Nvidia. Then the two announced a major new tie-up: Nvidia invests $100 billion in OpenAI, and OpenAI spends billions buying Nvidia's chips. The same money goes around in a loop.

Here's what I see on the ground. That 88% "enterprise AI engagement" stat gets thrown around a lot. In practice, most of it means "we bought some ChatGPT Enterprise licenses" or "we ran a few pilots." Real integration into production workflows? Much rarer. The gap between "we're using AI" and "AI is transforming our operations" is enormous.

Revenue growth subsidised by investor faith isn't a business model. It's a bet that unit economics will eventually work. That bet may pay off. But right now, we've collectively valued that bet at trillions.

The Jobs Contradiction

The industry loves this framing. According to the World Economic Forum:

- 97 million new jobs created by AI

- 85 million existing jobs displaced

- Net: +12 million jobs

Sounds reasonable. Until you look at the details.

77% of new AI jobs require master's degrees. The jobs being displaced are customer service, data entry, retail. Entry-level positions accessible to anyone with basic qualifications.

So we're automating away £28K/year jobs and replacing them with £95K+ positions requiring advanced degrees. The workers losing jobs have zero chance of accessing the "replacement" jobs. Different skills. Different education. Different geography.
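To make the mismatch concrete, here is a rough back-of-the-envelope sketch. It assumes the 77% master's-degree figure applies evenly across all 97 million new jobs, and that displaced workers can only move into roles without that requirement. Neither assumption comes from the WEF numbers themselves, so treat the output as illustrative only.

```python
# Headline figures quoted above (millions of jobs).
new_jobs_m = 97
displaced_jobs_m = 85
net_m = new_jobs_m - displaced_jobs_m

# ASSUMPTION: the 77% master's-degree share applies to all 97M new jobs.
masters_share = 0.77
new_jobs_without_masters_m = new_jobs_m * (1 - masters_share)

# ASSUMPTION: displaced workers can only take roles without that requirement.
shortfall_m = displaced_jobs_m - new_jobs_without_masters_m

print(f"Headline net: +{net_m} million jobs")
print(f"New jobs not needing a master's: {new_jobs_without_masters_m:.1f} million")
print(f"Displaced workers left without a matching job: {shortfall_m:.1f} million")
```

Under those assumptions, the headline "+12 million" turns into a gap of tens of millions of people for whom no accessible replacement job exists.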

That's not a transition. It's a bait and switch dressed up as progress.

And the timeline keeps accelerating. Originally major disruption was predicted for the 2030s. Then early 2030s. Now 2027-2028. 41% of employers plan to reduce their workforce in the next five years as AI automates certain tasks, according to the World Economic Forum's 2025 Future of Jobs Report. This isn't speculative anymore.

There's no coordinated plan to manage this. No retraining infrastructure at scale. No safety net redesign. Just confident predictions that the market will sort it out.

The market has never sorted out a transition this fast.

The Environment Contradiction

This one's almost funny. Almost.

AI applied to power, transport, and food systems could reduce global emissions by 3.2 to 5.4 billion tonnes annually by 2035, according to research from the Grantham Institute at LSE. Optimising supply chains. Improving grid efficiency. Accelerating materials science for batteries and carbon capture.

Instead, AI systems could produce 32 to 80 million tonnes of CO₂ in 2025, according to new research in Joule. The upper estimate is equivalent to New York City's annual emissions. AI's water footprint could reach 312.5 to 764.6 billion litres in 2025, matching the global bottled water industry.

Here's the punchline. Google's greenhouse gas emissions rose 48% since 2019, largely due to AI-driven data centre expansion. Microsoft's emissions grew 29% since 2020. Both companies have significantly scaled back their carbon neutrality commitments as AI demands continue escalating.

We built a technology that could help address climate change. We're currently using it to make climate change worse.

The rationalisations are impressive. "AI will eventually solve climate change, so the current emissions are worth it." That's like burning down a forest to fund reforestation research.

The Democratisation Contradiction

DeepSeek was supposed to be the good news story.

Trained for one-tenth the cost of Western models. Competitive performance. Open-source MIT licensing. Finally, AI that wasn't gatekept by a handful of trillion-dollar companies.

Then researchers at Wiz discovered a publicly accessible database exposing "a significant volume of chat history, backend data, log streams, API secrets, and operational details." Italy's data protection authority banned DeepSeek over privacy concerns. The Pentagon blocked access. NASA banned employees from using it. Taiwan cited national security risks.

The pattern is instructive. Democratisation sounds great until you examine who's doing the democratising and why.

That DeepSeek stores its data in China under Chinese law is documented. But here's the uncomfortable question: are Claude, GPT-4o, and Gemini also encoding values that nobody has stated? No provider is fully transparent about training data, decision-making processes, or value alignment.

We're building increasingly powerful systems. We're not building the transparency mechanisms to understand what values they carry.

What This Means for 2026

The real question for next year isn't "will AI advance?" That's guaranteed. The models will get better. The capabilities will expand. Someone will announce something that sounds transformative.

The question is whether the contradictions catch up first.

Can the economics sustain another year of trillion-dollar bets on companies that lose money on every transaction? Can the job transition promises survive contact with actual displaced workers? Can the environmental costs stay hidden in corporate sustainability reports?

2025 gave us genuine breakthroughs wrapped in genuine problems. Same companies. Same quarter. Sometimes same product.

Santa's verdict? The AI industry isn't naughty or nice. It's both. All the time. And until that changes, the ledger stays messy.

That's it for 2025. Thanks for reading, for subscribing, and for the conversations that make this worth writing. Whether you're taking a proper break or sneaking in some side project time between Christmas dinner and New Year's Eve, I hope you get some rest. See you in January with fresh contradictions to untangle.

Happy Christmas. 🎄
