Cargo Cult Vibe Coding

In the age of AI code generation, we risk trading craftsmanship for ritual

It's the 1940s in the South Pacific. During World War II, military forces built airstrips on remote islands in Melanesia. Planes would land, and suddenly these isolated communities saw an influx of goods. Canned food, tools, medicine, clothing. Then the war ended and the planes stopped coming.

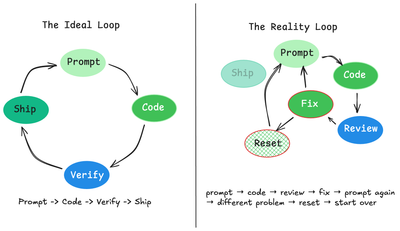

The islanders had observed everything. So they built their own runways out of bamboo. They carved headphones from wood and wore them like the radio operators. They waved landing signals with sticks. They performed all the rituals they'd witnessed.

The planes never came.

They had copied the form but missed the substance. The appearance without the understanding. The ritual without the reason.

From Islands to IDEs

In modern software development, you see the same pattern. Developers copying and pasting code from Stack Overflow without understanding what it does. Teams enforcing SOLID principles in a 200-line script that doesn't need them. Companies implementing microservices because Netflix does it, even though they're solving completely different problems at a completely different scale.
