AI Agent Use Case Evaluation: From Risk Assessment to Implementation

When I first started evaluating Agentforce implementations, I made the classic engineer's mistake: jumping straight into technical capabilities and features. It took several failed proofs-of-concept and a particularly memorable conversation with a frustrated CTO to realise I was approaching it all wrong.

"Anyone can build a cool demo with foundation models," she told me bluntly. "I need to know if this will keep us in business in five years."

That conversation triggered a fundamental shift in my thinking. I started mapping out the real questions we should be asking before even touching the technology. The framework below emerged from countless whiteboard sessions, failed attempts, and successful implementations across UK and Ireland (UKI) customers and beyond.

Risk Assessment: The Foundation of Everything

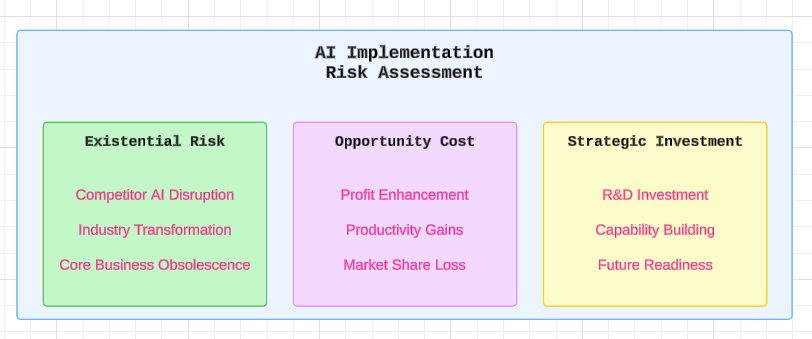

The first and most crucial step is understanding where your use case falls on the risk spectrum. I've developed this risk hierarchy based on both a 2023 Gartner study and OpenAI's "GPTs are GPTs" research:

Level 1: Existential Risk (High Priority)

When evaluating a use case, I first ask: "Could AI make this business obsolete?" If yes, this automatically becomes a high-priority implementation.

Example Cases:

Document processing firms facing AI disruption

Financial analysis services

Traditional insurance claims processing

Creative agencies doing copywriting and design

A London-based document processing firm I spoke with was losing 30% of its business to AI-powered competitors. They stated emphatically that it was no longer just about efficiency; it was about survival.

Level 2: Opportunity Cost (Medium Priority)

If the business isn't at existential risk, I evaluate the opportunity cost of not implementing AI.

Key Questions I Ask:

Can AI significantly reduce operational costs?

Will it provide competitive advantages in customer acquisition, user retention, service delivery, or internal operations?

Level 3: Strategic Investment (Lower Priority)

For cases where neither existential risk nor immediate opportunity cost is clear, I evaluate the strategic value.

Considerations:

R&D potential

Future market positioning

Capability building

Technological readiness
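The three levels above read naturally as a short decision cascade. Here is a minimal sketch in Python, assuming each use case can be reduced to a few yes/no answers. The `UseCase` fields and the `classify` function are hypothetical names for illustration, not part of Agentforce or any Salesforce API.

```python
from dataclasses import dataclass
from enum import Enum


class RiskLevel(Enum):
    EXISTENTIAL = "Level 1: Existential Risk (High Priority)"
    OPPORTUNITY_COST = "Level 2: Opportunity Cost (Medium Priority)"
    STRATEGIC = "Level 3: Strategic Investment (Lower Priority)"


@dataclass
class UseCase:
    """Hypothetical record of the answers gathered during evaluation."""
    name: str
    could_make_business_obsolete: bool       # "Could AI make this business obsolete?"
    reduces_operational_costs: bool = False  # Level 2 question
    competitive_advantage_areas: tuple = ()  # e.g. ("User retention",)


def classify(use_case: UseCase) -> RiskLevel:
    """Walk the hierarchy top-down and return the first level that applies."""
    if use_case.could_make_business_obsolete:
        return RiskLevel.EXISTENTIAL
    if use_case.reduces_operational_costs or use_case.competitive_advantage_areas:
        return RiskLevel.OPPORTUNITY_COST
    # Neither existential risk nor a clear opportunity cost: strategic value only.
    return RiskLevel.STRATEGIC


doc_firm = UseCase("Document processing", could_make_business_obsolete=True)
print(classify(doc_firm).value)
```

The order of the checks matters: existential risk is tested first, so it takes precedence over any cost or competitive argument.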

Strategic Implementation Priorities

After identifying the risk level, I learned that successful Agentforce implementations depend on matching the implementation approach to the business urgency and capability requirements.

Here's how I map implementation priorities based on risk levels:

Existential Risk Response

Timeline: Immediate (3-6 months)

Focus: Core business process transformation

Resource Allocation:

Development Team: 70-80% of technical staff

Budget: Majority of AI development budget

Sprint Priority: Highest priority in development sprints

Example: Full claims processing automation for insurance firms

Success Metrics: Market share retention, process automation rate

Opportunity-Driven Implementation

Timeline: Medium-term (6-12 months)

Focus: Revenue and efficiency enhancement

Resource Allocation:

Development Team: 40-60% of technical staff

Budget: Moderate portion of AI development budget

Sprint Priority: Medium priority in development sprints

Example: Customer service automation and enhancement

Success Metrics: Cost reduction, customer satisfaction scores

Strategic Investment Approach

Timeline: Long-term (12-18 months)

Focus: Capability building and experimentation

Resource Allocation:

Development Team: 20-30% of technical staff

Budget: Exploratory budget allocation

Sprint Priority: Lower priority in development sprints

Example: R&D projects for future service offerings

Success Metrics: New capability development, market positioning

This prioritisation framework came from watching several clients struggle to do everything at once. By tiering implementation based on risk levels, we could focus resources where they mattered most while still maintaining progress on lower-priority initiatives.
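The three tiers can also be captured as a small lookup table, which makes it easy to sanity-check a plan against a real team size. This is a sketch only: the figures are the ones listed above, while `ImplementationPlan`, `PLANS`, and `staff_range` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImplementationPlan:
    timeline_months: tuple   # (min, max) months
    focus: str
    staff_share_pct: tuple   # (min, max) percent of technical staff
    success_metrics: tuple


# Values taken from the three tiers described in this section.
PLANS = {
    "existential": ImplementationPlan(
        (3, 6), "Core business process transformation", (70, 80),
        ("Market share retention", "Process automation rate"),
    ),
    "opportunity": ImplementationPlan(
        (6, 12), "Revenue and efficiency enhancement", (40, 60),
        ("Cost reduction", "Customer satisfaction scores"),
    ),
    "strategic": ImplementationPlan(
        (12, 18), "Capability building and experimentation", (20, 30),
        ("New capability development", "Market positioning"),
    ),
}


def staff_range(plan: ImplementationPlan, team_size: int) -> tuple:
    """Convert the percentage band into a headcount band, rounding down."""
    low_pct, high_pct = plan.staff_share_pct
    return (team_size * low_pct // 100, team_size * high_pct // 100)


low, high = staff_range(PLANS["existential"], team_size=10)
print(f"Existential response: {low} to {high} of 10 engineers")
```

Rounding down is an arbitrary choice made here; a real staffing plan would round according to how the team is actually organised.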

The approach above emerged from a critical realisation: successful Agentforce implementations aren't just about the agents—they're about the entire ecosystem.


Build vs. Buy: The Agentforce Advantage

Let me be blunt: the traditional build vs. buy dichotomy doesn't quite apply with Agentforce. After implementing dozens of solutions, I've developed strong opinions about this, and the main one is that the platform changes the equation. The question becomes when to build custom agents, when to use pre-built skills, and how to combine the two.

When to Build Custom with Agentforce

Existential Risk + Core Process: If AI threatens your core business and the process is central to your operations, you absolutely must build custom with Agentforce. I've seen financial services firms try to cut corners here with pre-built solutions, only to find themselves scrambling six months later when they needed deeper customisation.

High Technical Expertise + Complex Requirements: With strong technical teams and unique requirements, custom Agentforce development gives you the control and flexibility you need.

When to Use Pre-built Agentforce Skills

Standard Processes: For common business processes like customer support or lead qualification, Agentforce's pre-built skills are not just adequate; they're often superior to custom solutions. They're battle-tested and continuously improved.

Limited Technical Resources: Don't have a team of AI engineers? Start with pre-built skills. They're designed to be configurable without deep technical expertise.

The Hybrid Sweet Spot

Most organisations should aim for what I call the "Hybrid Sweet Spot":

Use pre-built skills for standard processes

Build custom agents for core differentiators

Leverage Agentforce's orchestration to connect them

Unlike traditional build vs. buy decisions, Agentforce's modular architecture means you're never locked into one approach. Start with pre-built skills, then gradually customise as you learn what matters most for your business.
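The build, pre-built, and hybrid guidance above can be sketched as a per-process rule plus a portfolio-level summary. This is an illustrative reading of the criteria, not an official Agentforce decision tool: `Process`, `recommend`, and `portfolio_approach` are hypothetical names, and defaulting to pre-built skills when no custom criterion applies is an assumption drawn from the "start with pre-built skills" advice.

```python
from dataclasses import dataclass


@dataclass
class Process:
    """Hypothetical description of one business process under evaluation."""
    name: str
    existential_risk: bool = False
    core_process: bool = False
    strong_technical_team: bool = False
    unique_requirements: bool = False


def recommend(process: Process) -> str:
    """Return "custom" or "pre-built" for a single process."""
    if process.existential_risk and process.core_process:
        return "custom"
    if process.strong_technical_team and process.unique_requirements:
        return "custom"
    # Assumption: standard processes and teams with limited technical
    # resources start with pre-built skills and customise later.
    return "pre-built"


def portfolio_approach(processes: list) -> str:
    """Summarise a set of processes as custom, pre-built, or hybrid."""
    if not processes:
        raise ValueError("at least one process is required")
    choices = {recommend(p) for p in processes}
    return "hybrid" if len(choices) > 1 else choices.pop()


portfolio = [
    Process("Claims processing", existential_risk=True, core_process=True),
    Process("Customer support"),
]
print(portfolio_approach(portfolio))
```

A portfolio that mixes a core differentiator with a standard process lands on the hybrid approach, which matches the pattern most organisations should expect.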

Lessons Learned

Don't Chase the Hype: After seeing several clients rush into AI implementations because of FOMO (Fear of Missing Out), I now start every evaluation with the question: "What happens if you don't do this?"

Start with Risk, Not Technology: The most successful implementations were those where we thoroughly understood the risk level before discussing technical solutions.

Build for Survival First: If AI poses an existential threat to your business, that must drive both the priority and approach of your implementation.

Looking Forward: The Evolution of Use Case Evaluation

As I continue to refine this framework, I'm increasingly convinced that the key to successful AI implementation isn't the technology itself; it's understanding the business context and risk landscape. As Kodak, Blockbuster, and BlackBerry learned too late, technological disruption can be an existential threat, and that threat should be the primary driver of implementation decisions.