From ML to AI Engineering: Transforming How We Build AI Applications

A not-so-subtle paradigm shift

As technology evolves, so do the roles of the engineers who shape it. Machine Learning (ML) engineering has long been a cornerstone of AI innovation. However, the rise of foundation models marks the beginning of a new paradigm: AI Engineering. The term is more than a buzzword: it reflects a fundamental change in how we build and deploy AI applications. The change is easiest to see in real-world scenarios that put ML engineering and AI engineering side by side.

Scenario 1: Building a Fraud Detection System

Imagine you're tasked with creating a fraud detection system for a financial institution. As an ML engineer, you would:

Gather Data: Collect labeled datasets of fraudulent and non-fraudulent transactions.

Train a Model: Use algorithms like decision trees or neural networks to classify transactions.

Deploy the Model: Integrate the trained model into the system, ensuring it performs efficiently in production.

Iterate: Continuously retrain the model with new data to improve accuracy.
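The train-then-retrain loop above can be sketched in a few lines. This is a minimal illustration, not a production approach: it assumes a single feature (the transaction amount), uses made-up labelled data, and fits a one-level decision tree (a "decision stump"). The names train_stump and predict are hypothetical.

```python
from typing import List, Tuple

# Hypothetical labelled example: (transaction amount, is_fraud)
Transaction = Tuple[float, bool]


def train_stump(data: List[Transaction]) -> float:
    """Pick the amount threshold that misclassifies the fewest examples.

    A transaction is predicted fraudulent when amount > threshold.
    """
    candidates = sorted({amount for amount, _ in data})
    best_threshold, best_errors = candidates[0], len(data) + 1
    for threshold in candidates:
        errors = sum((amount > threshold) != is_fraud for amount, is_fraud in data)
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold


def predict(threshold: float, amount: float) -> bool:
    """Classify a new transaction with the learned threshold."""
    return amount > threshold


# Made-up training data, for illustration only.
training_data = [(12.0, False), (40.0, False), (75.0, False), (900.0, True), (1500.0, True)]
threshold = train_stump(training_data)

# "Iterate" means calling train_stump again once new labelled data arrives.
training_data.append((300.0, True))
threshold_after_retraining = train_stump(training_data)
```

Even in this toy version, everything the model knows comes from the labelled data, which is why data collection is the first step in the ML engineering workflow.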

Now consider the same problem approached from an AI engineering perspective using a foundation model like GPT. Here, you'd:

Select a Pretrained Model: Choose a model already trained on vast datasets.

Adapt the Model: Use prompt engineering or fine-tuning to specialize the model for fraud detection tasks.

Integrate Context: Design prompts that include transaction details and user history for more accurate assessments.

Deploy and Evaluate: Focus on building user interfaces and evaluating open-ended outputs to ensure reliability and ethical behaviour.
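To make the "Integrate Context" step concrete, here is one way the prompt might be assembled. It is a sketch under assumptions: build_fraud_prompt, the field names, and the wording of the instructions are all hypothetical, and the call to an actual model is deliberately left out because it depends on the provider you use.

```python
from typing import Dict, List


def build_fraud_prompt(transaction: Dict[str, str], history: List[str]) -> str:
    """Combine transaction details and recent user history into one prompt."""
    details = "\n".join(f"{key}: {value}" for key, value in transaction.items())
    # Fall back to an explicit marker when there is no history to include.
    history_lines = "\n".join(f"- {item}" for item in history) or "- (no history available)"
    return (
        "You are reviewing a card transaction for possible fraud.\n"
        "Answer with one word, FRAUD or LEGITIMATE, followed by a one-sentence reason.\n\n"
        f"Transaction:\n{details}\n\n"
        f"Recent activity for this user:\n{history_lines}\n"
    )


# Made-up example values.
prompt = build_fraud_prompt(
    {"amount": "950.00 EUR", "merchant": "Online electronics store", "country": "BR"},
    ["Usually spends under 60 EUR per purchase", "All previous purchases made in DE"],
)
print(prompt)
```

No training happens here. The engineering effort goes into deciding which context the model sees and how its answer is constrained.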

The difference is striking: ML engineering focuses on model creation and training, while AI engineering prioritises adapting existing models and integrating contextual information.

Scenario 2: Developing a Conversational AI Chatbot

Let's say you're building a chatbot for customer support. In the ML engineering workflow, you might:

Gather Data: Compile a dataset of customer inquiries and responses.

Train a Model: Train a sequence-to-sequence model, such as a transformer, to generate responses.

Evaluate Performance: Compare model outputs against a predefined set of correct answers.

Deploy the Chatbot: Integrate it into a chat interface.
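The "Evaluate Performance" step in this workflow often boils down to comparing each output with a reference answer. A minimal sketch, assuming exact string matching after light normalisation (the function name and sample data are hypothetical):

```python
from typing import List


def exact_match_accuracy(predictions: List[str], references: List[str]) -> float:
    """Fraction of predictions equal to their reference, ignoring case and outer whitespace."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    matches = sum(
        pred.strip().lower() == ref.strip().lower()
        for pred, ref in zip(predictions, references)
    )
    return matches / len(references)


# Made-up model outputs and reference answers.
predictions = ["Reset it from Settings.", "yes", "Call support"]
references = ["reset it from settings.", "No", "Call support"]
accuracy = exact_match_accuracy(predictions, references)
```

This kind of metric only works when each question has one accepted answer, which is the assumption that breaks down once responses become open-ended.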

With AI engineering, the process looks different:

Select a Foundation Model: Start with a large language model like GPT-4.

Use Prompt Engineering: Design prompts that guide the model to generate accurate and helpful responses without retraining.

Handle Open-Ended Outputs: Develop evaluation metrics for responses that don't have a single "right" answer.

Create an Interface: Build the chatbot's user-facing platform and ensure seamless integration with tools like Slack or WhatsApp.
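For the "Handle Open-Ended Outputs" step, one simple option is a rubric: instead of comparing against a single correct answer, check each response against a few properties you care about. The sketch below is illustrative only. The rubric items, the function name check_response, and the word limit are assumptions, and real evaluation pipelines usually add human review or model-based grading on top.

```python
from typing import Dict, List


def check_response(
    response: str,
    required_terms: List[str],
    banned_terms: List[str],
    max_words: int = 120,
) -> Dict[str, bool]:
    """Score one chatbot response against a small pass/fail rubric."""
    text = response.lower()
    return {
        "mentions_required_terms": all(term.lower() in text for term in required_terms),
        "avoids_banned_terms": not any(term.lower() in text for term in banned_terms),
        "within_length_limit": len(response.split()) <= max_words,
    }


# Made-up response and rubric.
result = check_response(
    "You can request a refund from the Orders page within 30 days.",
    required_terms=["refund", "30 days"],
    banned_terms=["guarantee"],
)
passed = all(result.values())
```

Two differently worded answers can both pass this rubric, which is the point: the check targets properties of a good answer rather than one exact string.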

Here, AI engineering leverages the model's pre-trained capabilities, focusing on user experience and evaluation of flexible outputs.

Scenario 3: Scaling AI Applications

Suppose you're scaling an AI application to handle millions of users. An ML engineer would concentrate on:

Inference Optimisation: Reducing latency and cost by optimising model architectures and compute resources.

Model Retraining: Periodically retraining the model to accommodate new data.

Monitoring and Feedback: Setting up systems to monitor performance and gather user feedback.

For an AI engineer, scaling involves:

Optimising Foundation Models: Balancing quality, cost, and latency through fine-tuning or inference optimisation.

Contextual Adaptation: Implementing retrieval-augmented generation to provide the model with dynamic context at runtime.

Monitoring Open-Ended Outputs: Developing robust evaluation pipelines to ensure consistent and reliable performance.
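The "Contextual Adaptation" step can be illustrated with a toy version of retrieval-augmented generation. This sketch makes a big simplification: it ranks documents by keyword overlap with the query, whereas real systems typically use embedding-based or full-text search. The function names and sample documents are hypothetical, and the final model call is omitted.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return the top_k documents sharing the most tokens with the query."""
    query_tokens = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(query: str, documents: List[str], top_k: int = 2) -> str:
    """Insert the retrieved documents into the prompt as context at request time."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\n"
    )


# Made-up knowledge base.
knowledge_base = [
    "Refunds are issued within 5 business days.",
    "Our office is closed on public holidays.",
    "Password resets are done from the account settings page.",
]
prompt = build_rag_prompt("How long do refunds take?", knowledge_base, top_k=1)
```

The model itself is unchanged. Updating the knowledge base changes what the model can answer, without any retraining.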

AI engineering's focus on open-ended models introduces new complexities, particularly in designing effective evaluation and feedback mechanisms.

Key Takeaways

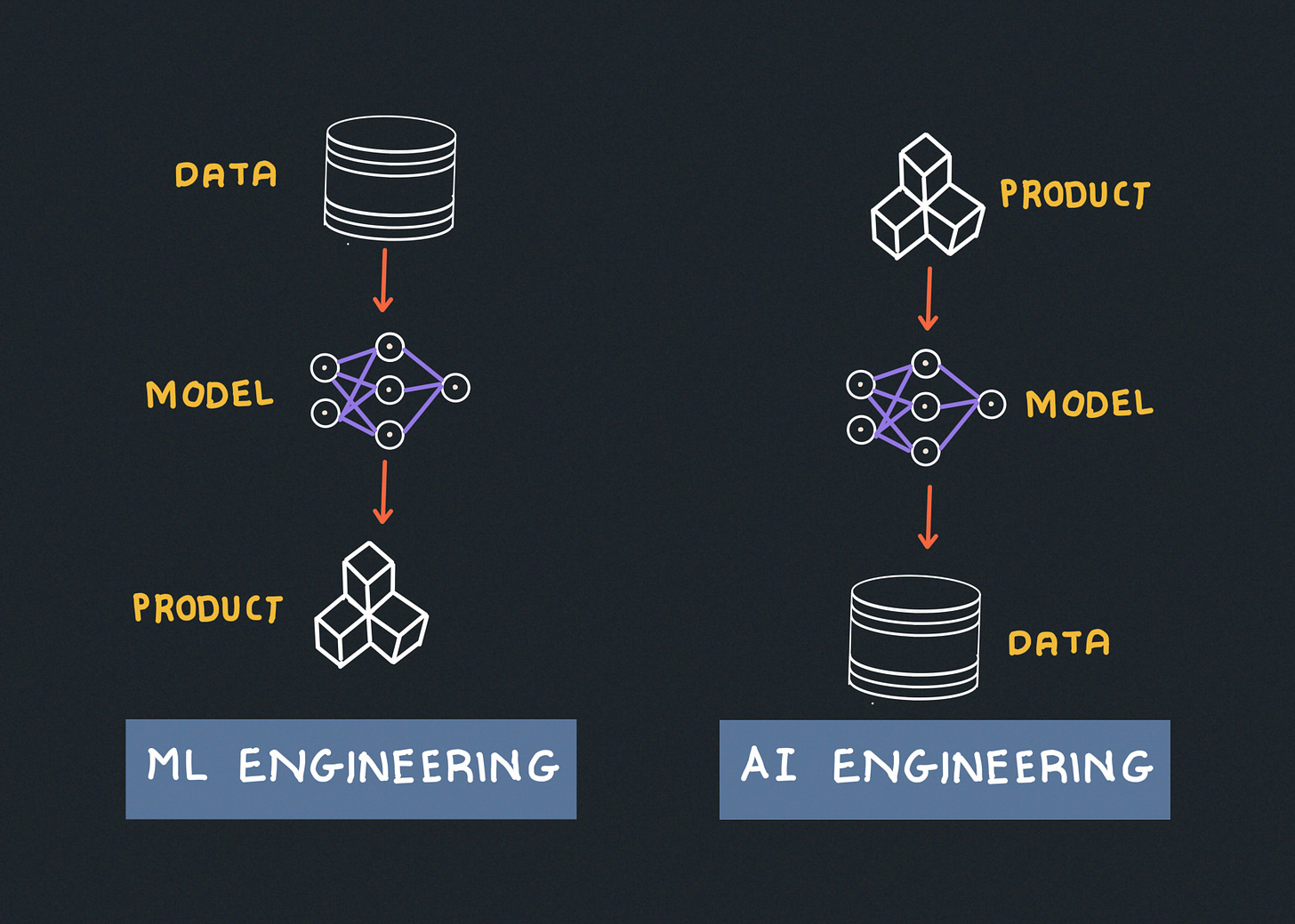

ML Engineering Path:

Data collection

Model development

Product development (last step)

AI Engineering Path:

Product development (first step)

Context and prompt design

Model adaptation when needed

This reversal of order is perhaps the biggest shift: AI engineers can start with the user experience and work backwards, rather than being constrained by data and model limitations up front.

Foundation Models Shift the Focus: AI engineering prioritises adapting and evaluating pre-trained models over creating new ones.

Open-Ended Outputs Require New Approaches: Unlike closed-ended ML tasks, AI engineering must tackle the challenges of evaluating flexible, creative outputs.

Product-First Philosophy: AI engineering often starts with building a product, while ML engineering begins with data and model development.

Looking Forward: The Art of AI Engineering

The skills that made great ML engineers haven't become irrelevant—they've transformed. Today's AI engineer is less like a model builder and more like a conductor, coordinating powerful but complex systems to create something meaningful.

The most successful AI engineers understand this paradox: having more powerful models doesn't simplify engineering—it shifts the challenges toward effective adaptation, integration, and evaluation. Instead of spending months gathering data and training models, they focus on the artistry of proper model adaptation, prompt design, and system integration.

As foundation models continue to evolve, this pattern will likely intensify. The future belongs to engineers who can balance the raw power of these models with the practical constraints of real-world applications.