RAG at Scale: What It Takes To Serve 10,000 Queries A Day

Most teams start with a simple RAG prototype. It feels elegant, almost magical. A vector database, a handful of chunks, a clean loop that retrieves and generates answers. It works beautifully for demos and internal tools. Then you ship it to real users and everything changes. Latency creeps up. Costs balloon. Hallucinations slip through. Cold starts make terrible first impressions.

None of this is a surprise. Naive RAG was never built for production traffic.

This post covers what naive RAG actually does, why it falls apart under load, what advanced RAG adds, and the engineering work that keeps a system stable at ten thousand queries a day.

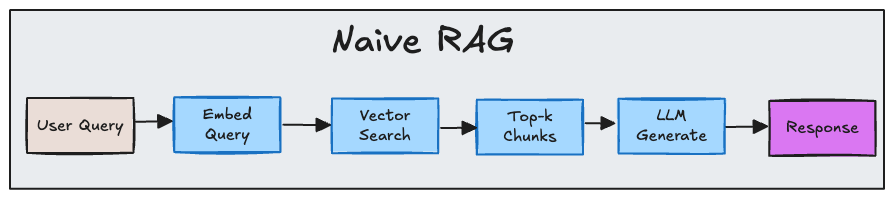

What naive RAG actually is

At its core, naive RAG is a four-step loop. Embed the query. Run similarity search. Take the top chunks. Send them directly to the model. It's straightforward, but brittle.
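To make the four steps concrete, here is a minimal sketch. The `embed` function is a toy bag-of-words stand-in for a real embedding model, and `CHUNKS`, `cosine`, and `naive_rag_prompt` are illustrative names, not part of any library. The final model call is left out; the function returns the prompt that would be sent.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def naive_rag_prompt(query: str, chunks: list[str], k: int = 2) -> str:
    query_vec = embed(query)                                      # 1. embed the query
    scored = [(cosine(query_vec, embed(c)), c) for c in chunks]   # 2. similarity search
    scored.sort(key=lambda pair: pair[0], reverse=True)
    top = [chunk for _, chunk in scored[:k]]                      # 3. take the top chunks
    context = "\n".join(f"- {chunk}" for chunk in top)
    return f"Context:\n{context}\n\nQuestion: {query}"            # 4. hand off to the model

CHUNKS = [
    "Refunds are processed within five business days.",
    "Our office is closed on public holidays.",
    "Refund requests need an order number.",
]
prompt = naive_rag_prompt("How long do refunds take?", CHUNKS)
print(prompt)
```

In this toy run only one chunk shares a word with the query, yet top-k still returns two, so the second slot is filled by a chunk with zero similarity. Nothing in the loop checks whether a retrieved chunk is actually relevant.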

It breaks because:

- Chunk boundaries are often poor, so the model sees fragments rather than full ideas

- Dense similarity alone retrieves irrelevant content

- Multi-hop questions fall apart because retrieval is shallow

- There are no metadata filters, no time awareness, and no permissions logic

- The model can't request additional retrieval when the initial pass is weak

Naive RAG is fine for demos or hackathons. It’s not fine for systems under load (10,000+ queries per day).

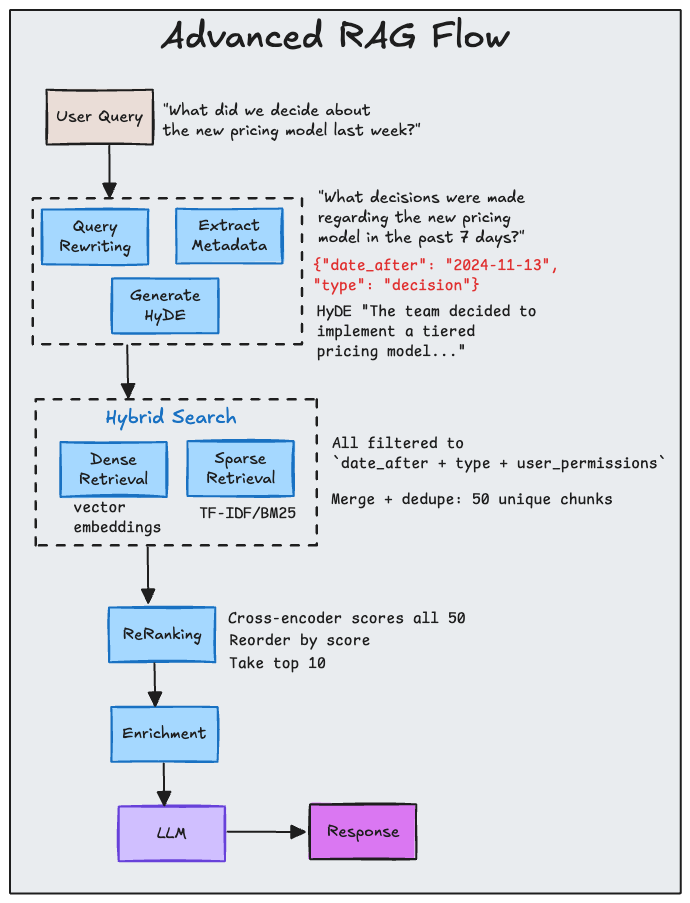

What advanced RAG adds

Advanced RAG strengthens retrieval before the model generates anything. It doesn't rely on a single vector search call. It layers techniques that compensate for the structural weaknesses in naive RAG.

Key upgrades:

Query rewriting clarifies or decomposes questions. When a user asks "What did the CEO say about it?", the system uses the conversation so far to rewrite the question as "What did the CEO say about Q3 earnings guidance?" before retrieval begins.
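A rough sketch of the rewriting step might look like the following. The prompt wording, the `rewrite_query` name, and the `llm` callable (any function that takes a prompt string and returns text) are assumptions for illustration, not a specific vendor API.

```python
from typing import Callable

# Hypothetical prompt template; the wording is an assumption, tune it for your model.
REWRITE_TEMPLATE = (
    "Rewrite the final user question so it can be understood without the "
    "conversation. Resolve pronouns and vague references.\n\n"
    "Conversation:\n{history}\n\nQuestion: {question}\nStandalone question:"
)

def rewrite_query(question: str, history: list[str], llm: Callable[[str], str]) -> str:
    """Ask a model for a standalone version of the question before retrieval."""
    prompt = REWRITE_TEMPLATE.format(history="\n".join(history), question=question)
    rewritten = llm(prompt).strip()
    # Fall back to the original question if the model returns nothing usable.
    return rewritten or question
```

The fallback matters in production: a failed or empty rewrite should degrade to the original query rather than to an empty search.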

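Hypothetical Document Embeddings (HyDE) generates a hypothetical answer first, then uses that answer's embedding for similarity search. This often retrieves better chunks than the original query would, because a drafted answer tends to look more like the stored documents than a short question does.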
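The control flow is small enough to sketch in a few lines. Everything passed in here is hypothetical: `generate` stands for an LLM call, `embed` for an embedding model, and `search` for a vector store lookup that takes a vector and a result count.

```python
from typing import Callable, Sequence

Vector = Sequence[float]

def hyde_search(
    query: str,
    generate: Callable[[str], str],          # assumed LLM call: prompt -> text
    embed: Callable[[str], Vector],          # assumed embedding call: text -> vector
    search: Callable[[Vector, int], list],   # assumed vector store call: (vector, k) -> hits
    k: int = 5,
) -> list:
    """Search with the embedding of a drafted answer instead of the raw query."""
    draft = generate(f"Write a short passage that answers: {query}")
    # The draft may be factually wrong; only its embedding is used for retrieval.
    return search(embed(draft), k)
```

Note the extra model call per query. That is a real latency and cost trade-off, which is worth measuring before enabling it for every request.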

Hybrid search blends dense retrieval with sparse keyword matching. Vector similarity finds semantically related content. BM25 catches exact terms that embedding models miss.
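One common way to blend the two result lists is reciprocal rank fusion (RRF). This is one option among several, not necessarily what any given vector database does internally. The sketch below assumes each retriever returns an ordered list of chunk IDs; the IDs and the constant `k = 60` are illustrative.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of chunk IDs into one ranking.

    Each list contributes 1 / (k + rank) per chunk, so chunks that rank well
    in more than one list rise to the top.
    """
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Sort by fused score, breaking ties by ID so the output is deterministic.
    return sorted(scores, key=lambda cid: (-scores[cid], cid))

dense_hits = ["c7", "c2", "c9"]    # from vector similarity
sparse_hits = ["c2", "c4", "c7"]   # from BM25 keyword search
fused = reciprocal_rank_fusion([dense_hits, sparse_hits])
print(fused)  # ['c2', 'c7', 'c4', 'c9']
```

RRF only needs ranks, not raw scores, which sidesteps the problem that cosine similarities and BM25 scores live on different scales.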

Metadata filtering extracts constraints before searching. "Last month" becomes a date filter. "In the presentation" filters to .pptx files. User context restricts results to permitted documents.
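As a rough illustration, the constraint extraction can start as plain rules before graduating to anything smarter. The filter keys (`doc_id_in`, `date_from`, `date_to`, `file_ext`) are made-up names; map them onto whatever filter syntax your vector store supports.

```python
import re
from datetime import date, timedelta

def extract_filters(query: str, today: date, allowed_doc_ids: set[str]) -> dict:
    """Turn phrases in the query, plus user permissions, into search filters."""
    # Permissions always apply, whatever the query says.
    filters: dict = {"doc_id_in": sorted(allowed_doc_ids)}
    text = query.lower()
    if "last month" in text:
        first_of_this_month = today.replace(day=1)
        last_of_prev_month = first_of_this_month - timedelta(days=1)
        filters["date_from"] = last_of_prev_month.replace(day=1)
        filters["date_to"] = last_of_prev_month
    if re.search(r"\b(presentation|slides?|deck)\b", text):
        filters["file_ext"] = ".pptx"
    return filters

filters = extract_filters(
    "What changed in the presentation last month?",
    today=date(2024, 3, 15),
    allowed_doc_ids={"d2", "d1"},
)
print(filters)
```

Passing `today` in explicitly, rather than calling `date.today()` inside the function, keeps the date logic testable.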

Reranking uses a cross-encoder to score each query-chunk pair and select the top N. This step trades speed for precision.
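The shape of the reranking step is simple, even though the scoring model is not. In this sketch the `score` argument is where a real cross-encoder would plug in; `overlap_score` is only a toy stand-in based on word overlap so that the example runs without a model.

```python
from typing import Callable

def rerank(
    query: str,
    chunks: list[str],
    score: Callable[[str, str], float],
    top_n: int = 3,
) -> list[str]:
    """Score every (query, chunk) pair and keep the best top_n chunks."""
    scored = [(score(query, chunk), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:top_n]]

def overlap_score(query: str, chunk: str) -> float:
    """Toy scorer (NOT a cross-encoder): fraction of query words found in the chunk."""
    query_words = set(query.lower().split())
    chunk_words = set(chunk.lower().split())
    return len(query_words & chunk_words) / len(query_words) if query_words else 0.0

candidates = [
    "shipping times vary",
    "the refund policy sets a deadline of 30 days",
    "refund form",
]
best = rerank("refund policy deadline", candidates, overlap_score, top_n=2)
print(best)
```

Because the scorer runs once per candidate, the cost of this step grows with the number of chunks you retrieve, which is why it is usually applied to a few dozen candidates rather than the whole corpus.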

Context enrichment makes retrieved chunks useful. Parent chunks, summary chunks, and sliding windows transform fragments into something the model can reason over.
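A minimal sketch of the sliding-window idea, assuming chunks are stored in document order and retrieval returns their indices. The function name and the `window` parameter are illustrative.

```python
def expand_with_neighbours(chunks: list[str], hit_indices: list[int], window: int = 1) -> list[str]:
    """Widen each retrieved chunk to include its neighbours, merging overlaps."""
    keep: set[int] = set()
    for i in hit_indices:
        keep.update(range(max(0, i - window), min(len(chunks), i + window + 1)))

    passages: list[str] = []
    current: list[str] = []
    previous = None
    for i in sorted(keep):
        # A gap in the indices starts a new passage.
        if previous is not None and i != previous + 1:
            passages.append(" ".join(current))
            current = []
        current.append(chunks[i])
        previous = i
    if current:
        passages.append(" ".join(current))
    return passages

doc_chunks = ["A.", "B.", "C.", "D.", "E.", "F."]
passages = expand_with_neighbours(doc_chunks, hit_indices=[1, 5], window=1)
print(passages)  # ['A. B. C.', 'E. F.']
```

Merging adjacent windows avoids sending the same sentence to the model twice when two hits sit close together.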

This tightens retrieval quality. But better retrieval doesn't guarantee a production-ready system.

What breaks at scale

Advanced RAG solves the quality problem. It doesn't solve the systems problem. When you reach a hundred concurrent users, four things start failing in a predictable order: latency, cost, cold starts, and trust.

Latency

Sequential execution becomes the choke point. Embeddings block vector search. Vector search blocks reranking. Everything blocks the model call. Response times drift into three to five seconds even when nothing is technically broken.

The fixes are architectural. Retrieval should be asynchronous and pipelined. Responses should stream to reduce perceived latency. Data sources should be queried in parallel. Vector database connections should be pooled. Hot chunks should be cached so repeat queries skip the vector store entirely.
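Querying data sources in parallel is the easiest of these to show. The sketch below uses `asyncio.gather` with simulated delays; `search_source` and the source names are placeholders for real async clients.

```python
import asyncio
import time

async def search_source(name: str, delay: float) -> list[str]:
    """Placeholder for an async search client; the sleep simulates network latency."""
    await asyncio.sleep(delay)
    return [f"{name}-hit"]

async def retrieve_all(sources: dict[str, float]) -> list[str]:
    # gather() runs the searches concurrently and returns results in input order.
    results = await asyncio.gather(
        *(search_source(name, delay) for name, delay in sources.items())
    )
    return [hit for hits in results for hit in hits]

start = time.perf_counter()
hits = asyncio.run(retrieve_all({"vectors": 0.2, "keywords": 0.2, "wiki": 0.2}))
elapsed = time.perf_counter() - start
print(hits, f"{elapsed:.2f}s")
```

Run one after another, three 0.2 second searches would take about 0.6 seconds. Run concurrently, the total is close to the slowest single search.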

Cost

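Naive RAG is deceptively expensive. Every query pays for an embedding call, a vector search, and a generation call over several retrieved chunks, so even a thousand daily queries add up. Enterprise workloads magnify the problem.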

Most cost is wasted work. Semantic caching avoids recomputing answers that resemble each other. Smarter chunk sizing avoids stuffing the context window with noise. Fewer retrieved chunks, combined with a strong reranker, reduce token use. Per-endpoint cost tracking exposes patterns that would otherwise stay invisible.

A production system treats every unnecessary call as a liability.

Cold starts

Cold starts hit when your system initialises from scratch after a deployment, restart, or document ingestion. The first request pays the full cost of loading models, warming connections, and computing embeddings whilst subsequent requests benefit from caches and hot services.

There are practical ways around this. Precompute embeddings for common query shapes. Cache embeddings for boilerplate questions rather than computing fresh ones each time. Warm the system after deployment to prime caches. Serve partial results whilst ingestion continues in the background. Keep embedding services warm so they don't spin up each time.
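Here is a small sketch of warming an embedding cache after deployment. `embed_cached` wraps a toy embedding function with `functools.lru_cache`; the call counter exists only to show that the warmed query skips recomputation. The normalisation step (strip and lowercase) is an assumption about what counts as "the same" boilerplate question.

```python
from functools import lru_cache

CALLS = {"embed": 0}  # counts real computations, for demonstration only

@lru_cache(maxsize=4096)
def embed_cached(text: str) -> tuple[float, ...]:
    """Toy embedding; in practice this would call the embedding service."""
    CALLS["embed"] += 1
    return (float(len(text)), float(sum(map(ord, text)) % 97))

def normalise(query: str) -> str:
    return query.strip().lower()

def warm_up(common_queries: list[str]) -> None:
    """Run once after deployment so the first real users hit a primed cache."""
    for query in common_queries:
        embed_cached(normalise(query))

warm_up(["How do I reset my password?", "What is the refund policy?"])
embed_cached(normalise("  What is the refund policy? "))  # served from cache
print(CALLS["embed"], embed_cached.cache_info().hits)
```

An in-process cache like this is lost on every restart, which is exactly why the warm-up call belongs in the deployment path rather than being left to the first unlucky user.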

Cold starts disproportionately shape user perception. Fixing them pays immediate UX dividends.

Trust

RAG reduces hallucinations, but it doesn't eliminate them. Retrieval gaps and ambiguous questions create space for fabrication.

A robust system layers defences. Citation tracking ties each sentence to actual chunks. Relevance scores help the model calibrate confidence. Generated answers can be run back through retrieval to check that the retrieved chunks actually cover what the answer claims. Simple contradiction checks catch internal inconsistencies. Downvoted answers get logged for pattern analysis rather than dismissed as one-off failures.
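Citation tracking can start with a very plain check. The sketch below assumes the model has been prompted to tag each sentence with markers like `[c1]` that refer to retrieved chunk IDs; that marker format is an assumption, not a standard. It verifies only that citations exist and point at retrieved chunks, not that the chunk supports the claim.

```python
import re

def check_citations(answer: str, retrieved_ids: set[str]) -> list[str]:
    """Flag sentences with no citation or with a citation to an unknown chunk."""
    problems: list[str] = []
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", answer) if s.strip()]
    for sentence in sentences:
        cited = set(re.findall(r"\[(\w+)\]", sentence))
        if not cited:
            problems.append(f"uncited: {sentence}")
        elif not cited <= retrieved_ids:
            unknown = sorted(cited - retrieved_ids)
            problems.append(f"unknown source {unknown}: {sentence}")
    return problems

answer = "Refunds take five days [c1]. Holidays add a delay [c9]. Contact support."
problems = check_citations(answer, retrieved_ids={"c1", "c2"})
for problem in problems:
    print(problem)
```

Flagged sentences can be dropped, regenerated, or logged next to the downvote data, depending on how strict the product needs to be.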

Good hallucination control is a feedback discipline, not a switch you flip.

The real lesson

Naive RAG gets you a demo. Advanced RAG gets you better retrieval. But neither is enough for real traffic. Production RAG is an engineering problem. The plumbing matters more than the prompt.

If you want a system that stays steady at ten thousand queries a day, the gains come from architecture, caching, concurrency, and operational discipline. RAG isn't an LLM trick. It's a systems problem wrapped around a language model.

What's been your experience scaling RAG in production? What broke first in your system? I'd love to hear what surprised you.
