The Context Advantage

We need to talk about the "vibes."

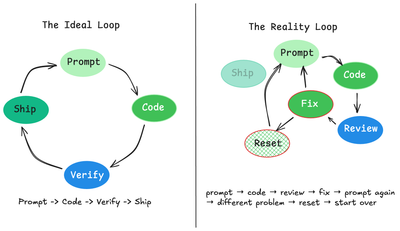

If you browse Twitter/X, AI coding looks like magic. You type a prompt, a cursor flies across the screen, and an app appears. It feels fast. It feels effortless.

But if you actually manage a production codebase, you know the reality is different. We are entering the era of "Slop": code that looks correct at a glance and passes the linter, but is subtly broken, unmaintainable, or architecturally incoherent.

"Vibe Coding," the act of chatting with an LLM until the code works, is a dead end for professional engineering. It works for prototypes, but it destroys production systems.

The Problem: The Slop Curve

Everyone feels faster with AI. But "feeling" faster and shipping reliable software are two different metrics.

Recent data confirms what many of us have suspected. The 100,000 Developer Study by Yegor Denisov-Blanch (Stanford) paints a stark picture of the "Slop Curve":

- Greenfield Projects (New Repos): AI assistants provide a massive productivity boost (~20%+). When there is no existing code to break, AI is a force multiplier.

- Brownfield Projects (Legacy/Complex Repos): The gains flatten or turn negative.

This is because LLMs struggle with implicit context. In a complex system, the "correct" solution often involves refactoring existing patterns, not just gluing on new functions. AI defaults to addition because it’s safer.

The result is technical debt at hyper-speed. You save 10 minutes typing the code, but you lose 10 hours later in "churn": debugging obscure edge cases and reworking bad architecture that the AI introduced because it didn't understand the system's constraints.

The Science: The "Dumb Zone"

The failure mode of Vibe Coding isn't just about the model's intelligence; it's about Context Saturation.

LLMs are stateless. They only know what exists in their current context window. When developers get lazy, they dump entire file trees or massive JSON objects into the chat to "give the AI context."

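This triggers a phenomenon known as "Lost in the Middle": models tend to use information at the start and end of a long context more reliably than information buried in the middle.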

As you fill a context window past the ~40-60% mark, the model’s reasoning capabilities degrade. Dex (from HumanLayer) calls this the "Dumb Zone." In this zone, the model starts hallucinating libraries, forgetting established constraints, and missing obvious bugs.
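To make the threshold concrete, here is a minimal sketch of a context budget check. It is an illustration, not a measurement tool: the `ContextBudget` class, the 4-characters-per-token estimate, and the 0.4 soft limit (the low end of the range above) are all assumptions. A real setup would count tokens with the model's own tokenizer.

```python
from __future__ import annotations

from dataclasses import dataclass

# Assumption: roughly 4 characters per token. Real tokenizers vary.
CHARS_PER_TOKEN = 4


@dataclass
class ContextBudget:
    """Hypothetical helper that tracks how full a context window is."""

    window_tokens: int        # size of the model's context window
    soft_limit: float = 0.4   # assumed threshold, low end of ~40-60%

    def estimate_tokens(self, text: str) -> int:
        # Crude length-based estimate; swap in a real tokenizer if available.
        return len(text) // CHARS_PER_TOKEN

    def utilization(self, messages: list[str]) -> float:
        # Fraction of the window consumed by all messages so far.
        used = sum(self.estimate_tokens(m) for m in messages)
        return used / self.window_tokens

    def in_dumb_zone(self, messages: list[str]) -> bool:
        # True once the conversation passes the soft limit.
        return self.utilization(messages) > self.soft_limit
```

A check like `budget.in_dumb_zone(history)` before each new prompt gives you a signal to compact and restart instead of pushing on.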

Worse, you create a Poisoned Trajectory.

If your chat history contains five failed attempts, error logs, and you yelling "fix it," the model is statistically more likely to fail again. It is pattern-matching against a history of failure. Vibe Coding encourages you to spiral; Context Engineering forces you to stop.

The Fix: Context Engineering & The RPI Workflow

The answer isn't to stop using AI. It's to stop treating it like a chatbot and start treating it like a component in a system. We need Context Engineering: the skill of curating exactly what the model sees to keep it in the "Smart Zone."

This requires a move to Intentional Compaction. Instead of a long, winding chat history, we must periodically summarise the current state into a single Markdown file (a "Snapshot of Truth") and feed that into a fresh, empty context window.
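One way to picture Intentional Compaction is as a small data structure that renders to Markdown. The sketch below is an assumption about what a snapshot might contain: the `Snapshot` fields, the section titles, and the `role`/`content` message shape are illustrative choices, not a standard.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Snapshot:
    """Hypothetical 'Snapshot of Truth': only what the next session needs."""

    goal: str
    decisions: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)
    open_questions: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        def section(title: str, items: list[str]) -> str:
            # Render a bullet list, or a placeholder when the list is empty.
            body = "\n".join(f"- {item}" for item in items) or "- (none)"
            return f"## {title}\n{body}"

        parts = [
            "# Snapshot of Truth",
            f"## Goal\n{self.goal}",
            section("Decisions", self.decisions),
            section("Constraints", self.constraints),
            section("Open Questions", self.open_questions),
        ]
        return "\n\n".join(parts) + "\n"


def fresh_context(snapshot: Snapshot, task: str) -> list[dict[str, str]]:
    # A new conversation holds only the snapshot and the next task.
    # No failed attempts, error logs, or old chat turns are carried over.
    return [
        {"role": "system", "content": snapshot.to_markdown()},
        {"role": "user", "content": task},
    ]
```

The point of the design is what gets left out: the old history is discarded on purpose, and only curated facts survive the reset.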

This philosophy powers the RPI Workflow (Research, Plan, Implement), a structured loop that prevents Slop.

Phase 1: Research

The agent does not write code. It reads. It explores the codebase to understand the architecture, dependencies, and existing patterns. It builds a map of the territory without the pressure to change it.

Phase 2: Plan

The agent writes a detailed specification. This includes pseudo-code, file paths, and testing strategies.

- The Checkpoint: This is where the human enters. You review the PLAN, not the code. If the plan is hallucinated or architecturally unsound, you kill it here.

Phase 3: Implement

The agent executes the plan in a fresh context window. It doesn't need to "think" deeply anymore; it just follows the instructions derived from the pristine context of Phases 1 and 2.
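The three phases can be sketched as a small loop. Everything here is hypothetical: `model` stands in for whatever LLM call you use (prompt in, text out), `approve` stands in for the human checkpoint, and the prompt wording is only a placeholder.

```python
from __future__ import annotations

from typing import Callable, Optional

# Assumption: a model is any function that maps a prompt to a text response.
Model = Callable[[str], str]


def run_rpi(
    task: str,
    model: Model,
    approve: Callable[[str], bool],
) -> Optional[str]:
    """Run Research, Plan, Implement. Returns None if the plan is rejected."""
    # Phase 1: Research. The agent reads and reports; it writes no code.
    research = model(
        f"RESEARCH ONLY. Do not write code.\nTask: {task}"
    )

    # Phase 2: Plan. The agent turns the research notes into a specification.
    plan = model(
        "Write a PLAN with pseudo-code, file paths, and a testing strategy.\n"
        f"Task: {task}\nResearch notes:\n{research}"
    )

    # The Checkpoint: a human reviews the plan, not the code.
    if not approve(plan):
        return None

    # Phase 3: Implement. The prompt carries only the approved plan,
    # so the research notes and earlier turns stay out of this context.
    return model(f"IMPLEMENT exactly this plan:\n{plan}")
```

Each call builds its prompt from scratch, which is what keeps Phase 3 in a fresh context: the only thing it inherits is the plan a human already signed off on.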

Closing the Skill Rift

We are currently seeing a dangerous "Skill Rift" in engineering teams.

- Juniors love AI because it lets them punch above their weight. They generate massive PRs full of code they barely understand.

- Seniors hate AI because they have to review those massive PRs. They are drowning in Slop.

Reviewing 500 lines of AI-generated code is impossible; your eyes glaze over. But reviewing a 50-line AI-generated Plan is high-leverage.

By shifting the review process left, to the planning phase, seniors can align the agent’s trajectory with the system’s architecture before a single line of code is written. This is Mental Alignment.

What You Should Do Today

If you are just "chatting" with your code, you are destined to drown in Slop. The future belongs to engineers who can manage state, control trajectory, and curate context.

Actionable steps for your team:

- Ban Vibe Coding for Features: Chat is for questions; workflows are for code.

- No Plan, No PR: Refuse to review AI-generated code unless it comes with an AI-generated Plan that was approved before implementation.

- Reset Context Often: If an agent gets confused, do not argue with it. Wipe the context, feed it a summary, and start fresh.
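The "No Plan, No PR" rule is easy to automate as a gate. This is a rough sketch under stated assumptions: the `PLAN.md` filename, the `Status: approved` marker line, and the idea of passing PR files as a path-to-content mapping are all hypothetical conventions your team would need to define.

```python
from __future__ import annotations


def has_approved_plan(
    pr_files: dict[str, str],
    plan_path: str = "PLAN.md",            # assumed filename
    marker: str = "Status: approved",      # assumed approval marker
) -> bool:
    """Return True if the PR ships a plan file containing the marker line."""
    plan_text = pr_files.get(plan_path)
    if plan_text is None:
        # No plan in the PR at all: refuse to review.
        return False
    # Require the marker on its own line, ignoring case and whitespace.
    return any(
        line.strip().lower() == marker.lower()
        for line in plan_text.splitlines()
    )
```

A check like this cannot tell you whether the plan is any good. It only guarantees that one exists and that someone marked it approved before the code arrived.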

Stop vibing. Start engineering.
