TIL: How Transformers work

If you want to actually understand transformers, this guide nails it. I've read a bunch of explanations and this one finally made the pieces fit together.

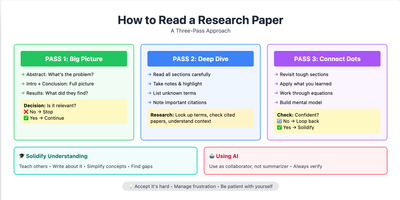

What works is that it doesn't just throw the architecture at you. It walks you through the whole messy history first. RNNs couldn't remember long sequences. LSTMs tried to fix that but were painfully slow, since they still had to process tokens one at a time. CNNs were faster but struggled to hold long-range context. Then Google Brain basically said "screw it, let's bin recurrence completely and just use attention." That's how we got the famous 2017 paper, "Attention Is All You Need." Once you see that chain of failures and fixes, transformers stop being this weird abstract thing. You get why masked attention exists, why residual connections matter, why positional encodings had to be added. It all clicks because you see what problem each bit solves.
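
To make two of those fixes concrete for myself, I wrote a minimal sketch in plain Python. The helper names `sinusoidal_positional_encoding` and `causal_mask` are my own, not from the guide. Attention on its own has no sense of word order, so the original paper adds a sine/cosine position vector to each token embedding. A decoder generating text left to right also must not peek at future tokens, which is what the mask enforces. Treat this as a toy illustration, not a production implementation.

```python
import math

def sinusoidal_positional_encoding(seq_len, d_model):
    """Return a seq_len x d_model table of sinusoidal position vectors.

    Even dimensions use sin, odd dimensions use cos; each pair of
    dimensions (2k, 2k+1) shares a frequency that shrinks as k grows.
    """
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            k = i // 2  # dimensions 2k and 2k+1 share the same frequency
            angle = pos / (10000 ** (2 * k / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def causal_mask(seq_len):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]

if __name__ == "__main__":
    print(sinusoidal_positional_encoding(3, 4)[0])
    for row in causal_mask(3):
        print(row)
```

In a real model the position vectors get added to the token embeddings, and the mask is applied to the attention scores before the softmax so blocked positions end up with zero weight.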

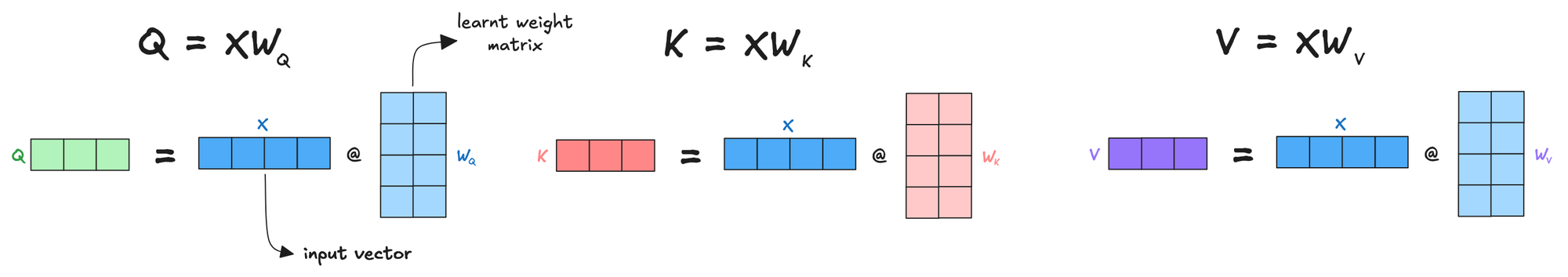

The hand-drawn illustrations help too. There's a Google search analogy for queries, keys, and values that made way more sense than the maths notation ever did. And the water pressure metaphor for residual connections actually stuck with me. It took the author months to research and draw everything. You can tell because it doesn't feel rushed or surface-level. If you've been putting this off because most explanations either skim over details or drown you in equations, this one gets the balance right.
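
Here's how I'd roughly map the search analogy onto code. This is my own sketch, not necessarily how the guide phrases it. The query is what you type, the keys are like page titles you match against, and the values are the page contents. Instead of returning one top hit, attention returns a blend of all values, weighted by how well each key matches. The functions `softmax`, `attention` and `with_residual` are hypothetical helper names, and everything works on plain Python lists for a single query.

```python
import math

def softmax(scores):
    """Numerically stable softmax; assumes at least one finite score."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # exp(-inf) becomes 0.0
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values, mask=None):
    """Scaled dot-product attention for one query vector.

    Scores each key by its dot product with the query (scaled by
    sqrt of the dimension), turns scores into weights with softmax,
    then returns the weighted sum of the value vectors.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    if mask is not None:
        # Blocked positions get -inf so softmax gives them zero weight.
        scores = [s if allowed else float("-inf") for s, allowed in zip(scores, mask)]
    weights = softmax(scores)
    dim = len(values[0])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]

def with_residual(x, sublayer_output):
    """Residual connection: add a sublayer's output back onto its input."""
    return [a + b for a, b in zip(x, sublayer_output)]

if __name__ == "__main__":
    query = [1.0, 0.0]
    keys = [[1.0, 0.0], [1.0, 0.0]]
    values = [[2.0, 0.0], [0.0, 4.0]]
    out = attention(query, keys, values)
    print(out)                       # equal matches, so an even blend
    print(with_residual(query, out))
```

The residual part is the simple bit: whatever a sublayer computes gets added onto what went in, so the original signal always has a direct path through the stack.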

https://www.krupadave.com/articles/everything-about-transformers?x=v3
