TIL: Learning New Tech With AI Assistance Might Backfire

I read a paper from Anthropic this week that I keep coming back to, mostly because it describes exactly how I've been using AI and suggests I might be sabotaging myself.

The paper reports a randomised experiment in which 52 professional developers learned a Python async library that was new to them (Trio). Half had access to an AI coding assistant and half didn't. Both groups could use documentation and web search.

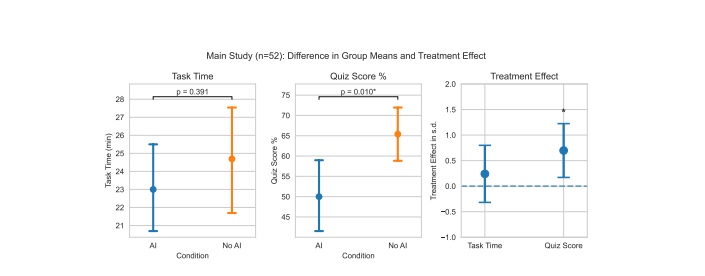

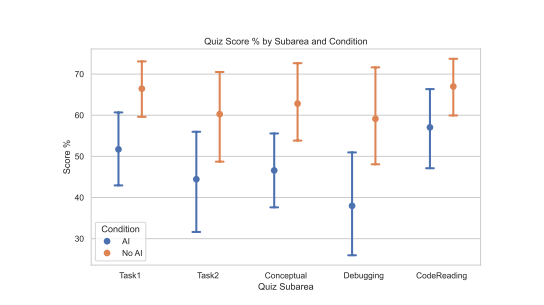

The AI group scored 17% worse on comprehension tests afterwards, about two grade points lower. What's strange is they weren't even faster on average. You'd expect a trade-off where maybe you learn less but ship quicker. But the average completion times were basically identical between groups.

Some participants spent up to 11 minutes figuring out what to ask the AI. The time saved on actual coding got burned on prompt wrangling. The only people who genuinely finished faster were the ones who just pasted whatever the AI gave them without thinking. Those same people had the lowest quiz scores.

The six patterns

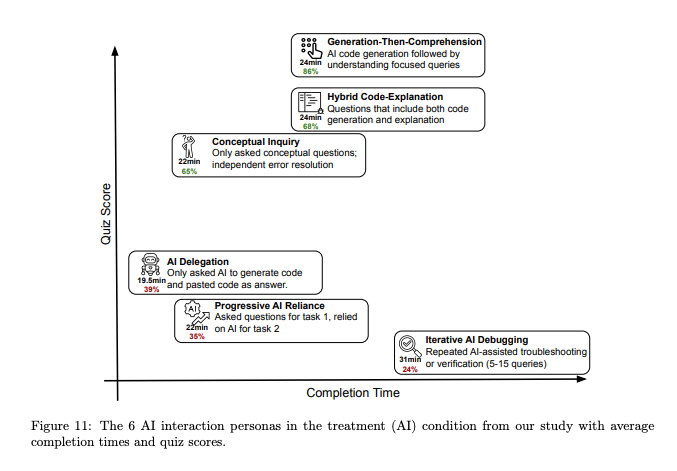

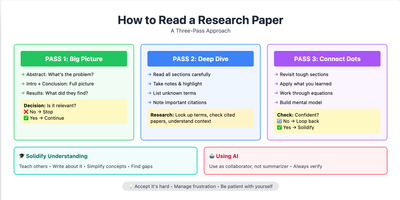

The researchers watched every screen recording and categorised how people actually used AI when learning something new.

Three patterns correlated with poor learning outcomes. AI Delegation is the obvious one, where you ask AI to write the code, paste it, and move on. Fastest completion time, lowest scores. Progressive Reliance is more insidious because you start out engaged, maybe ask a clarifying question or two, but gradually give up and let AI handle everything. You end up not learning the second half of the material at all. Then there's Iterative Debugging, where you keep feeding errors back to AI and asking it to fix things. You're technically interacting with AI a lot, but you're not building any mental model of what's going wrong.

Three patterns preserved learning even with AI access. Some participants practised Conceptual Inquiry, only asking AI conceptual questions and then writing the code themselves. They hit plenty of errors but worked through them independently. Others used Hybrid Code-Explanation, asking for an explanation of how the code works whenever they asked for code. It takes longer but you're actually processing what you receive. The most interesting one was Generation Then Comprehension, where participants got the code first but then followed up with questions about why it works. On the surface it looks like delegation, but the follow-up questions make all the difference.

Why the control group learned more

The control group hit about three times as many errors as the AI group (a median of 3 versus 1). Working through TypeError exceptions and RuntimeWarnings forced them to actually understand the difference between passing a coroutine and passing an async function, or when you need await rather than start_soon.
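To make the coroutine versus async function distinction concrete, here's a minimal sketch using only the standard library. It isn't Trio code and it isn't from the paper: `fetch`, `describe` and `run_async_fn` are hypothetical names I made up, and `run_async_fn` only imitates the calling convention of Trio's `nursery.start_soon(fn, *args)`, which takes the async function and its arguments separately rather than an already-called coroutine.

```python
import asyncio
import inspect


async def fetch(name):
    # Stand-in for real async work.
    await asyncio.sleep(0)
    return f"done: {name}"


def describe(obj):
    """Say whether obj is an async function or the coroutine object a call returns."""
    if inspect.iscoroutinefunction(obj):
        return "async function"
    if inspect.iscoroutine(obj):
        return "coroutine object"
    return "something else"


def run_async_fn(async_fn, *args):
    """Hypothetical spawner-style helper: takes the function and its args, not a call."""
    if inspect.iscoroutine(async_fn):
        # Close it so Python doesn't warn that the coroutine was never awaited.
        async_fn.close()
        raise TypeError("expected an async function, got a coroutine object")
    return asyncio.run(async_fn(*args))


print(describe(fetch))            # the function itself
pending = fetch("a")              # calling without await runs nothing yet
print(describe(pending))
pending.close()
print(run_async_fn(fetch, "a"))   # pass the function plus its arguments
```

Calling `run_async_fn(fetch("a"))` instead raises the TypeError, which is roughly the kind of mistake the control group had to work through on their own.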

The biggest score gap between groups was specifically on debugging questions.

This makes sense once you think about it. You can't get good at debugging if you never sit with broken code long enough to figure out why it's broken.

Participant feedback

From the AI group: "I feel like I got lazy" and "there are still a lot of gaps in my understanding" and "I wish I'd taken the time to understand the explanations from the AI a bit more."

From the control group: "This was fun" and "The programming tasks did a good job of helping me understand how Trio works."

The supervision problem

There's a circular issue here that the paper points out. As AI writes more production code, we need developers who can supervise it, catch bugs, understand failures, and verify correctness. But if those developers learned their craft with heavy AI assistance, they may never have built those verification skills in the first place. The people we're counting on to catch AI mistakes might be the least equipped to do so.

What I'm changing

I'm not going to stop using AI for coding. But for learning new libraries or frameworks, I'm going to be more deliberate.

When I hit an error, I'll resist the immediate impulse to paste it into Claude. I've noticed I do this almost reflexively now, and this paper suggests that reflex is costing me something. Working through the error is where the understanding comes from.

If I do ask AI to generate code, I'll follow up with questions about how it works before moving on. The "generation then comprehension" pattern seems to preserve most of the learning while still getting help.

For genuinely new concepts, I'll stick to asking conceptual questions rather than asking for implementations. Use AI to build understanding, not to skip past it.

The paper's final line stuck with me. "AI-enhanced productivity is not a shortcut to competence." Feels obvious when you say it out loud, but easy to forget when you're trying to ship something and just want the error to go away.

