Why AI Coding Advice Contradicts Itself

If you've tried to get better at AI-assisted coding, you've probably noticed something odd. The advice contradicts itself.

Not in a hand-wavy "it depends" way. Actual opposing recommendations from people who all claim success.

The Contradictions

➡ Keep prompts short vs write 500-word voice rambles

One school says the best results come from knowing exactly what you want and describing it in as few words as possible. On this view, more input means lower quality: the model has a limited budget to spend on your request, so you shrink the problem space.

Another school swears by voice transcription. Dictate long, rambling prompts. Talk at 120 WPM instead of typing at 70. The model figures out what you mean. The verbosity is a feature, not a bug.
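To put rough numbers on the speed claim, here is a small sketch using the figures from this section: a 500-word prompt, 120 WPM speaking, 70 WPM typing. The function name is made up for illustration, and the calculation assumes a steady rate with no time spent fixing transcription errors or typos.

```python
def minutes_to_produce(words: int, words_per_minute: float) -> float:
    """Time in minutes to produce `words` at a steady rate."""
    return words / words_per_minute


PROMPT_WORDS = 500   # the "500-word voice ramble"
SPEAKING_WPM = 120   # speaking rate quoted in the text
TYPING_WPM = 70      # typing rate quoted in the text

spoken = minutes_to_produce(PROMPT_WORDS, SPEAKING_WPM)
typed = minutes_to_produce(PROMPT_WORDS, TYPING_WPM)

print(f"spoken: {spoken:.1f} min, typed: {typed:.1f} min")
# prints "spoken: 4.2 min, typed: 7.1 min"
```

On those assumptions, dictating saves about three minutes per long prompt. Whether the extra words help or hurt the output is the part the two schools disagree on.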

Both approaches work. For different people.

➡ Use planning mode vs planning mode lies to you

The official advice: use planning mode. Go back and forth until you like the plan. Review it. Then execute.

The problem: execution diverges from the plan. The model agrees to do X, then does Y. So you end up copying the plan into a document and executing it piece by piece, checking each step.
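The "execute piece by piece, checking each step" workflow can be sketched as a loop that stops at the first step that fails review. This is only an illustration: `run_plan`, `execute`, and `verify` are hypothetical names, not part of any real tool, and the toy stand-ins below replace the model and the human reviewer.

```python
from typing import Callable, List, Tuple


def run_plan(
    steps: List[str],
    execute: Callable[[str], str],
    verify: Callable[[str, str], bool],
) -> Tuple[List[str], int]:
    """Run steps in order; stop at the first one whose output fails verification.

    Returns (outputs of the verified steps, index of the failing step or -1).
    """
    accepted: List[str] = []
    for index, step in enumerate(steps):
        output = execute(step)        # hypothetical: ask the model to do one step
        if not verify(step, output):  # hypothetical: your review, tests, or a linter
            return accepted, index
        accepted.append(output)
    return accepted, -1


# Toy stand-ins so the sketch runs without any AI tool attached.
def fake_execute(step: str) -> str:
    # Simulate the model agreeing to X, then doing Y on one step.
    if step == "add migration":
        return "did something else"
    return f"done: {step}"


def fake_verify(step: str, output: str) -> bool:
    return output == f"done: {step}"


plan = ["add model", "add migration", "add endpoint"]
accepted, failed_at = run_plan(plan, fake_execute, fake_verify)
print(accepted, failed_at)
# prints "['done: add model'] 1"
```

The loop itself is trivial. The cost sits in `verify`, which in practice is you reading the output.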

Now you're writing specs, reviewing AI-generated plans, chunking those plans, and monitoring execution. At some point you have to ask if this is faster than just writing the code.

➡ Maintain instruction files vs that's a red flag

Keep a CLAUDE.md or AGENTS.md file. Add context the model needs. Update it as you learn what trips it up.

Some people update these files multiple times a week.

But think about what that means. You're constantly discovering new ways the model misunderstands you. Each update patches another failure mode. That's not documentation. That's fighting your tools.

The counter-argument: it's like onboarding docs for a new engineer. Except onboarding docs don't need updates every few days. Your code conventions aren't changing that fast.

➡ Reset context constantly vs preserve conversation history

Clear your context window often. The model is freshest with a clean slate. Auto-compaction degrades quality. Start fresh when things go sideways.

But also: use iterative refinement. Have the model write code, talk through changes, ask for refactors. The conversation history IS the context. Reset and you lose it.

➡ Break tasks into tiny steps vs just write the code yourself

The micromanagement approach: break everything into small tasks. Ask for a plan. Review. Execute one step. Review. Course correct. Repeat.

But if you're doing all that work (figuring out the breakdown, reviewing every output, catching mistakes), why not just type the code?

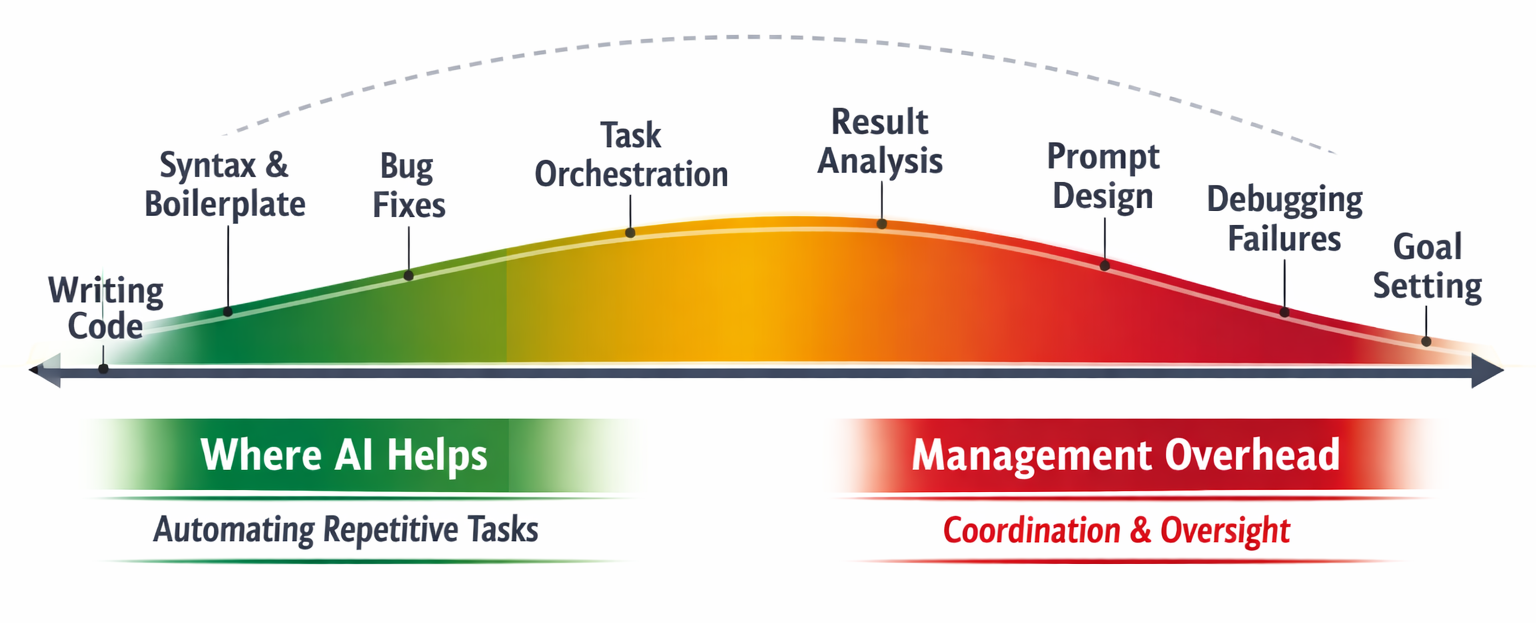

The answer is that AI generates boilerplate faster than you can type. But you still architect the solution, define the steps, and verify correctness. You've become a manager of a fast but unreliable junior developer.

Some people like managing. Some would rather code.

Why This Happens

These aren't best practices for a stable tool. They're workarounds for a moving target.

People find something that works. For their brain. For their codebase. For their type of task. Then they generalise. But the underlying conditions differ.

A greenfield React app has different constraints than a legacy Django monolith. Someone who thinks verbally gets more from voice prompts than someone who thinks by typing. Repetitive migrations benefit from detailed instruction files. One-off features might not.

The model matters too. Opus behaves differently than Sonnet. Cursor has different affordances than Claude Code. What worked six months ago might be obsolete.

So you get confident, contradictory advice. Everyone is telling the truth about their experience. Their experience just doesn't transfer cleanly.

What This Tells Us

Maybe there are no best practices yet.

Best practices emerge when a tool is stable enough that patterns generalise. We have best practices for Git because Git behaves predictably. We have best practices for code review because the dynamics of human collaboration are well understood.

AI coding tools aren't there. The models change every few months. The tooling changes faster. What worked in January might not work in June.

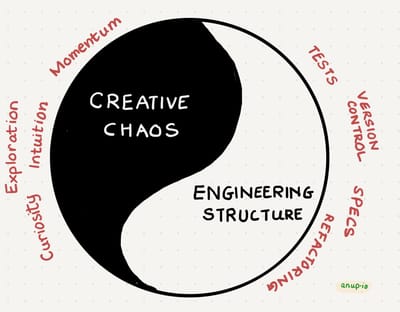

The meta-skill isn't "learn the best practices." The meta-skill is rapid experimentation. Try short prompts. Try long ones. Try planning mode. Skip it. Reset context. Preserve it. Notice what works for you, on this task, with this model, this week.

And know when to abandon an approach. If the model isn't getting it after a few attempts, don't keep pushing. Revert. Start fresh. Try something else. The sunk cost fallacy hits hard when you've spent 20 minutes crafting prompts.
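One way to make "a few attempts" concrete is to set the budget before you start. The sketch below is an assumption-laden illustration: `attempt_with_budget` is a hypothetical helper, the default of three attempts is an arbitrary choice, and `attempt` stands in for whatever a prompt-and-review cycle looks like in your setup.

```python
from typing import Callable, Optional


def attempt_with_budget(
    attempt: Callable[[int], Optional[str]],
    max_attempts: int = 3,  # assumption: "a few attempts" means three
) -> Optional[str]:
    """Call `attempt` up to `max_attempts` times; return the first non-None result.

    Returning None is the signal to revert and try a different approach.
    """
    for attempt_number in range(1, max_attempts + 1):
        result = attempt(attempt_number)
        if result is not None:
            return result
    return None


# Toy stand-ins for a prompt-and-review cycle.
def works_second_time(attempt_number: int) -> Optional[str]:
    return "patch" if attempt_number == 2 else None


def never_works(attempt_number: int) -> Optional[str]:
    return None


print(attempt_with_budget(works_second_time))  # prints "patch"
print(attempt_with_budget(never_works))        # prints "None"
```

Deciding the number up front is the point. It is much harder to walk away once you are 20 minutes in.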

The Gap

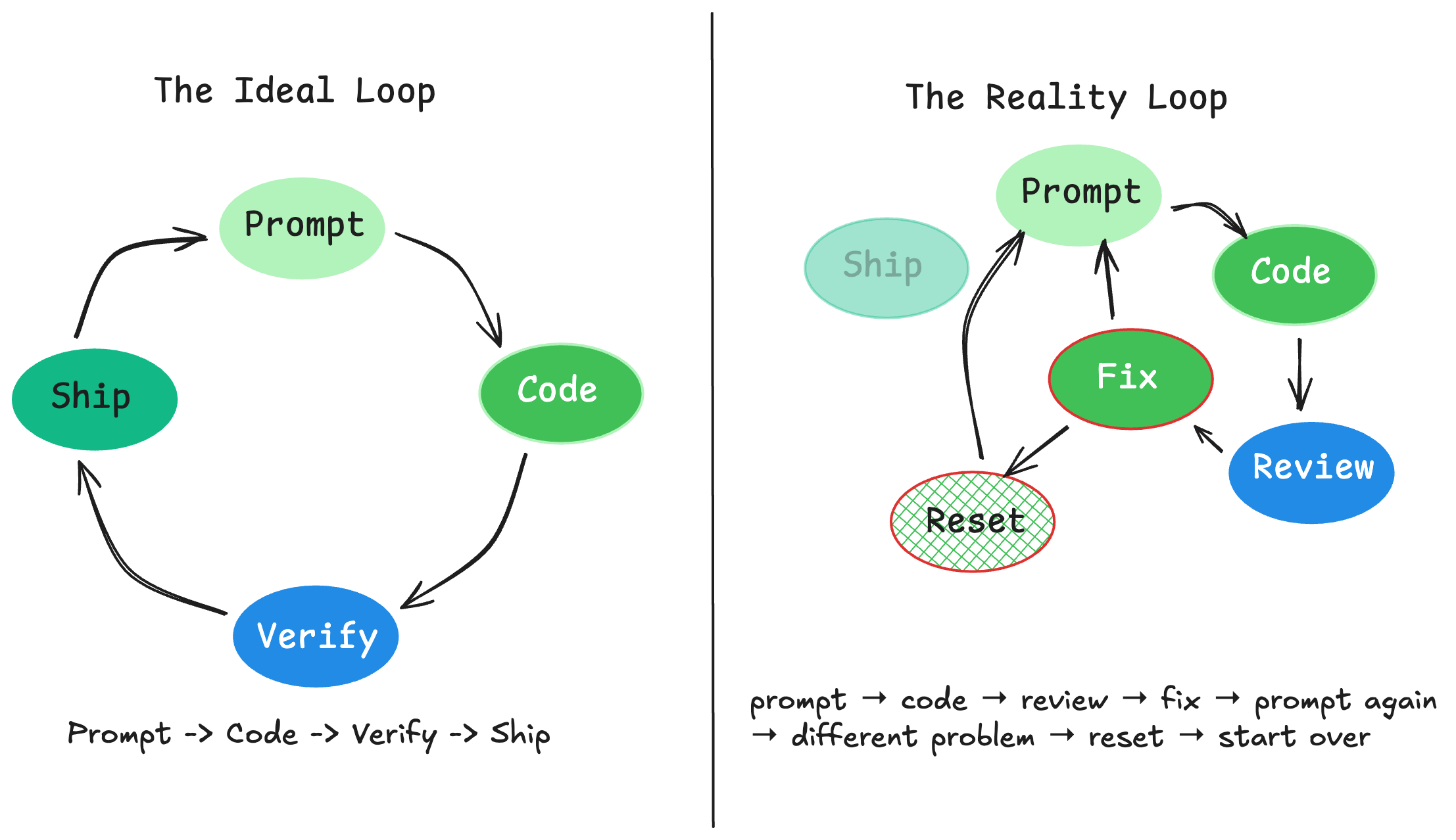

There's a gap between how AI coding is marketed and how it works in practice.

The pitch: 10x developer. Programming accessible to non-programmers.

The reality: experienced developers sharing elaborate workarounds, disagreeing about fundamentals, reviewing every line, acknowledging the code quality isn't quite there.

If you don't know what you're doing, AI coding fails as death by a thousand cuts: small mistakes you can't spot pile up. The people getting good results are the ones who'd be productive without AI. The tool gives them a speed boost on mechanical tasks. It's autocomplete on steroids, not a replacement for knowing what you're doing.

The honest answer to "how do I get AI code to 90% of what I'd write by hand" is: by being good enough to recognise the 10% gap and fix it yourself.

That's the part nobody's figured out how to skip.
