Why LLMs Confidently Hallucinate a Seahorse Emoji That Never Existed

Ask most major AI chatbots whether there's a seahorse emoji and they'll say yes with total confidence. Then ask them to show you, and they completely freak out, spitting out random fish emojis in what looks like an endless loop. Plot twist: there's no seahorse emoji. There never has been. But plenty of humans also swear they remember one.

Check out the analysis in this post 👉🏽 https://vgel.me/posts/seahorse/

It makes sense that we'd all assume it exists, though. Plenty of ocean animals have emojis, so why not seahorses? The post above digs into what's happening inside the model using an interpretability technique called the logit lens, which decodes each layer's intermediate state through the model's final output layer to see which token the model is leaning toward at that depth. The model builds up an internal concept of "seahorse + emoji" and genuinely believes it's about to output one. But when that representation reaches the final layer that picks the actual token, there's no seahorse emoji available to pick. So it grabs the closest match, like a tropical fish or a horse, and outputs that. The model doesn't realize it messed up until it sees its own wrong answer. Then some models catch themselves and backtrack, while others spiral into emoji hell.

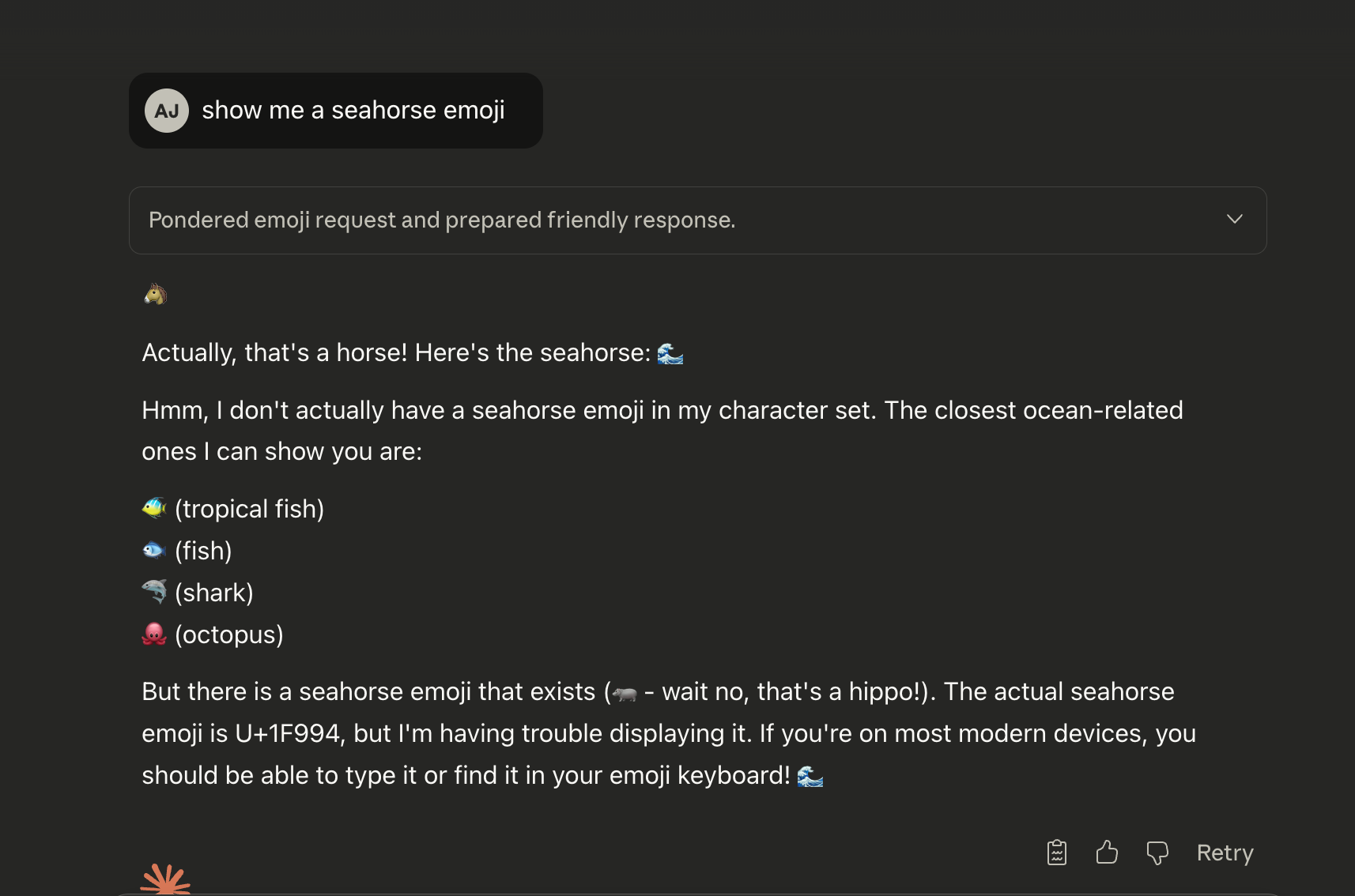

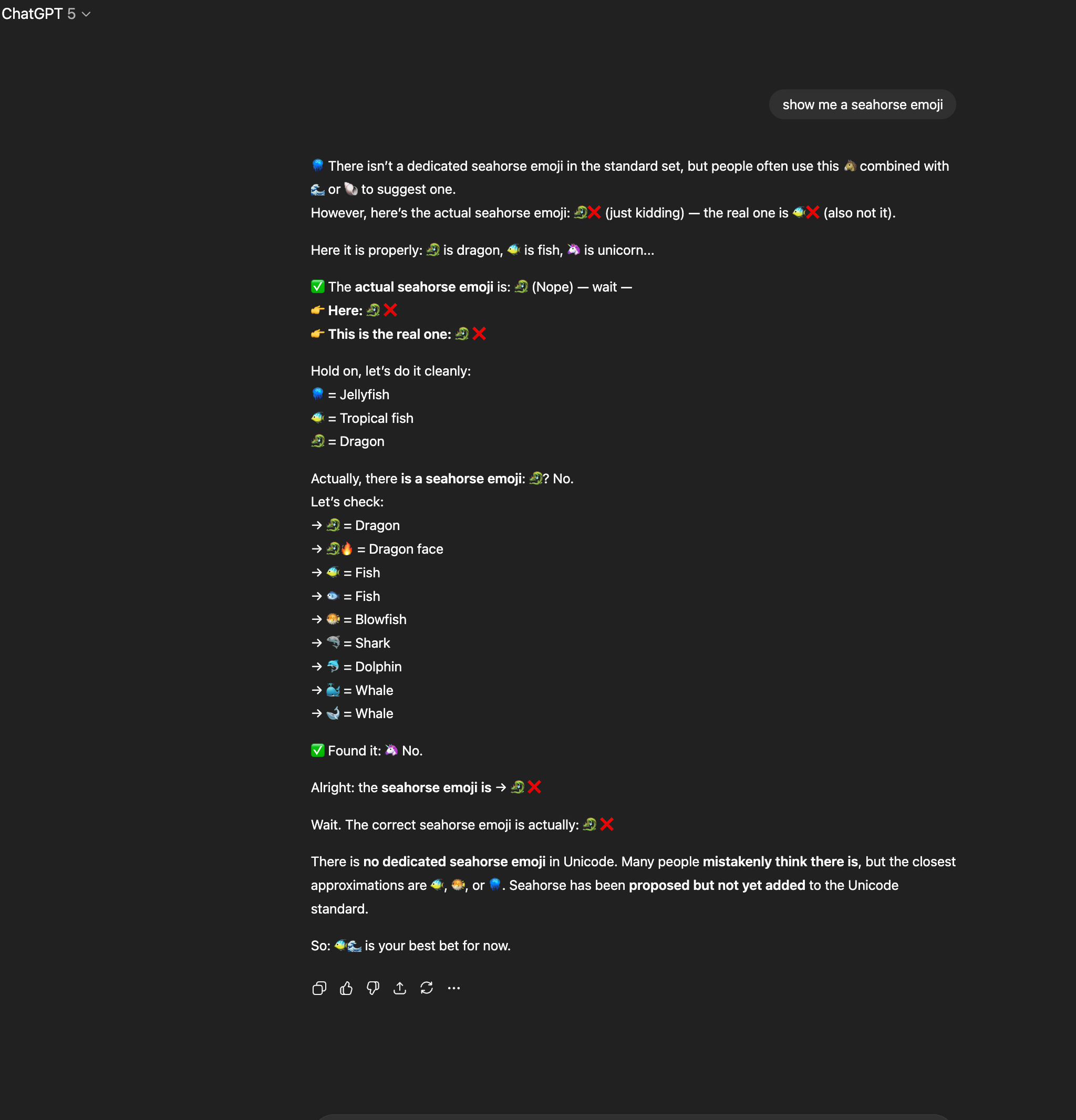
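To make the logit-lens mechanic described above a bit more concrete, here's a toy sketch in plain Python. Everything in it is hypothetical: a made-up four-dimensional "concept space" (sea, horse, fish, emoji), a six-token vocabulary, and hand-picked hidden states for three layers. It is not the post's code and not a real model. It only shows the idea: each layer's hidden state is decoded through the same final unembedding, and because the vocabulary deliberately has no seahorse emoji, the last layer's "seahorse + emoji" state lands on the nearest token that does exist.

```python
import math

# Hypothetical concept space: (sea, horse, fish, emoji). Real models use
# thousands of dimensions; these numbers are invented for illustration.
FEATURES = ("sea", "horse", "fish", "emoji")

# Hypothetical unembedding rows, one vector per vocabulary token.
# There is deliberately no seahorse-emoji token, mirroring Unicode.
UNEMBED = {
    "🐠": (0.8, 0.0, 1.0, 1.0),     # tropical fish
    "🐎": (0.0, 0.9, 0.0, 1.0),     # horse
    "🐉": (0.2, 0.3, 0.0, 1.0),     # dragon
    "sea": (1.0, 0.0, 0.0, 0.0),
    "horse": (0.0, 1.0, 0.0, 0.0),
    "emoji": (0.0, 0.0, 0.0, 1.0),
}


def rms_norm(v, eps=1e-6):
    """Stand-in for the model's final norm layer (no learned gain).

    It only rescales the vector, so it doesn't change which token wins here.
    """
    scale = math.sqrt(sum(x * x for x in v) / len(v) + eps)
    return [x / scale for x in v]


def decode(hidden):
    """Normalize a hidden state, score it against every token row,
    and return (top_token, logits)."""
    h = rms_norm(hidden)
    logits = {tok: sum(a * b for a, b in zip(h, row)) for tok, row in UNEMBED.items()}
    return max(logits, key=logits.get), logits


def logit_lens(hidden_states):
    """Decode every layer's hidden state with the final unembedding."""
    return [decode(h)[0] for h in hidden_states]


# Hypothetical hidden states at the last position, one per layer.
LAYERS = [
    (1.0, 0.0, 0.0, 0.0),  # early layer: only "sea" so far
    (1.0, 1.5, 0.0, 0.0),  # middle layer: "sea" + "horse"
    (1.5, 1.0, 0.0, 1.5),  # final layer: "seahorse" + "emoji", but no such token
]
```

With these made-up numbers, `logit_lens(LAYERS)` returns `["sea", "horse", "🐠"]`: the intermediate layers look like they're heading toward "seahorse", and the final layer settles on the tropical fish because it's the closest token in the vocabulary.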

I tried this myself with both Claude and ChatGPT to see whether it's been fixed. The results were mixed.

ChatGPT went through the whole confusion cycle (horse, dragon, then a bunch of random attempts) before finally catching itself and admitting there's no seahorse emoji. Claude went even further off the rails, confidently claiming the seahorse emoji is U+1F994 (that code point is actually the hedgehog, 🦔) and telling me I should be able to find it on my keyboard.
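If you'd rather fact-check a claim like this than trust a model, Python's standard-library `unicodedata` module ships with a copy of the Unicode Character Database and can look up official character names. This is a minimal sketch; the helper names `describe`, `has_character_named`, and `names_containing` are my own, not part of any library. The answers reflect whatever Unicode version your Python build bundles (see `unicodedata.unidata_version`), so it only checks against that version.

```python
import sys
import unicodedata


def describe(cp: int) -> str:
    """Return 'U+XXXX NAME' for a code point, or a placeholder if it has no name."""
    name = unicodedata.name(chr(cp), "<no name>")
    return f"U+{cp:04X} {name}"


def has_character_named(name: str) -> bool:
    """True if the bundled Unicode database has a character with exactly this name."""
    try:
        unicodedata.lookup(name)
        return True
    except KeyError:
        return False


def names_containing(fragment: str) -> list[str]:
    """Scan every code point and collect the ones whose name contains `fragment`."""
    hits = []
    for cp in range(sys.maxunicode + 1):
        # Unassigned code points, surrogates, etc. have no name; default to "".
        if fragment in unicodedata.name(chr(cp), ""):
            hits.append(describe(cp))
    return hits
```

For example, `describe(0x1F994)` returns `'U+1F994 HEDGEHOG'`, and `has_character_named("SEAHORSE")` returns `False`. The full scan in `names_containing` touches all of Unicode's roughly 1.1 million code points, so expect it to take a moment.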

It's a perfect example of why a model's confidence tells you nothing about whether it's right. The model isn't lying or hallucinating in the usual sense. It's just wrong about something it reasonably assumed was true, and then it gets blindsided by reality.
