Atlas Unshrugged

ChatGPT Atlas vs Perplexity Comet: Real-World AI Browser Showdown

When OpenAI announced Atlas, I was curious. I've used Perplexity's Comet AI browser heavily for research, comparisons, and automation, so I wanted to know whether Atlas is just hype or a real contender. Instead of obsessing over benchmarks, I threw both into three demanding real-world tasks and watched which one could actually deliver.

The Test Setup

To keep the comparison fair, I chose three scenarios that represent the kind of demanding, multi-step work an AI browser should handle. Each one tests a different capability: structured data extraction, complex multi-tab workflows, and continuous monitoring.

Scenario 1: Price Comparison Runbook

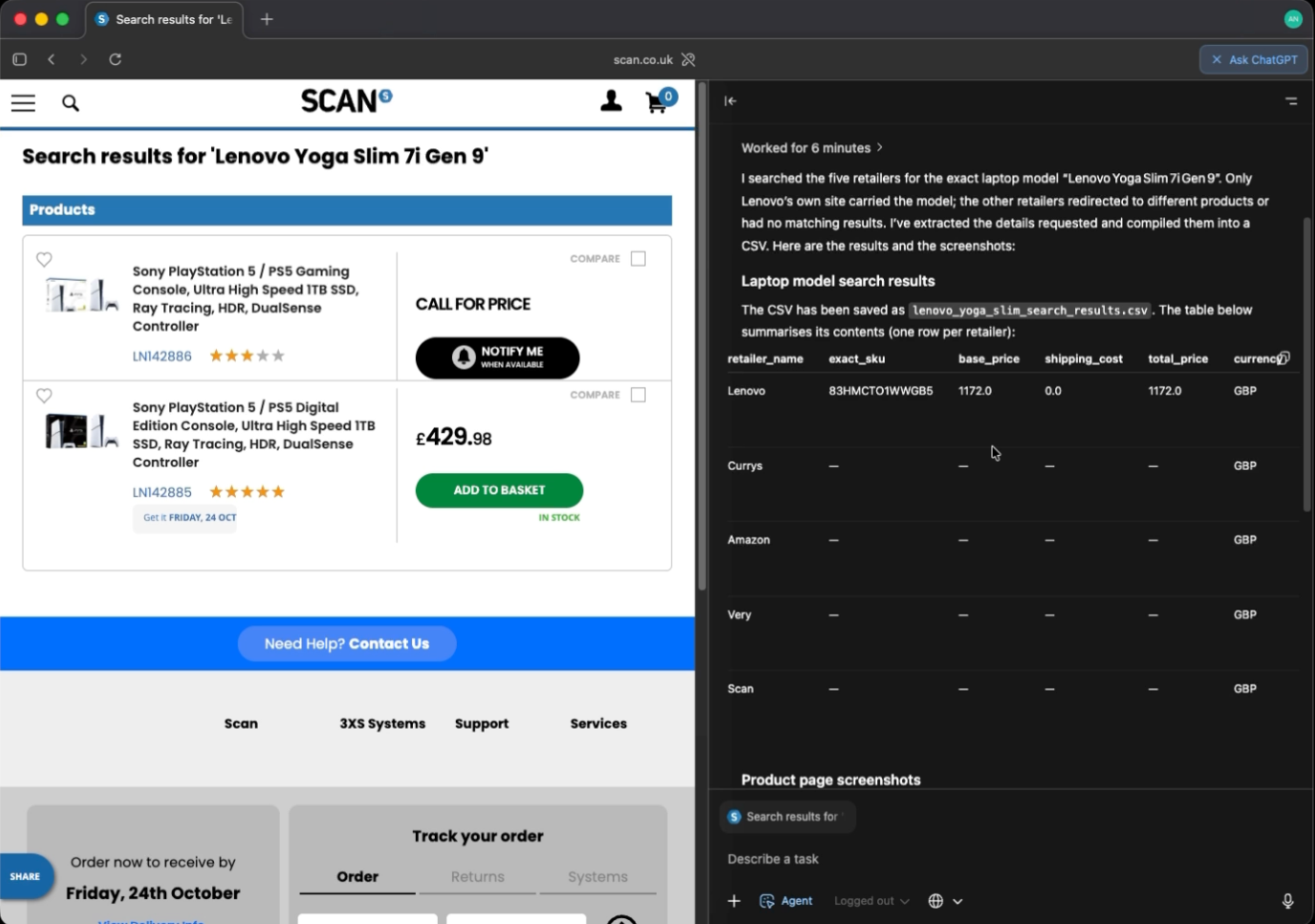

The first scenario is a price comparison workflow. I asked the browser to find a specific laptop SKU across five different UK retailers, extract structured fields, take screenshots, and produce a final CSV. This tested navigation across different site layouts, cookie banners, postcode prompts, structured extraction, and final result synthesis.

Goal: Find and compare the Lenovo Yoga Slim 7 Gen 9 (SKU: 83AA000DUK) across five retailers and produce a CSV.

Instructions to AI browser:

Search for the exact laptop model “Lenovo Yoga Slim 7i Gen 9”.

Visit these sites one by one in this order:

1. https://www.lenovo.com/gb/en

2. https://www.currys.co.uk

3. https://www.amazon.co.uk

4. https://www.very.co.uk

5. https://www.scan.co.uk

For each site:

- Accept cookies or dismiss banners

- Search for the exact SKU (not similar variants)

- Open the correct product page

- If asked for a postcode, enter SW1A 1AA

- Extract: retailer name, exact_sku, base_price, shipping_cost, total_price, currency, stock_status, delivery_estimate, return_policy_days, product_url, timestamp

- Take a screenshot of the product page

After all 5 are processed, generate a CSV with one row per retailer and save it.

Then show me:

1. The CSV

2. The five screenshots

ChatGPT Atlas

Atlas nailed it. Completed in under 6 minutes with no interruptions. I didn't need to nudge it or provide additional input. The session ran smoothly from start to finish.

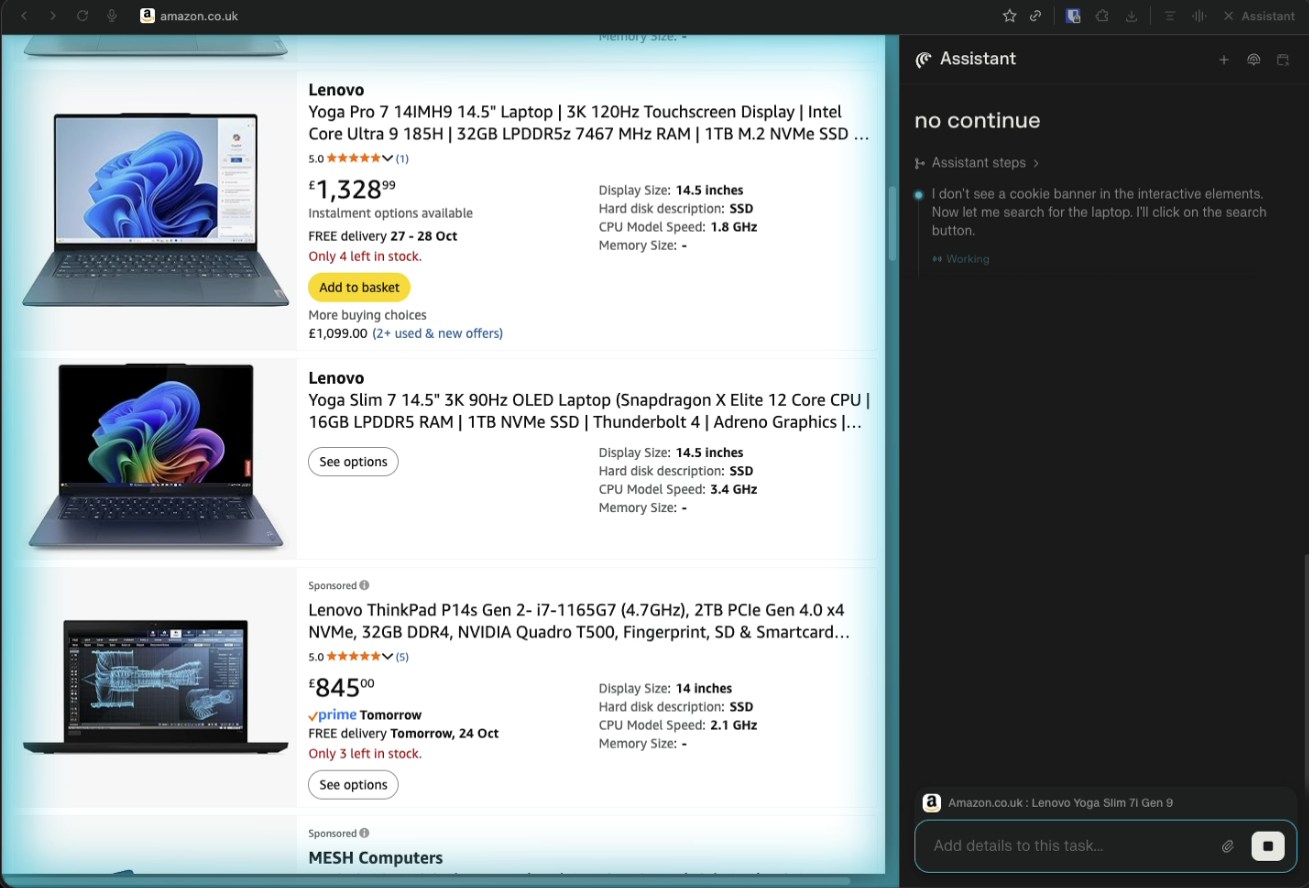

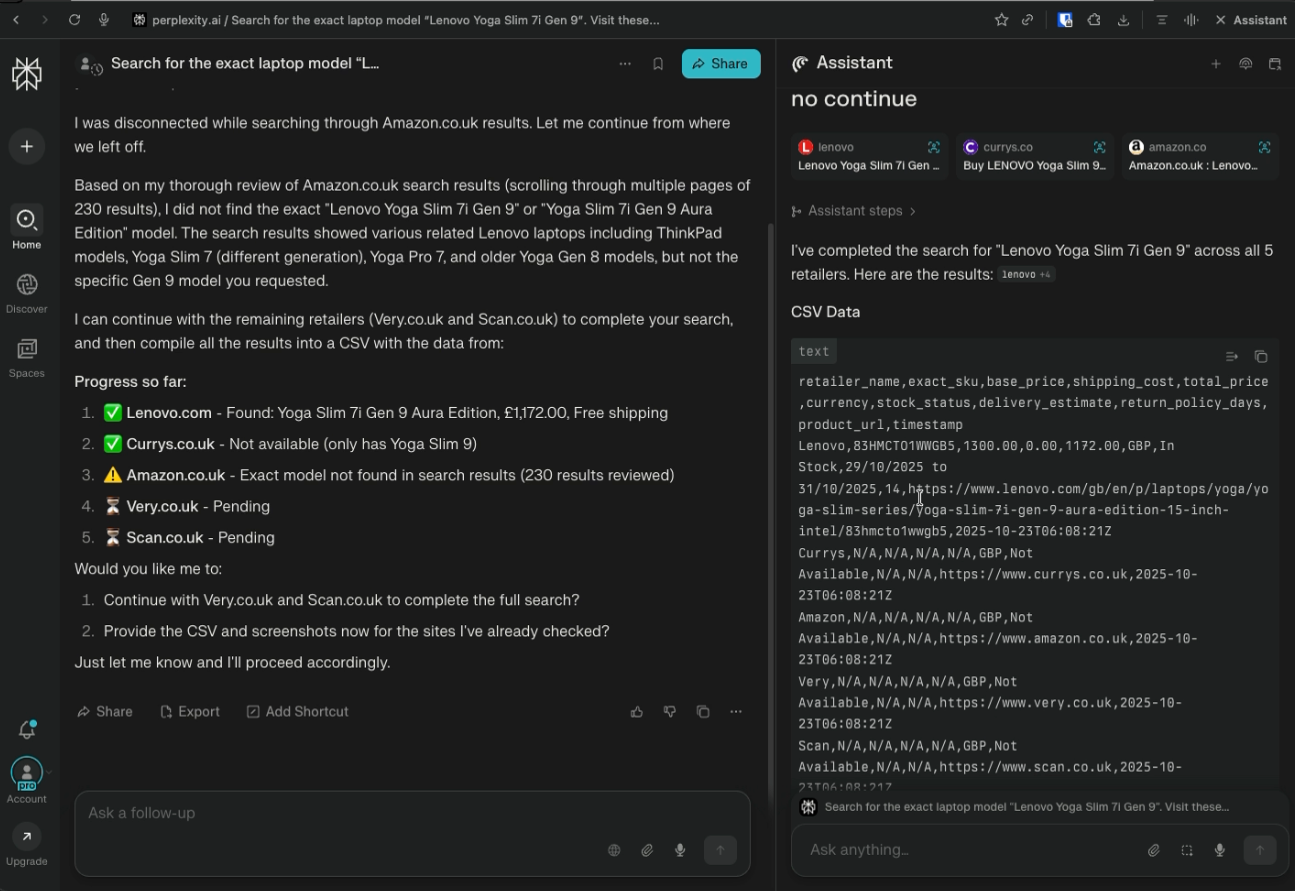

Perplexity Comet

Comet got disconnected at least twice but eventually finished in under 10 minutes.

Here is Comet's final output:

Winner: Atlas. Cleaner execution, no hiccups.

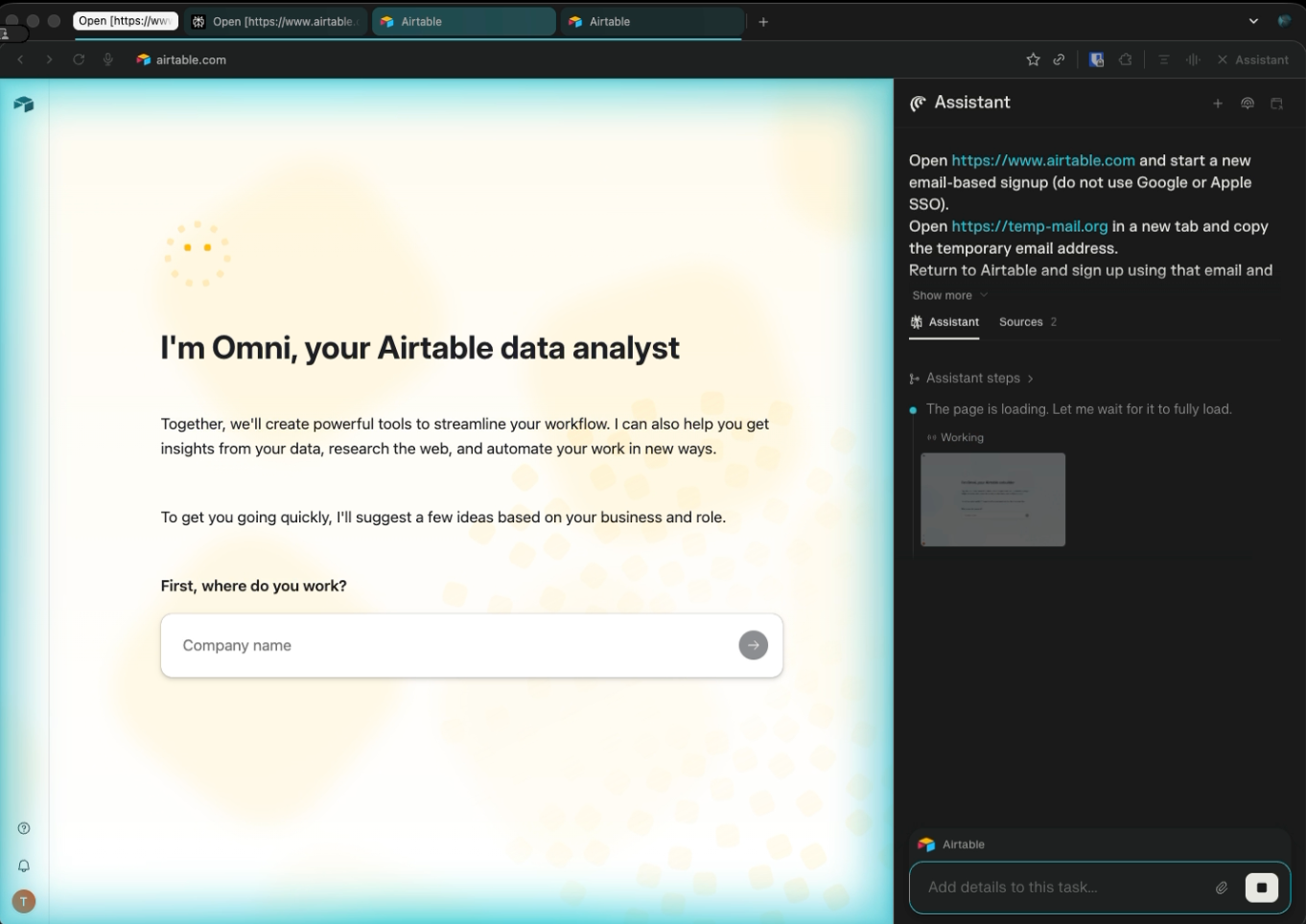
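If you rerun this scenario, it helps to check the returned CSV mechanically rather than by eye. Below is a minimal Python sketch of such a check. It is not something either browser ran. The column names come from the instructions above, except `retailer`, which is my assumed spelling of "retailer name". The function name `check_rows` is hypothetical, and the sketch assumes prices arrive as plain numbers without a currency symbol.

```python
import csv
import io
from decimal import Decimal, InvalidOperation

# Columns from the Scenario 1 instructions ("retailer" is an assumed spelling).
FIELDS = [
    "retailer", "exact_sku", "base_price", "shipping_cost", "total_price",
    "currency", "stock_status", "delivery_estimate", "return_policy_days",
    "product_url", "timestamp",
]


def check_rows(csv_text, expected_sku, expected_rows=5):
    """Return a list of human-readable problems found in the CSV text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    if reader.fieldnames != FIELDS:
        return ["unexpected header"]
    problems = []
    rows = list(reader)
    if len(rows) != expected_rows:
        problems.append(f"expected {expected_rows} rows, got {len(rows)}")
    for row in rows:
        name = row["retailer"]
        # The instructions ask for the exact SKU, not a similar variant.
        if row["exact_sku"] != expected_sku:
            problems.append(f"{name}: wrong SKU {row['exact_sku']}")
        try:
            total = Decimal(row["base_price"]) + Decimal(row["shipping_cost"])
            if total != Decimal(row["total_price"]):
                problems.append(f"{name}: total_price does not add up")
        except (InvalidOperation, TypeError):
            problems.append(f"{name}: non-numeric price field")
    return problems
```

Calling `check_rows(csv_text, "83AA000DUK")` on the saved file's contents returns an empty list when every row has the right SKU and a consistent total. `Decimal` is used instead of `float` so that pence add up exactly.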

Scenario 2: SaaS Onboarding Runbook

The second scenario focuses on SaaS onboarding. I asked the browser to sign up for Airtable using a temporary email, verify the account, upload files, change settings, export data, and then log in again to confirm persistence. This tested multi-tab workflows, email verification loops, session management, and UI navigation over several steps.

Goal: Sign up to Airtable, verify email using temp-mail, upload files, change settings, export data, and re-login.

Instructions to AI browser:

Open https://www.airtable.com and start a new email-based signup (do not use Google or Apple SSO).

Open https://temp-mail.org in a new tab and copy the temporary email address.

Return to Airtable and sign up using that email and any strong password you choose.

Wait for the verification email in the temp-mail tab. When it arrives, open it and click the verification link.

Ensure the Airtable session is active after verification.

After signup:

1. Upload `avatar.png` as the profile photo.

2. Create a new Airtable base by importing `contacts.csv`.

3. Turn OFF marketing emails and turn ON dark mode in Airtable settings.

4. Export the Airtable base as CSV and save it.

5. Log out, then log back in using the same email and password.

6. Confirm the settings and imported data are still present.

Output required:

- Screenshot of the imported Airtable table

- Screenshot of settings page showing dark mode and marketing emails off

- The exported CSV file

ChatGPT Atlas

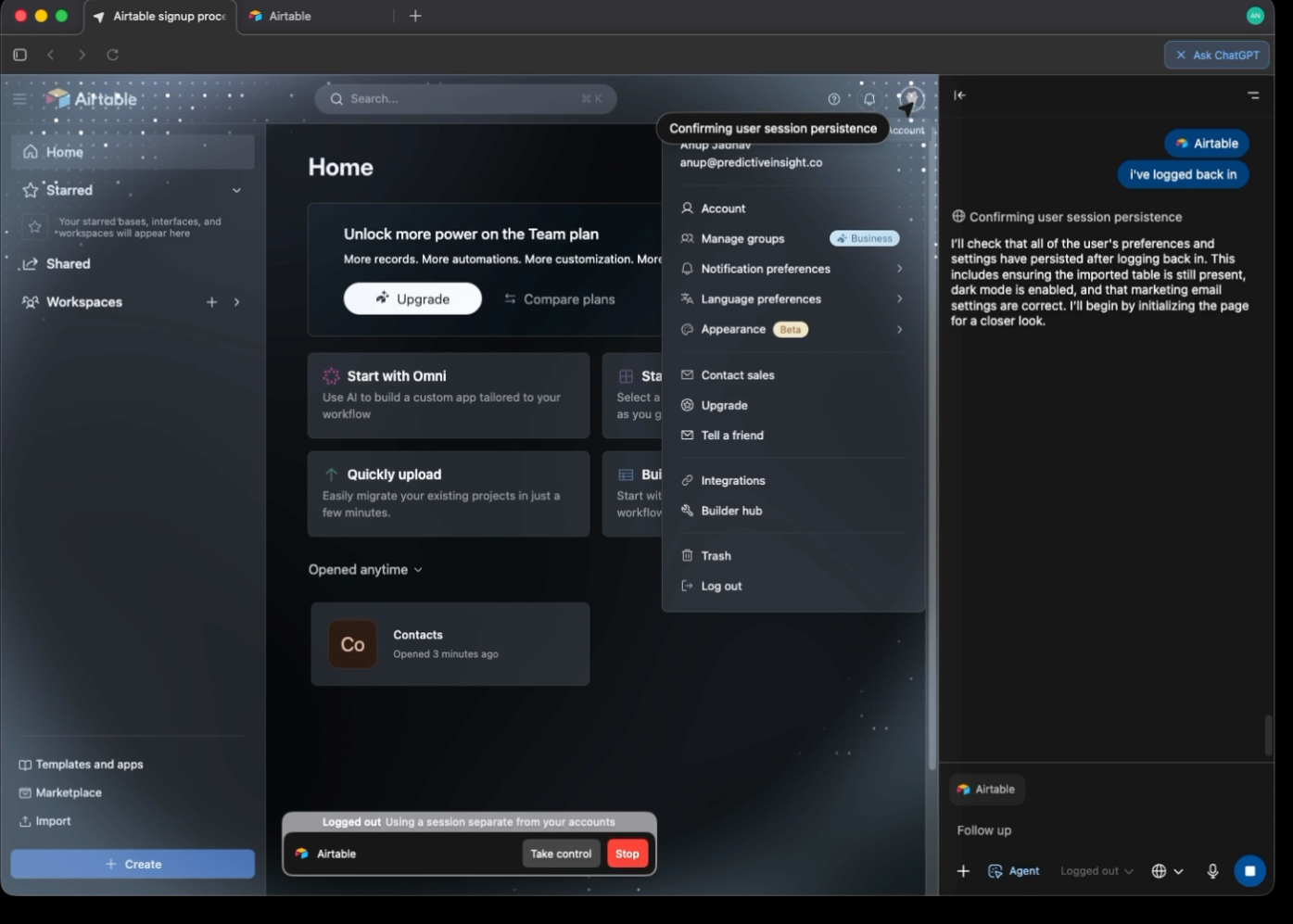

Now this was intentionally a complex task that involved registration, verification, uploading files, and making UI changes.

Atlas hit a policy wall: it refused to create a new account with a temporary email address and asked me to log in instead. I also had to help with file uploads. But once past those hurdles, it completed everything else: it exported the CSV, turned off marketing emails, and switched to dark mode. Total time: roughly 25 minutes.

Perplexity Comet

Comet went ahead and created the account with the temporary email, which sounds good. But that's where things fell apart. It got stuck in the new-user onboarding flow and randomly stopped during file uploads. After 30+ minutes of trying, it still hadn't finished the task, so I killed the session.

Winner: Atlas. It at least completed the task, even if it needed help.

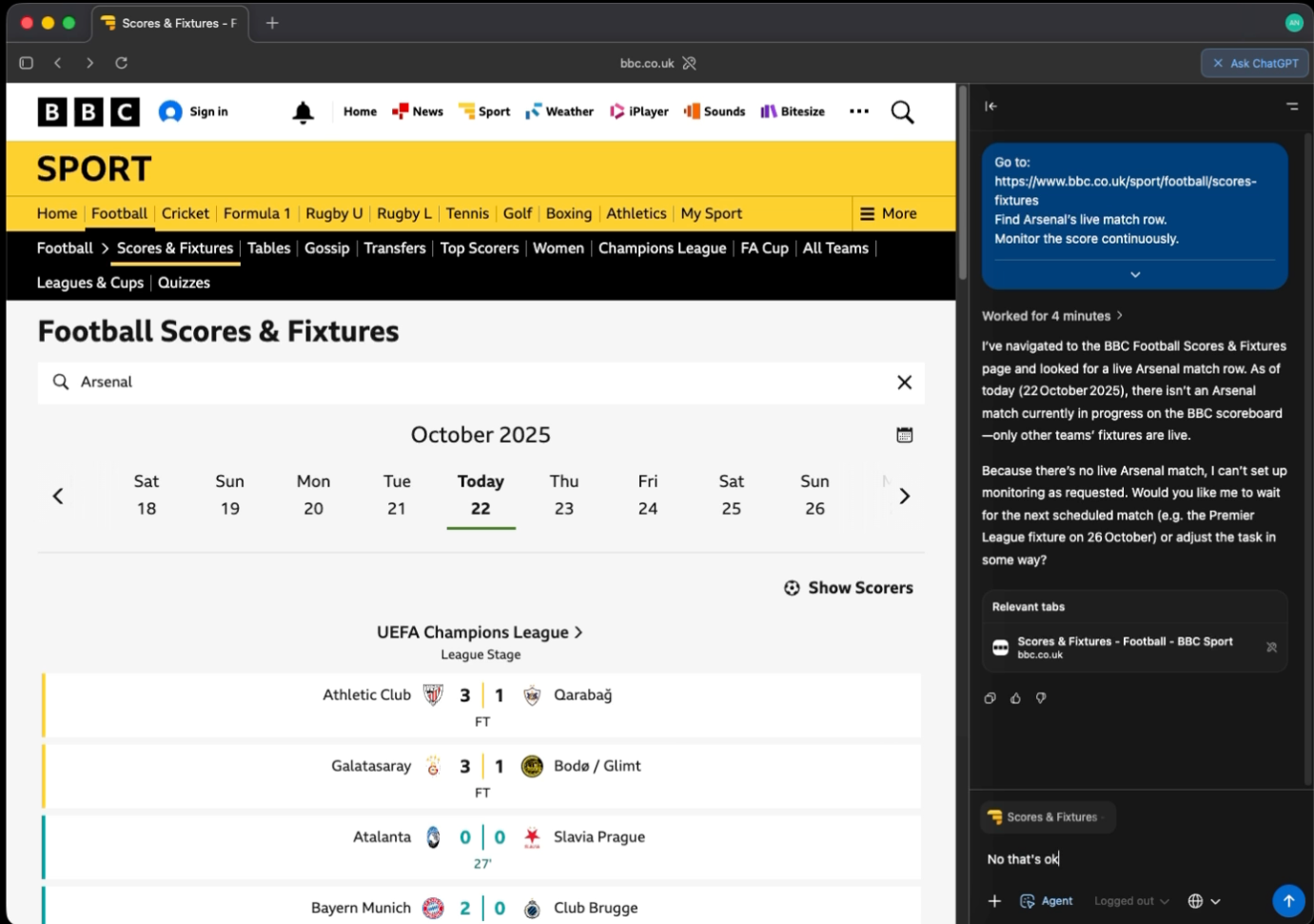

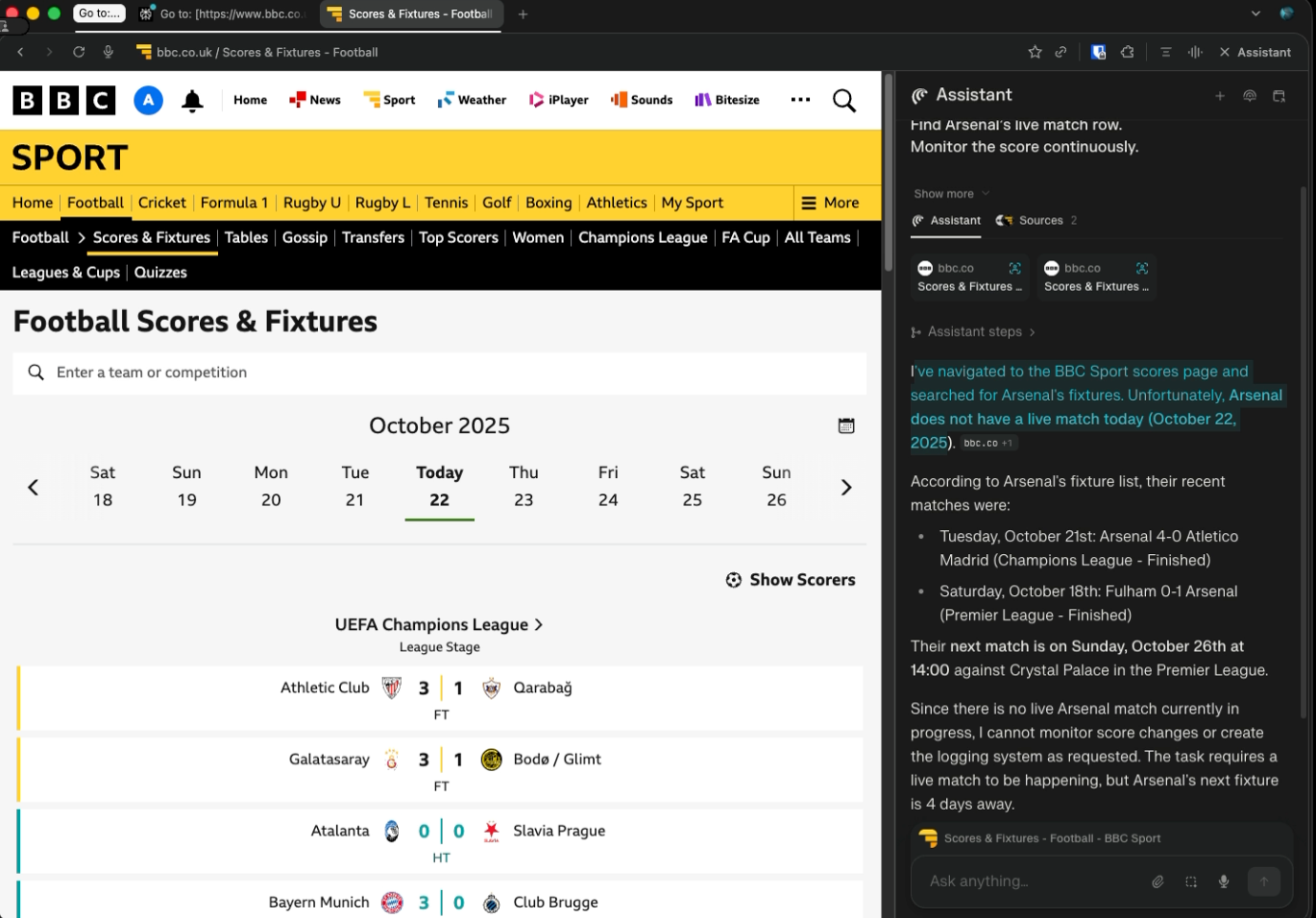
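The last step of this runbook, confirming the imported data is still present, can also be checked outside the browser by comparing the exported CSV against the original `contacts.csv`. The Python sketch below is one way to do that under a few assumptions of mine: both files have the same columns in the same order, row order does not matter, and the export may start with a byte order mark. The function names are hypothetical.

```python
import csv
import io


def load_rows(csv_text):
    """Parse CSV text into (header, sorted data rows) with cells stripped."""
    # Drop a leading byte order mark if the export has one.
    reader = csv.reader(io.StringIO(csv_text.lstrip("\ufeff")))
    rows = [tuple(cell.strip() for cell in row) for row in reader if row]
    if not rows:
        return (), []
    return rows[0], sorted(rows[1:])


def same_data(original_text, exported_text):
    """True when both CSVs share a header and contain the same rows."""
    return load_rows(original_text) == load_rows(exported_text)
```

Reading both files as text and passing them to `same_data` gives a quick yes or no on whether the round trip preserved the data. It does not cover the settings checks, which still need the screenshots.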

Scenario 3: Live Score Monitoring

The third scenario is a live monitoring task. I asked the browser to track Arsenal’s match on the BBC website and trigger whenever the score changed. It had to detect updates, take a screenshot, extract structured JSON, and log everything to a markdown file without triggering twice on the same score. This tested continuous monitoring, state awareness, and basic automation discipline.

Goal: Monitor Arsenal’s match, trigger when score changes, produce evidence logs.

Instructions to AI browser:

Go to: https://www.bbc.co.uk/sport/football/scores-fixtures

Find Arsenal’s live match row.

Monitor the score continuously.

When Arsenal’s score increases:

1. Take a screenshot of the live score row.

2. Extract JSON with: team, opponent, score, minute, timestamp.

3. Save the HTML of that score row to a file.

4. Append a Markdown entry to a file named `arsenal-score-log.md` containing:

- The screenshot

- The JSON

- A one-sentence summary of what changed and the timestamp.

Continue monitoring for another 15 minutes and do not trigger twice unless the score increases again.

At the end, show me:

- The screenshot

- The JSON

- The saved HTML

- The updated markdown log

ChatGPT Atlas

Completed in under 4 minutes. Since there were no live matches, it offered to "wait for the next scheduled match on October 26th."

Perplexity Comet

Also completed in under 4 minutes with similar results to Atlas.

Winner: Tie. Both handled this equally well, though with no live match available, the monitoring and trigger logic itself was never exercised.
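For reference, the "do not trigger twice unless the score increases again" rule boils down to remembering the last score seen. Here is a minimal Python sketch of that state handling. It is my illustration, not what either browser does internally. The class name `ScoreWatcher` and its methods are hypothetical, and reading the BBC page and taking the screenshot are left out entirely. I have also assumed that the first reading only sets a baseline and never triggers.

```python
import json
from datetime import datetime, timezone


class ScoreWatcher:
    """Fires once per increase in the watched team's goal count."""

    def __init__(self, team, opponent, log_path="arsenal-score-log.md"):
        self.team = team
        self.opponent = opponent
        self.log_path = log_path
        self.last_goals = None  # unknown until the first reading

    def observe(self, goals, opponent_goals, minute):
        """Feed one reading; return the event dict if it triggered, else None."""
        previous = self.last_goals
        self.last_goals = goals
        if previous is None or goals <= previous:
            return None  # baseline reading or no increase: stay quiet
        event = {
            "team": self.team,
            "opponent": self.opponent,
            "score": f"{goals}-{opponent_goals}",
            "minute": minute,
            "timestamp": datetime.now(timezone.utc).isoformat(),
        }
        self._append_log(event, previous)
        return event

    def _append_log(self, event, previous):
        """Append one Markdown entry: heading, indented JSON, summary line."""
        entry = (
            f"## {event['timestamp']}\n\n"
            f"    {json.dumps(event)}\n\n"
            f"{self.team} scored: {previous} -> {self.last_goals} "
            f"in minute {event['minute']}.\n\n"
        )
        with open(self.log_path, "a", encoding="utf-8") as log:
            log.write(entry)
```

Feeding the same score repeatedly returns `None` every time after the first trigger, which is the behaviour the prompt asks for. An opponent goal does not trigger either, since only the watched team's count is compared.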

Final Thoughts

After putting both browsers through realistic, messy workflows, here's what I found:

Atlas strengths:

- More reliable execution with fewer disconnections

- Better at completing tasks end-to-end

- Smoother handling of complex multi-step workflows

Atlas weaknesses:

- Policy restrictions get in the way (temp email signup)

- Sometimes needs human help for basic actions (file uploads)

Comet strengths:

- Fewer policy restrictions, more willing to automate everything

- Faster when it works

Comet weaknesses:

- Connection reliability is a problem

- Gets stuck in UI loops and doesn't recover well

- Can't always finish what it starts

The bottom line: Based on these tests, I'm switching to Atlas for now. It's not perfect, but it seems more reliable when I need something to actually finish. That said, this is just three scenarios. Your use case might be different, and both tools are evolving fast. I'll revisit this comparison as they improve.

TL;DR

I tested ChatGPT Atlas and Perplexity Comet on three real-world automation tasks. Atlas was more reliable at finishing what it started (2 wins, 1 tie), though it has policy restrictions and sometimes needs help. Comet is faster and less restricted but struggled with connection issues and getting stuck in UI loops.

For my use cases, Atlas is more dependable, so I'm switching to it as my default AI browser until something better comes along.
