Eliza Redux: A Real-Time Voice AI Crisis Support Agent

I built a crisis support voice AI agent in roughly 90 minutes at a voice AI hackathon and won. Here's how I did it, and the tactics that made it possible.

The Challenge

Crisis hotlines have four main problems: limited 24/7 staffing, wait times during peak hours, geographic and language constraints, and scalability limits (one counselor per call).

Voice AI can fill these gaps. At a recent hackathon, I built Eliza* Redux: a real-time voice agent that provides crisis support through natural conversation, grounding techniques, safety assessments, and emergency service location.

The system went from concept to deployment in about 90 minutes using Layercode's voice infrastructure, Twilio's telephony API, and Cloudflare Workers.

What It Does

The agent has five specialized tools it can trigger based on what the user says:

- 5-4-3-2-1 Grounding Technique: For panic, fear, or dissociation

- Breathing Exercises: Reduces anxiety through guided breathing

- Safety Assessment: Evaluates self-harm or suicidal intent

- Emergency Service Location: Uses OpenStreetMap to find nearby help

- Turn-by-Turn Directions: Provides navigation without overwhelming the user
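As a rough illustration of how five tools like these could be declared for GPT-4 function calling, here is a minimal sketch. The tool names, descriptions, and parameters are hypothetical (the post does not list them); only the outer `{ type: "function", function: { ... } }` shape follows OpenAI's tool format.

```typescript
// Shape of one tool entry in OpenAI's function-calling format.
type ToolDefinition = {
  type: "function";
  function: {
    name: string;
    description: string;
    parameters: { type: "object"; properties: Record<string, unknown>; required: string[] };
  };
};

// Small helper so each tool reads as one line of intent.
function defineTool(
  name: string,
  description: string,
  properties: Record<string, unknown> = {},
  required: string[] = [],
): ToolDefinition {
  return {
    type: "function",
    function: { name, description, parameters: { type: "object", properties, required } },
  };
}

// Hypothetical names and parameters, chosen for illustration only.
const tools: ToolDefinition[] = [
  defineTool("start_grounding_exercise", "Guide the caller through the 5-4-3-2-1 grounding technique."),
  defineTool("start_breathing_exercise", "Guide the caller through a slow breathing exercise."),
  defineTool("run_safety_assessment", "Ask about self-harm or suicidal intent and decide whether to escalate."),
  defineTool(
    "find_emergency_services",
    "Find nearby emergency help using OpenStreetMap.",
    { location: { type: "string", description: "Where the caller says they are" } },
    ["location"],
  ),
  defineTool(
    "get_directions",
    "Give the next one or two steps toward a destination.",
    { destination: { type: "string", description: "Name or address of the place to reach" } },
    ["destination"],
  ),
];
```

Keeping the descriptions short and action-oriented matters here, because the model relies on them to decide which tool fits what the caller just said.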

Voice-Optimized Responses

The system prompt follows these principles:

- Short sentences (one idea per response)

- Natural pacing with pauses

- Conversational tone, not mechanical

- Acknowledge feelings before offering solutions

- Use plain language, avoid clinical terminology

- Prioritize safety and escalate when needed

Demo

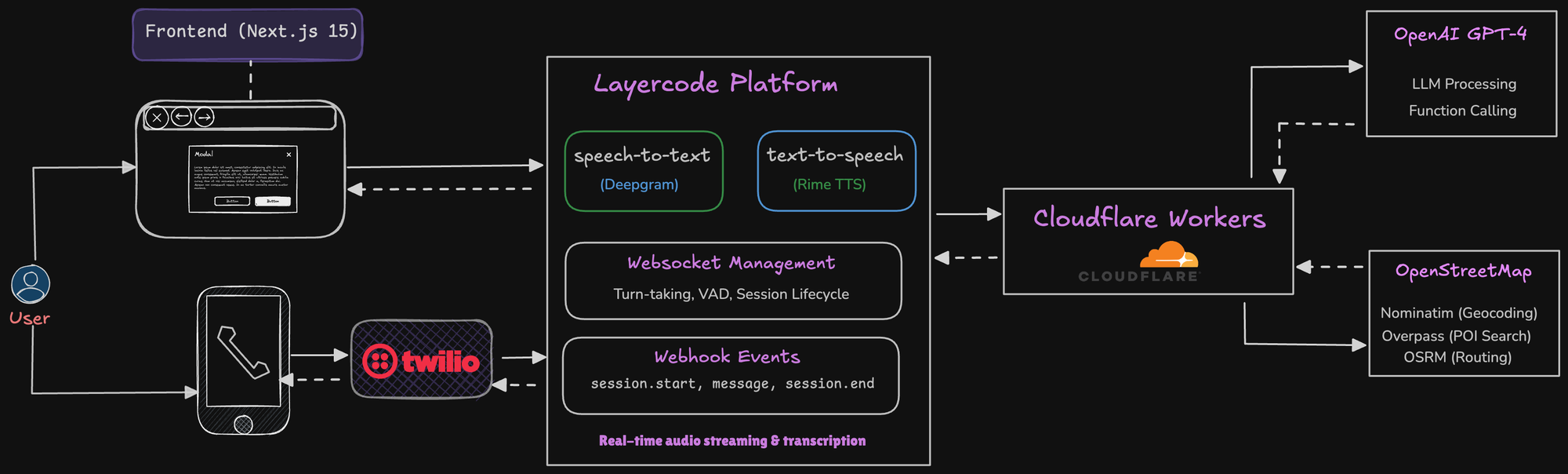

Tech Stack

I needed this to work fast, so I picked tools I already knew.

- Frontend: Next.js 15, React 19, Layercode React SDK

- Voice Processing: Layercode handles the heavy lifting here. They abstract away Deepgram for speech-to-text and Rime for text-to-speech. This means I didn't need to wire up multiple APIs myself.

- Telephony: Twilio connects everything to the phone system

- AI: OpenAI GPT-4 with function calling

- Maps: OpenStreetMap (Nominatim for geocoding, Overpass for location search, OSRM for routing)

- Deployment: Cloudflare Workers

The entire stack choice came down to speed. I'd used Twilio and Cloudflare before. Familiarity meant less time reading docs and more time building.
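For the maps part of the stack, a location search against Overpass boils down to sending an Overpass QL query. The sketch below builds one for hospitals near a coordinate. The function name, the 5 km default radius, and the result limit are assumptions for illustration, not values from the project.

```typescript
// Builds an Overpass QL query for hospitals within a radius of a point.
// The function name, default radius, and limit are illustrative assumptions.
function buildOverpassQuery(lat: number, lon: number, radiusMeters = 5000, limit = 5): string {
  const around = `(around:${radiusMeters},${lat},${lon})`;
  return [
    "[out:json][timeout:10];", // JSON output, server-side timeout in seconds
    "(",
    `  node["amenity"="hospital"]${around};`,
    `  way["amenity"="hospital"]${around};`,
    ");",
    `out center ${limit};`, // "center" gives ways a single lat/lon; limit caps the result count
  ].join("\n");
}

const exampleQuery = buildOverpassQuery(51.5074, -0.1278);
```

The resulting string is sent as the `data` form field in a POST request to an Overpass endpoint such as `https://overpass-api.de/api/interpreter`.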

System Architecture

The architecture looks complex, but Layercode abstracts away most of it. Let me break down how a conversation actually moves through the system:

The Flow:

- User speaks (phone or browser)

- Layercode captures audio and transcribes it

- Webhook sends transcription to /api/agent

- Backend calls GPT-4 with conversation context

- GPT-4 responds (potentially calling tools)

- Backend streams text response back to Layercode

- Layercode converts text to speech

- User hears the response

Response time from speech end to AI speech start: ~800ms.
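The backend half of that flow can be sketched without any vendor code. In the sketch below, `handleTurn`, `StreamReply`, and `sendToVoice` are hypothetical names: `StreamReply` stands in for the streaming GPT-4 call and `sendToVoice` for whatever hands text back to Layercode. Neither reflects the real SDK signatures, which the post does not show.

```typescript
type Message = { role: "system" | "user" | "assistant"; content: string };

// Hypothetical stand-in for the streaming model call: yields text chunks as they arrive.
type StreamReply = (history: Message[]) => AsyncIterable<string>;

// Handles one conversational turn: append the transcript, stream the reply out
// chunk by chunk, and return the updated history for the next turn.
async function handleTurn(
  transcript: string,
  history: Message[],
  streamReply: StreamReply,
  sendToVoice: (chunk: string) => void,
): Promise<Message[]> {
  const withUser: Message[] = [...history, { role: "user", content: transcript }];
  let reply = "";
  for await (const chunk of streamReply(withUser)) {
    sendToVoice(chunk); // forward immediately so speech synthesis can start early
    reply += chunk;
  }
  return [...withUser, { role: "assistant", content: reply }];
}
```

Forwarding each chunk as it arrives, rather than waiting for the full reply, is one plausible way to keep the gap between the user finishing and the agent starting to speak short.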

What Broke (And How I Fixed It)

Voice Needs Different Design Patterns

Initial responses were written like chat messages. Long paragraphs work in text but feel robotic in voice.

Solution: Restructured all responses to use short sentences with one idea each. Added natural pauses.
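The fix described above lives mostly in the prompt. A small post-processing guard can back it up when the model still produces a paragraph. This is a sketch under that assumption, not the project's code: `toVoiceSentences` and the two-sentence cap are illustrative.

```typescript
// Splits a model reply into sentences and keeps only the first few,
// so a chat-style paragraph never reaches text-to-speech in one go.
// The function name and default cap are illustrative assumptions.
function toVoiceSentences(text: string, maxSentences = 2): string[] {
  const sentences = text
    .replace(/\s+/g, " ") // collapse newlines and repeated spaces
    .trim()
    .split(/(?<=[.!?])\s+/) // break after sentence-ending punctuation
    .filter((s) => s.length > 0);
  return sentences.slice(0, maxSentences);
}

const spokenNow = toVoiceSentences("I hear you. That sounds really hard.\nLet's take a breath together.");
// spokenNow is ["I hear you.", "That sounds really hard."]
```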

Tool Overload

When providing directions, the agent would read all 12 steps at once.

Solution: Tools now return one or two steps at a time. The agent asks before continuing.
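A minimal sketch of that paging behavior, assuming the route has already been fetched as a list of instruction strings. The names `nextDirections` and `StepPage` are hypothetical.

```typescript
type StepPage = {
  steps: string[]; // the one or two steps to read out now
  nextCursor: number | null; // where to resume, or null when the route is finished
  remaining: number; // how many steps are still unread
};

// Returns a small slice of the route instead of the whole list.
function nextDirections(allSteps: string[], cursor = 0, pageSize = 2): StepPage {
  const start = Math.min(Math.max(cursor, 0), allSteps.length); // clamp bad cursors
  const steps = allSteps.slice(start, start + pageSize);
  const end = start + steps.length;
  const remaining = allSteps.length - end;
  return { steps, nextCursor: remaining > 0 ? end : null, remaining };
}
```

The tool result carries `nextCursor` back to the model, so when the caller says they are ready, the next call picks up where the last one stopped.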

Handling Interruptions

Users need to be able to interrupt the AI during crisis situations.

Solution: Implemented immediate stop on user speech in the Layercode platform. The system tracks conversation state and can resume context if needed.

API Reliability

OpenStreetMap APIs (Overpass, OSRM) occasionally time out.

Solution: Added fallback responses. In emergencies, default to recommending 999 or the nearest hospital.
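One way to express that fallback is a wrapper that races the API call against a timer and returns a safe default on either a timeout or an error. This is a sketch, not the project's code: `withFallback`, `EMERGENCY_FALLBACK`, and the fallback wording are assumptions.

```typescript
// Hypothetical fallback wording, based on the 999 / nearest hospital default.
const EMERGENCY_FALLBACK =
  "I can't look that up right now. If you are in danger, call 999 or go to the nearest hospital.";

// Runs a task, but resolves with `fallback` if it throws or takes longer than `timeoutMs`.
async function withFallback<T>(task: () => Promise<T>, fallback: T, timeoutMs: number): Promise<T> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<T>((resolve) => {
    timer = setTimeout(() => resolve(fallback), timeoutMs);
  });
  try {
    return await Promise.race([task(), timeout]);
  } catch {
    return fallback; // network error, bad status, parse failure, and so on
  } finally {
    if (timer !== undefined) clearTimeout(timer); // avoid leaving a stray timer behind
  }
}
```

A location tool would wrap its Overpass or OSRM request in `withFallback`, so the caller always hears something useful within a bounded time.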

Three Things I Learned

After shipping this in 90 minutes, three lessons stood out:

- Voice requires different design patterns than text. Short responses, natural pauses, and conversational flow are essential. What works in chat doesn't work in speech.

- Tool calling needs careful UX design. Don't overwhelm users with information. Break complex tasks into steps. One direction at a time beats twelve at once.

- Design for graceful failure. APIs will fail. Deployments will break. Have fallbacks that still help the user. In a crisis situation, a partial solution is better than a crashed system.

How to Ship Fast at Hackathons

These are the tactics that let me go from zero to demo in 90 minutes:

- Know your tools before you arrive. I had an advantage because I'd used Twilio and Cloudflare before. Familiarity means less time reading docs and more time building. Pick a stack and get comfortable with it before the event. Read the sponsor APIs in advance.

- Show up with ideas. Never walk in empty-handed. I had three concepts ready and picked the crisis chatbot because I'd talked to nonprofits about this problem before. Having options means you can adapt to what's realistic in the time you have.

- Get good with AI coding tools. I've built 20+ apps with Claude Code. That experience taught me what works and what causes expensive mistakes. Pick one AI tool and learn its patterns. The time you save compounds fast. A 90-minute build becomes possible when you're not fighting with your tools.

- Scope ruthlessly. Cut everything that doesn't directly prove your concept. I skipped user auth, databases, and analytics. Focus on the core demo. You can always add features later.

- Build for the demo, not production. Hardcode values. Skip error handling for edge cases. Use mock data if APIs are slow. The goal is a working demo, not a finished product. You can clean it up after you win.

- Practice your pitch. A great demo beats a great product. Spend the last 10-15 minutes practicing what you'll say. Know your opening line, your key points, and your closer. Technical judges want to see it work. Business judges want to understand the impact.

- Have a fallback plan. APIs fail. Deployments break. Have a video recording of your demo working. Seriously. I've seen too many great projects crash during live demos.

Why This Matters

Crisis support can't wait for perfect technology. People need help now. Voice AI isn't a replacement for trained counselors, but it can bridge gaps in access and availability.

The technology exists today to build supportive systems that work at scale. The hard part isn't the AI; it's designing the experience to actually help people in their worst moments. That means thinking through interruptions, failures, and how information gets delivered when someone is panicking.

This hackathon project proved the concept works. It's a demo, not a product, but it shows what's possible with today's tools.

*The OG Eliza

Eliza was one of the first conversational computer programs in history, created in 1966 by Joseph Weizenbaum at MIT. It became famous for its DOCTOR script, which mimicked a Rogerian psychotherapist. Rather than giving advice, it reflected the user’s statements back at them, encouraging them to keep talking, which is exactly how that therapeutic style works in the real world.

Weizenbaum wrote it as an experiment to show how superficial pattern-matching could simulate conversation. Six decades later, the technology is different but the need for supportive conversation remains.

Resources

UK Crisis Support:

- Samaritans: 116 123

- Shout: Text "SHOUT" to 85258

- Emergency: 999
