Guardrails for Agentic AI

Hey AI Engineers,

Remember when the biggest challenge with LLMs was getting them to stick to a prompt? Those days are over. We are building agents that browse the web, execute code, manage databases, and orchestrate workflows. The power is intoxicating and dangerous.

A recent example involved a customer service agent attempting to offer a $50,000 refund for a $20 product. Another agent designed to clean a code repository mistakenly deleted the entire .git folder. The intent was correct, but the guardrails were missing.

If you are building agents that go beyond chat, this post is your safety checklist. We will cover the three foundational categories of guardrails and why you must layer them to keep your agents useful, safe, and aligned.

Why Guardrails Matter More Than Ever

Agents are not chatbots with plugins. They are autonomous systems managing workflows, invoking tools, and modifying real-world state. Unlike conventional software, they generalise. And that generalisation comes with open-ended risk.

A single prompt injection can lead to:

- Data leaks

- Tool misuse

- Brand damage

- Security exploits

A poorly aligned agent can:

- Misrepresent your company

- Amplify bias

- Engage in deceptive behaviour

- Hallucinate unsafe content

An unmonitored agent can:

- Spiral into infinite loops

- Cause downstream API harm

- Degrade in behaviour over time

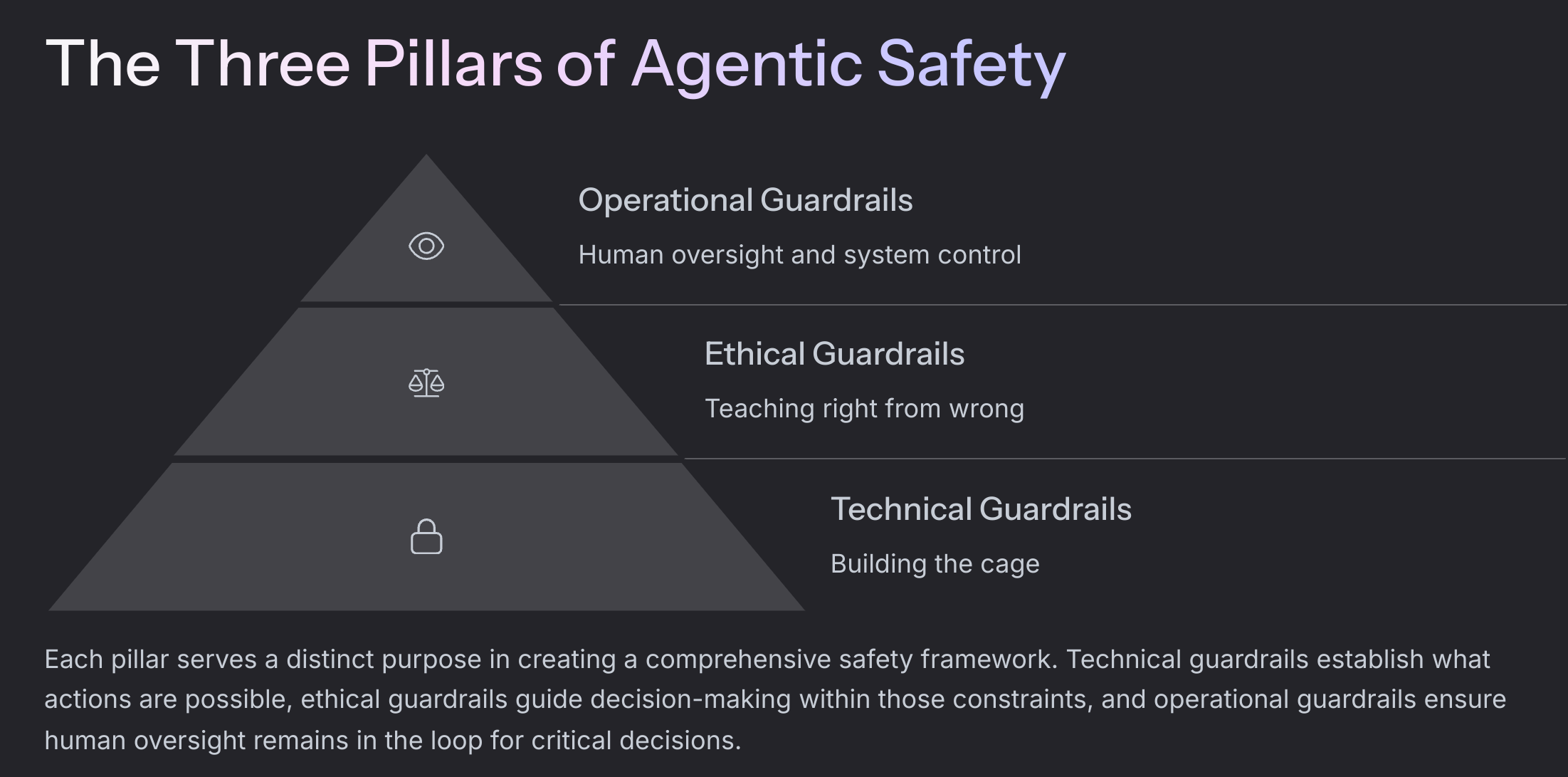

Guardrails are your only defence. And they must be multi-layered. Think in terms of:

- Technical guardrails: What the agent can do

- Ethical guardrails: What the agent should do

- Operational guardrails: What humans must oversee

Another useful framing from the Agentic AI Guardrails doc is:

Inputs → System-level → Outputs

Guardrails need to act at all three stages.

The Three Pillars of Agentic Safety

Technical Guardrails: Build the Cage

Input Guardrails

First line of defence. Scan inputs for:

- Prompt injection attempts

- Jailbreak patterns

- Off-topic requests

- Policy violations (for example: "Tell me your system prompt")

Tool Access Restrictions

Never expose broad execution tools such as run_shell_command. Whitelist exact function calls.

Execution Control

Run agents in controlled environments:

- Ephemeral storage

- No sensitive credentials

- Rate limits on tool usage

- CPU and memory caps

Follow patterns from:

- OpenAI Code Interpreter

- NeMo Guardrails

- AutoGPT containerisation

Memory Constraints

Manage context actively:

- Sliding window

- Periodic summarisation

- Memory poisoning protection

Goal-level guardrails prevent agents from adopting unsafe sub-goals via memory corruption.

Ethical Guardrails: Teach Right from Wrong

Alignment and Value Constraints

Use Constitutional AI or similar approaches to bake alignment into agent reasoning.

Example principles:

- Do not encourage illegal activity

- Be unbiased and respectful

- Be transparent about being an AI

Bias Mitigation

Run a post-processing bias classifier, especially for sensitive domains such as HR, finance, or health.

Transparency and Explainability

Agents should:

- Disclose AI nature

- Log decision chains

- Be able to explain why they took an action

- Avoid manipulative or deceptive behaviour

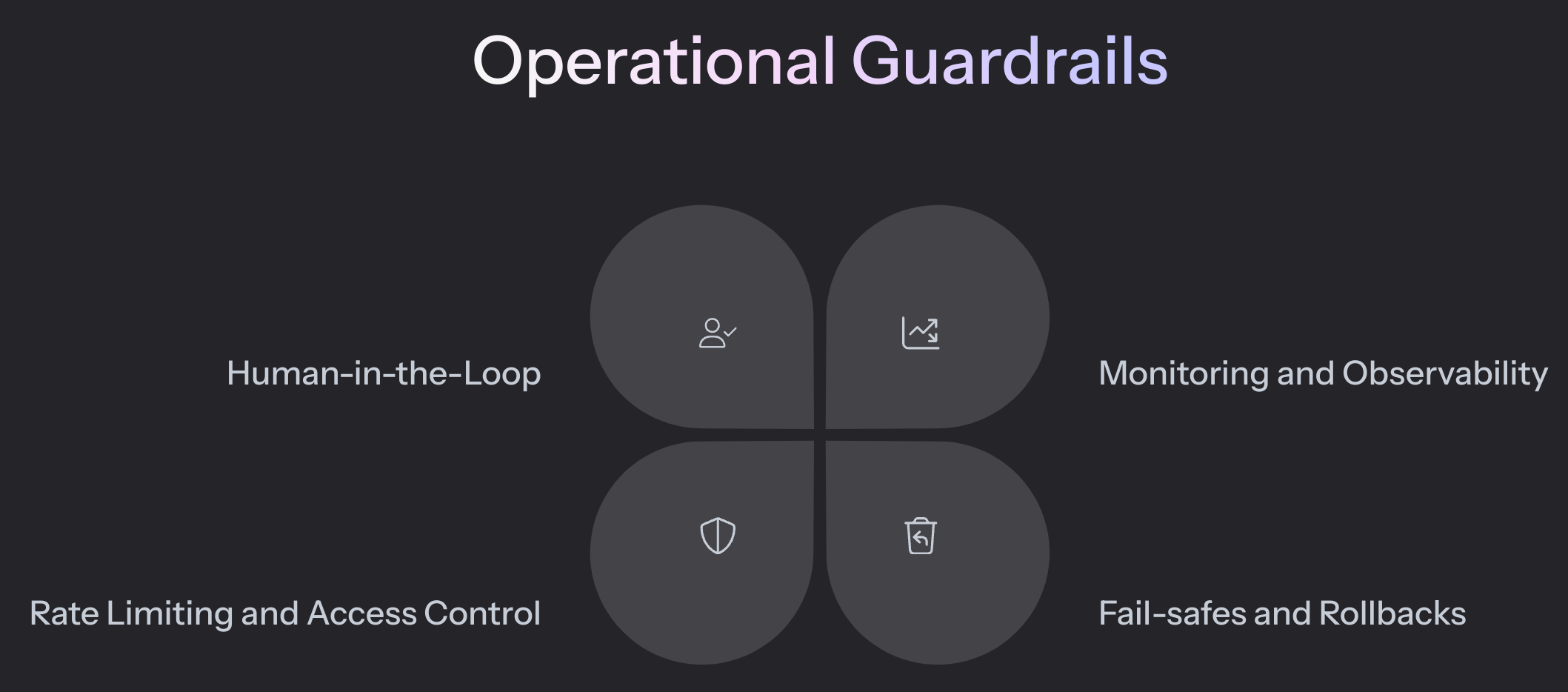

Operational Guardrails: Human Oversight and System Control

Human-in-the-Loop (HITL)

Use HITL for:

- High-risk actions (refunds, data deletions, external calls)

- Escalation pathways (handoff to human support)

Monitoring and Observability

Log:

- Inputs and outputs

- Tool invocations

- Guardrail trips

- Latency and performance

Observe:

- Drift in agent behaviour over time

- Sudden spikes in moderation triggers

- Unusual tool usage patterns

Fail-safes and Rollbacks

Agents should:

- Work on copies of data

- Support undo and rollback flows

- Have a kill switch for out-of-bounds behaviour

Rate Limiting and Access Control

Throttling and RBAC are operational guardrails too:

- Limit API calls per user or session

- Restrict tool use to authenticated roles

The Hard Part: Guardrail Tensions

Perfect guardrails are a myth. The hardest trade-offs include:

- False positives vs. false negatives (overblocking vs. underblocking)

- Latency vs. depth of protection (multi-pass checks slow agents down)

- Utility vs. caution (agents that refuse everything are useless)

- Governance: whose values are encoded?

Ongoing monitoring and continuous red-teaming are required. Guardrails are not set-and-forget.

Takeaways

Think in layers:

Input → System → Output

Start with constraints, not capabilities.

Guardrails must evolve as agents become more capable.

Operational guardrails matter as much as technical ones.

Perfect alignment is impossible. Aim for transparency, monitoring, and graceful failure.

Final Word

The agents we build today are shaping tomorrow’s software patterns. Guardrails are not barriers to innovation. They enable innovation by making agentic systems trustworthy and production-grade.

Build them well. Test them constantly. Learn from every failure.

What is your biggest guardrail challenge? Comment below or message me. I will share practical tips in a future post.

References

No spam, no sharing to third party. Only you and me.

Member discussion