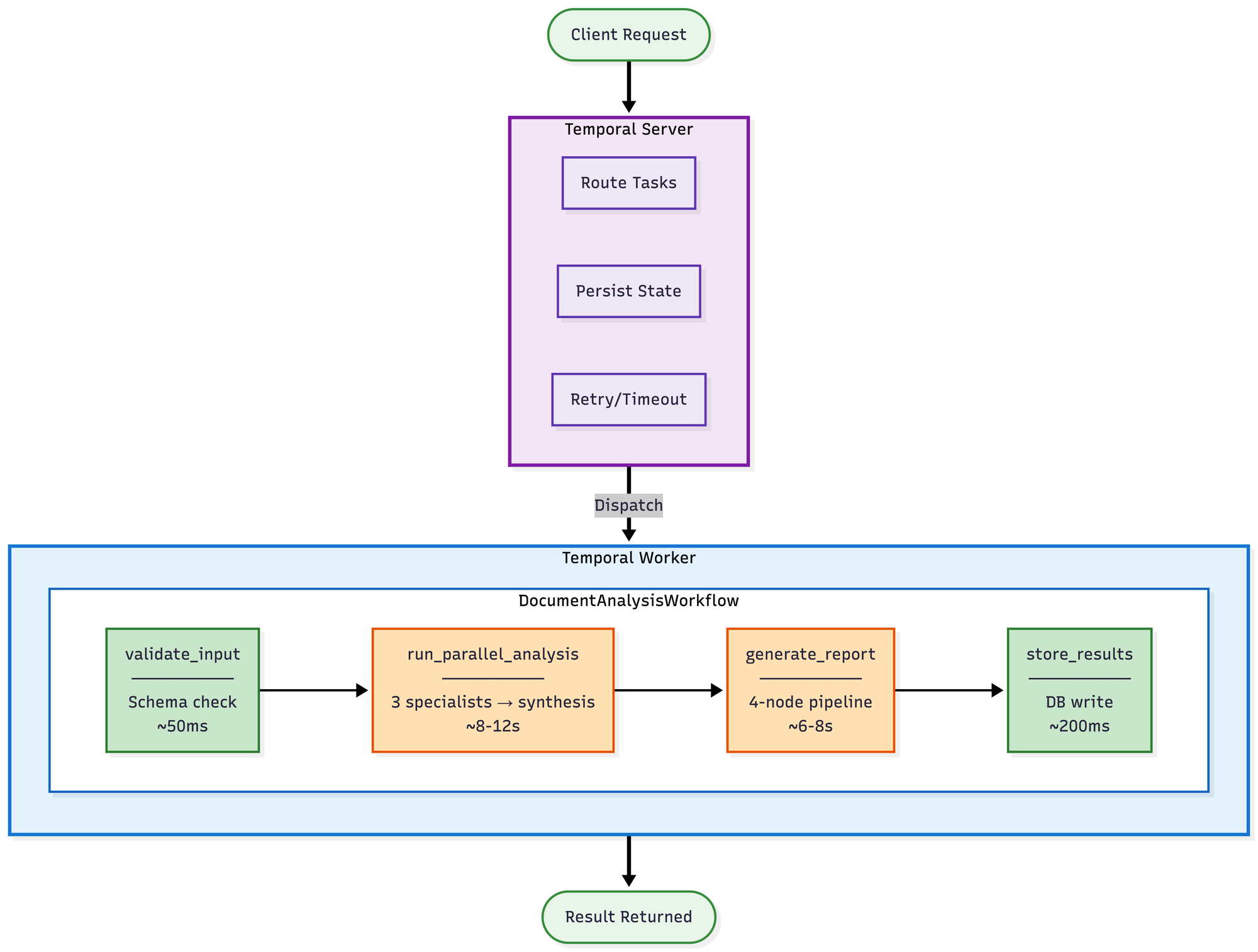

Temporal + LangGraph: A Two-Layer Architecture for Multi-Agent Coordination

Combining Temporal and LangGraph for production multi-agent systems gives you retries, state persistence, and failure handling.

Your multi-agent system works great in development. Then you deploy it. An LLM call times out halfway through. Your worker crashes. You restart and have no idea which agents already ran. The state is gone.

Most agent frameworks give you prompts, tool calling, and reasoning chains. They don't give you retries, state persistence, or visibility into production failures. You're supposed to figure that out yourself.

I've been running a multi-agent system in production that coordinates several specialist agents in parallel, synthesises their outputs, and needs to handle failures gracefully. After trying a few approaches, I landed on combining Temporal (workflow orchestration) with LangGraph (agent state machines). They solve different problems and fit together cleanly.

This post covers the patterns I found useful, using a document analysis system as the running example.

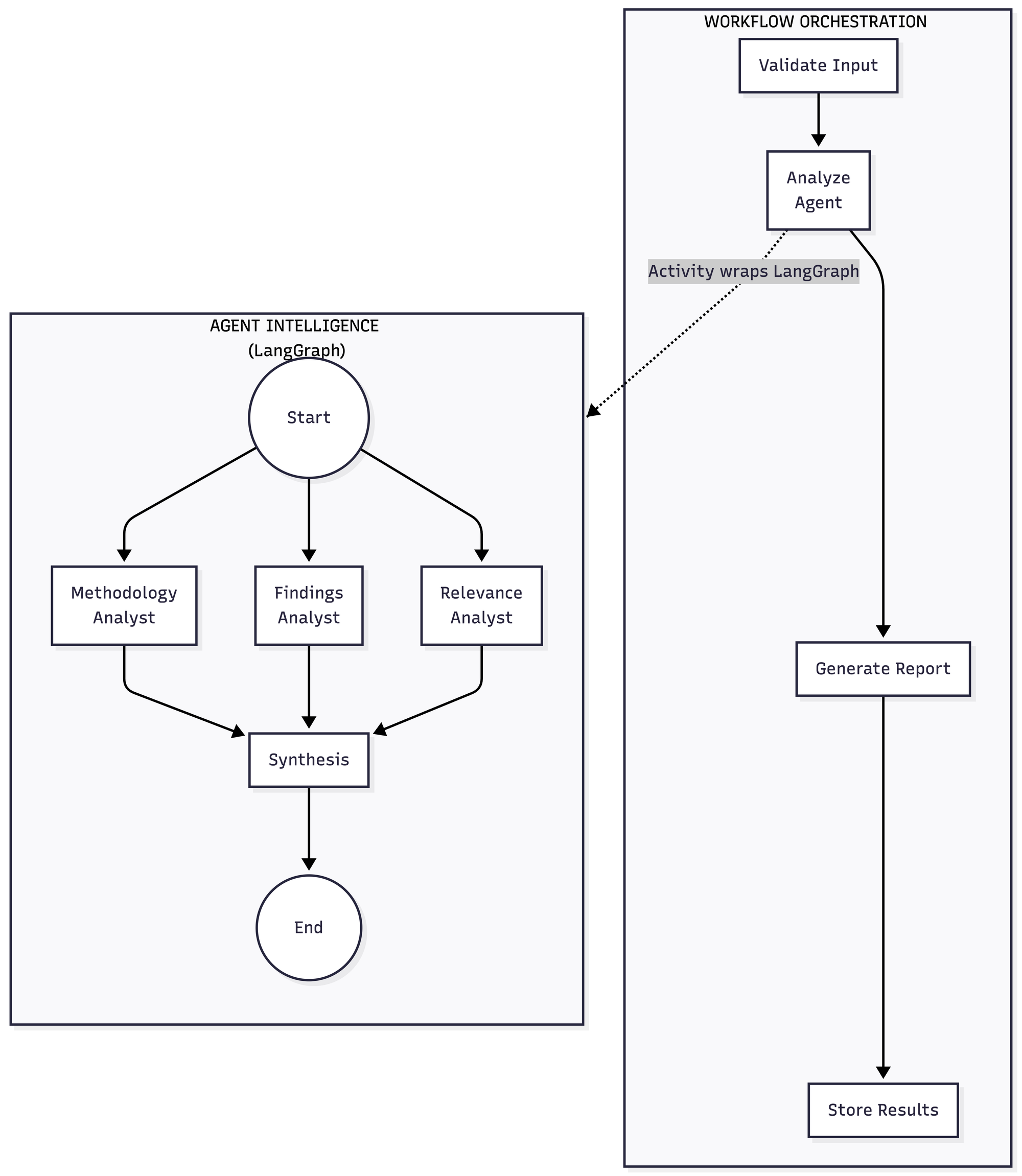

The Two-Layer Architecture

Keeping these layers separate turned out to be critical.

Temporal: The Orchestration Layer

Temporal is a workflow engine. You write workflows as code. Temporal handles persisting state between steps, retrying failed operations with backoff, enforcing timeouts, and letting you query what's happening. If your worker crashes mid-workflow, Temporal picks up where it left off.

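Think of Temporal workflows as deterministic replay machines. That framing helped me understand them. Your workflow code does run, but every result that comes from outside it (an activity, a timer) is recorded in an event history. After a crash, Temporal re-executes the workflow code against that history and hands back the recorded results instead of redoing the work. That's why they're good for orchestrating unreliable things like LLM calls.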
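A toy model of record-and-replay may make that framing concrete. This is a stdlib-only sketch, not how Temporal is implemented: `History`, `call_step`, `extract`, and `flaky_llm` are hypothetical names, the history is an in-memory list, and the simulated timeout stands in for a worker dying mid-run. The only point is that a step which already completed is answered from the recorded history on the second run instead of being executed again.

```python
from typing import Callable, List


class History:
    """Append-only list of step results plus a replay cursor (toy model)."""

    def __init__(self) -> None:
        self.results: List[str] = []
        self.cursor = 0

    def call_step(self, step: Callable[[], str]) -> str:
        # On replay, return the recorded result instead of re-running the step.
        if self.cursor < len(self.results):
            result = self.results[self.cursor]
        else:
            result = step()  # first execution: may raise
            self.results.append(result)
        self.cursor += 1
        return result


calls = {"extract": 0, "llm": 0}


def extract() -> str:
    calls["extract"] += 1
    return "extracted text"


def flaky_llm() -> str:
    calls["llm"] += 1
    if calls["llm"] == 1:
        raise TimeoutError("simulated LLM timeout")
    return "summary"


def workflow(history: History) -> str:
    history.cursor = 0  # every (re)run starts from the top of the code
    text = history.call_step(extract)
    summary = history.call_step(flaky_llm)
    return f"{text} -> {summary}"


history = History()
try:
    workflow(history)  # first run: extract succeeds, the LLM step fails
except TimeoutError:
    pass
final = workflow(history)  # second run: extract is replayed, the LLM step re-runs
```

After both runs, `extract` has executed once and `flaky_llm` twice, which is the behaviour you want when the expensive or side-effecting step is the one that already succeeded.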

LangGraph: The Agent Layer

LangGraph gives you state machines for agent logic. You define nodes (functions), edges (transitions), and a state schema. It handles running nodes in parallel when you want that. It's built around the idea that agents accumulate state as they work.

I use TypedDict for state schemas. You get autocomplete and it catches typos. More on that later.

Why Keep Them Separate?

Temporal answers "did this complete, and if not, what do we do about it?" LangGraph answers "given this state, what should the agent do next?" These are different questions.

I tried combining them early on. It got messy fast. Retry logic bled into agent logic. I couldn't tell where state lived. Separating them cleaned everything up.

But wait, doesn't LangGraph have its own durable execution now?

Yes. LangGraph 1.0 (released October 2025) includes built-in persistence and durable execution. So why use Temporal?

Temporal is battle-tested across thousands of production deployments for mission-critical workflows. It gives you superior observability through the Temporal Web UI, native support for workflows spanning days or weeks, and a proven track record handling infrastructure failures. LangGraph's durable execution is newer and purpose-built for AI agents, but Temporal's maturity matters when you need rock-solid reliability.

A Grid Dynamics case study validates this: they migrated from LangGraph-only to Temporal after finding that LangGraph's Redis-based state management created issues with lifecycle management and debugging. Temporal's event history made state persistence automatic and debugging straightforward.

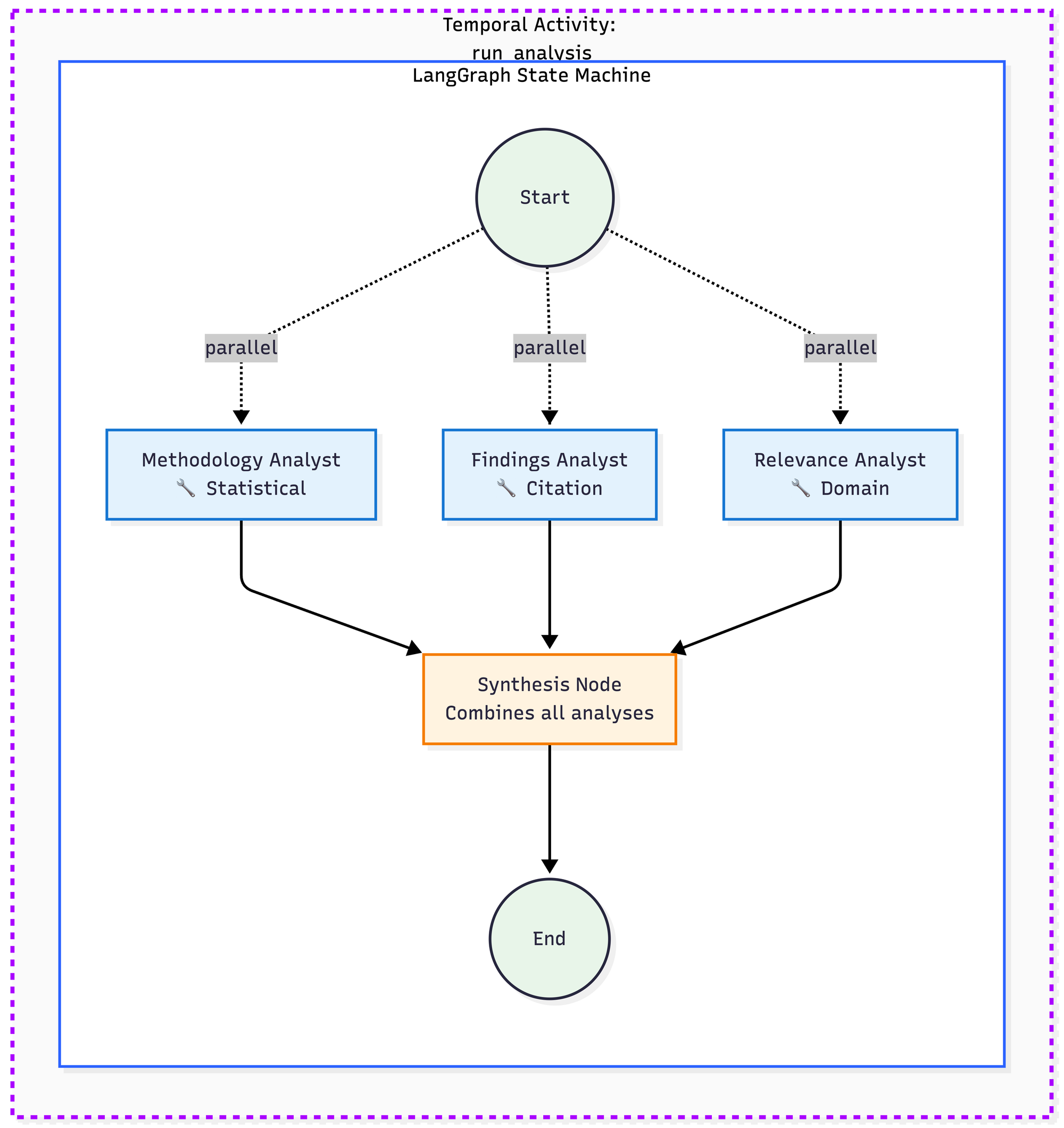

Pattern 1: Supervisor with Parallel Specialists

This is my most-used pattern. A supervisor kicks off several specialist agents in parallel, waits for all of them, then synthesises their outputs.

The specialists don't depend on each other. A methodology analyst doesn't need to know what the findings analyst found. They're examining different aspects of the same document. With three specialists that take about the same time, running them in parallel cuts that stage's latency by roughly 3x compared to sequential execution.
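The latency argument can be checked with stand-ins before any LLM is involved. The sketch below uses only `asyncio`; `fake_specialist` is a hypothetical placeholder whose sleep simulates an LLM round trip, and the 0.1 second delay is arbitrary.

```python
import asyncio
import time

SPECIALISTS = ("methodology", "findings", "relevance")


async def fake_specialist(name: str, seconds: float) -> str:
    # Hypothetical stand-in for an LLM-backed specialist; sleep simulates latency.
    await asyncio.sleep(seconds)
    return f"{name} done"


async def run_sequential(delay: float) -> float:
    start = time.perf_counter()
    for name in SPECIALISTS:
        await fake_specialist(name, delay)
    return time.perf_counter() - start


async def run_parallel(delay: float) -> float:
    start = time.perf_counter()
    # Fan out all specialists at once and wait for every one of them.
    await asyncio.gather(*(fake_specialist(name, delay) for name in SPECIALISTS))
    return time.perf_counter() - start


sequential = asyncio.run(run_sequential(0.1))
parallel = asyncio.run(run_parallel(0.1))
print(f"sequential={sequential:.2f}s parallel={parallel:.2f}s")
```

The sequential total is the sum of the three delays, while the parallel total is bounded by the slowest specialist. Real specialists rarely take identical time, so the speed-up in practice is set by the slowest one.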

The entire graph runs inside a single Temporal activity. If any specialist fails (LLM timeout, rate limit, whatever), I retry the whole analysis. I tried being clever about partial retries. It caused more problems than it solved. You'd end up with two analyses from run A and one from run B, and the synthesis would be inconsistent.

Each specialist gets its own tools. The methodology analyst has tools for statistical validation. The findings analyst can look up citations. Specialisation helps.

```python
from typing import Optional, TypedDict

from langgraph.graph import END, START, StateGraph
from langgraph.graph.state import CompiledStateGraph

# Assessment and the *_node functions are defined elsewhere in the project.


class AnalysisState(TypedDict):
    document_content: str
    methodology_analysis: Optional[str]
    findings_analysis: Optional[str]
    relevance_analysis: Optional[str]
    final_assessment: Optional[Assessment]  # Custom type for structured output


def create_analysis_graph() -> CompiledStateGraph:
    graph = StateGraph(AnalysisState)

    # Add specialist nodes
    graph.add_node("methodology_analyst", methodology_node)
    graph.add_node("findings_analyst", findings_node)
    graph.add_node("relevance_analyst", relevance_node)
    graph.add_node("synthesis", synthesis_node)

    # Start all specialists in parallel from START
    graph.add_edge(START, "methodology_analyst")
    graph.add_edge(START, "findings_analyst")
    graph.add_edge(START, "relevance_analyst")

    # Converge at synthesis
    graph.add_edge("methodology_analyst", "synthesis")
    graph.add_edge("findings_analyst", "synthesis")
    graph.add_edge("relevance_analyst", "synthesis")
    graph.add_edge("synthesis", END)

    # compile() returns a runnable CompiledStateGraph, not the builder
    return graph.compile()
```

Pattern 2: Sequential Pipelines (Deterministic + LLM Nodes)

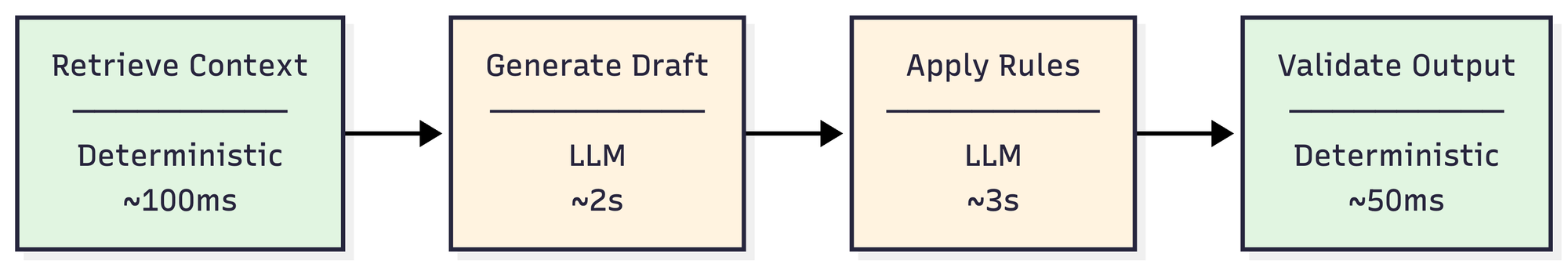

Not everything needs parallelism. Sometimes you have a pipeline where each step depends on the previous one. The key insight is that not every node needs to be an LLM call.

I mix deterministic nodes (database lookups, schema validation, rule application) with LLM nodes. The deterministic ones are fast, cheap, and predictable. They either work or throw a clear error. LLM outputs can be malformed, off-topic, or just weird.

Separating them makes debugging easier. When something breaks, you immediately know if it's the LLM being flaky or your retrieval logic having a bug.

```python
from langgraph.graph import END, StateGraph
from langgraph.graph.state import CompiledStateGraph

# ReportState and the *_node functions are defined elsewhere in the project.


def create_report_pipeline() -> CompiledStateGraph:
    graph = StateGraph(ReportState)

    graph.add_node("retrieve_context", retrieve_context_node)  # Deterministic
    graph.add_node("generate_draft", generate_draft_node)  # LLM
    graph.add_node("apply_rules", apply_rules_node)  # Deterministic
    graph.add_node("validate_output", validate_output_node)  # Deterministic

    graph.set_entry_point("retrieve_context")
    graph.add_edge("retrieve_context", "generate_draft")
    graph.add_edge("generate_draft", "apply_rules")
    graph.add_edge("apply_rules", "validate_output")
    graph.add_edge("validate_output", END)

    return graph.compile()
```

I've found 3-4 nodes is usually the right size. Fewer than that, and you don't get much from the graph structure. More, and the state management starts feeling unwieldy.
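To make the deterministic side concrete, here is a sketch of what a node like validate_output_node might look like. Everything specific in it is an assumption for illustration: the `draft` and `validated_report` fields, the `REQUIRED_KEYS` schema, and the choice of `ValueError`. The real ReportState is not shown in this post. The shape is what matters: no LLM call, a clear error on bad input, and a return value containing only the fields the node updates.

```python
import json
from typing import Optional, TypedDict


class ReportState(TypedDict):
    # Hypothetical fields; the real ReportState is not shown in this post.
    draft: str
    validated_report: Optional[dict]


REQUIRED_KEYS = ("title", "summary")  # assumed schema, for illustration only


def validate_output_node(state: ReportState) -> dict:
    """Deterministic check: parse the LLM draft as JSON and verify required keys."""
    try:
        report = json.loads(state["draft"])
    except json.JSONDecodeError as exc:
        raise ValueError(f"draft is not valid JSON: {exc}") from exc
    if not isinstance(report, dict):
        raise ValueError("draft must be a JSON object")
    missing = [key for key in REQUIRED_KEYS if key not in report]
    if missing:
        raise ValueError(f"draft is missing required keys: {missing}")
    return {"validated_report": report}


good = validate_output_node(
    {"draft": '{"title": "Q3", "summary": "ok"}', "validated_report": None}
)
```

When this node raises, the message points at the draft rather than at retrieval or rule logic, which is the debugging benefit of keeping the two kinds of node apart.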

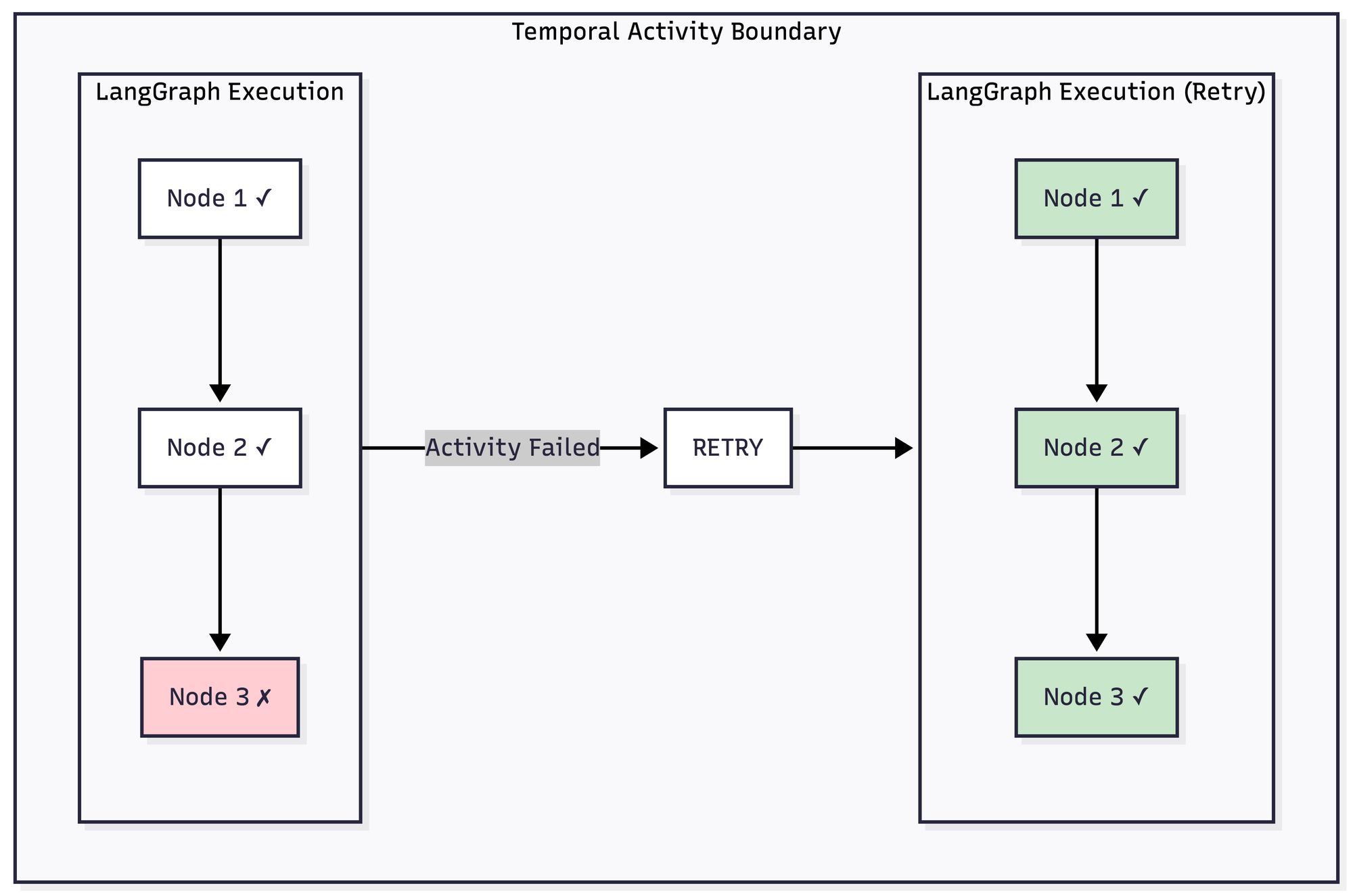

Pattern 3: Activities as Atomic Retry Units

This one took me a while to figure out. Where do you draw the retry boundary?

I wrap each LangGraph execution in a single Temporal activity. If any node fails, the whole thing retries from the beginning.

Seems wasteful, right? Why re-run Node 1 and Node 2 if they already worked?

Three reasons. First, the outputs are interdependent. The synthesis node needs all the analyst outputs from the same run to produce coherent results. Mixing outputs from different runs gives you inconsistent results.

Second, LLM calls aren't deterministic. If you retry from the middle, the LLM might generate something different that doesn't fit with what came before.

Third, it's simpler to reason about. One activity equals one complete analysis. It either works or it doesn't.

```python
from datetime import timedelta

from temporalio import activity, workflow
from temporalio.common import RetryPolicy

# Document, AnalysisResult, and create_analysis_graph are defined elsewhere.


@activity.defn(name="run_analysis")
async def run_analysis(document: Document) -> AnalysisResult:
    activity.heartbeat("Starting analysis")  # Signal we're alive

    graph = create_analysis_graph()
    initial_state = {
        "document_content": document.content,
        "methodology_analysis": None,
        "findings_analysis": None,
        "relevance_analysis": None,
        "final_assessment": None,
    }

    final_state = await graph.ainvoke(initial_state)
    return final_state["final_assessment"]


# In the workflow (this call lives inside the @workflow.run method)
result = await workflow.execute_activity(
    run_analysis,
    document,
    start_to_close_timeout=timedelta(seconds=120),
    heartbeat_timeout=timedelta(seconds=30),  # Detect stuck activities faster
    retry_policy=RetryPolicy(
        maximum_attempts=3,
        initial_interval=timedelta(seconds=1),
        backoff_coefficient=2.0,
        non_retryable_error_types=["ValidationError"],
    ),
)
```

The 120-second timeout might seem long, but a multi-agent analysis with several LLM calls takes time. I use exponential backoff because rate limits are the most common failure mode, and hammering the API doesn't help.
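It helps to work out what that policy means in wall-clock terms. The sketch below is plain arithmetic under stated assumptions: the wait before retry i is `initial_interval * backoff_coefficient**i`, no maximum interval cap is reached, and in the worst case every attempt runs into the full 120-second start-to-close timeout. `retry_waits` is a hypothetical helper, not part of the Temporal SDK.

```python
from datetime import timedelta
from typing import List


def retry_waits(
    initial_interval: timedelta,
    backoff_coefficient: float,
    maximum_attempts: int,
) -> List[timedelta]:
    """Wait before each retry, ignoring any maximum-interval cap."""
    retries = maximum_attempts - 1  # the first attempt is not a retry
    return [initial_interval * (backoff_coefficient ** i) for i in range(retries)]


waits = retry_waits(timedelta(seconds=1), 2.0, 3)

# Worst case: all three attempts hit the 120 s timeout, plus the waits in between.
worst_case = timedelta(seconds=120) * 3 + sum(waits, timedelta())
```

With three attempts there are only two waits, of 1 and 2 seconds, so the backoff itself is negligible here. The per-attempt timeout dominates: a document that fails every time can hold the workflow for about six minutes before the activity is reported as failed.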

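The heartbeat_timeout is important for long-running activities. If the activity doesn't call activity.heartbeat() within 30 seconds of the previous heartbeat, Temporal treats the attempt as failed and retries it according to the retry policy, possibly on another worker. This catches cases where your worker hangs but doesn't crash. It also means one heartbeat at the start isn't enough for a run that takes longer than 30 seconds: the activity has to keep heartbeating while the graph runs.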
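One way to keep heartbeats flowing while a long graph run is in progress is a small background task. The helper below is a stdlib-only sketch: `run_with_heartbeat` and `slow_analysis` are hypothetical names, and `beat` is any zero-argument callable. Inside an async Temporal activity you would presumably pass `activity.heartbeat` as `beat` and `graph.ainvoke(initial_state)` as `work`; that wiring is an assumption I have not shown running here.

```python
import asyncio
import contextlib
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def run_with_heartbeat(
    work: Awaitable[T],
    beat: Callable[[], None],
    interval: float,
) -> T:
    """Await `work` while calling `beat()` every `interval` seconds."""

    async def pulse() -> None:
        while True:
            beat()
            await asyncio.sleep(interval)

    pulse_task = asyncio.create_task(pulse())
    try:
        return await work
    finally:
        # Stop heartbeating once the work finishes or fails.
        pulse_task.cancel()
        with contextlib.suppress(asyncio.CancelledError):
            await pulse_task


beats: List[str] = []


async def slow_analysis() -> str:
    await asyncio.sleep(0.25)  # stand-in for a long graph run
    return "assessment"


result = asyncio.run(
    run_with_heartbeat(slow_analysis(), lambda: beats.append("beat"), interval=0.1)
)
```

Pick an interval comfortably below the heartbeat timeout, for example 10 seconds against a 30-second timeout. If the event loop itself is blocked, the pulse stops too and the timeout fires. If the work merely awaits forever, the pulse keeps going, and the start-to-close timeout is what ends the attempt.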

A note on idempotency: Temporal emphasises designing idempotent activities since they may run multiple times. With LLM calls, idempotency isn't about getting identical outputs (that's impossible). It's about not causing duplicate side effects. If your agent sends emails or writes to databases, make sure those operations use idempotency keys or check-before-write patterns.
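A check-before-write guard can be sketched in a few lines. The names here (`NotificationStore`, `send_once`, `make_key`, and the example IDs) are all hypothetical, and the dict stands in for a database table. In a real store you would want the check and the write to be one atomic operation, for example a unique constraint on the key column, because two workers could otherwise both pass the check.

```python
import hashlib
from typing import Dict


class NotificationStore:
    """In-memory stand-in for a table with a unique idempotency-key column."""

    def __init__(self) -> None:
        self.sent: Dict[str, str] = {}

    def send_once(self, idempotency_key: str, message: str) -> bool:
        # Check-before-write: skip the side effect if this key was already handled.
        if idempotency_key in self.sent:
            return False
        self.sent[idempotency_key] = message
        return True


def make_key(workflow_id: str, document_id: str, step: str) -> str:
    # Built only from stable inputs, never from LLM output or the attempt number.
    raw = f"{workflow_id}:{document_id}:{step}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


store = NotificationStore()
key = make_key("wf-123", "doc-42", "notify_reviewer")
first = store.send_once(key, "Analysis ready (attempt 1 wording)")
second = store.send_once(key, "Analysis ready (attempt 2 wording)")
```

The second call is a no-op even though the retried LLM produced different wording, because the key depends on what the operation is for and not on what the model said.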

Pattern 4: State Management with TypedDict

LangGraph state is typically defined as a TypedDict (Pydantic models and dataclasses also work). I mark fields as Optional when they don't exist yet (before the node that produces them has run).

```python
class AnalysisState(TypedDict):
    # Always present from the start
    document_id: str
    document_content: str

    # Populated by analyst nodes
    methodology_analysis: Optional[str]
    findings_analysis: Optional[str]
    relevance_analysis: Optional[str]

    # Populated by synthesis
    final_assessment: Optional[Assessment]
```

Why TypedDict instead of Pydantic? Pydantic validates on initialisation (and on every assignment if you turn on validate_assignment). For state that's frequently updated and passed between nodes, that's overhead you don't need. Validate at the boundaries (input and output), not in between.

Each node returns just the fields it's updating:

```python
async def methodology_node(state: AnalysisState) -> dict:
    analysis = await analyze_methodology(state["document_content"])
    return {"methodology_analysis": analysis}
```

LangGraph merges that back into the full state. You don't need to copy the whole dict and modify it.
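As a mental model, the merge behaves like a dict update in which returned keys overwrite and everything else is kept. The sketch below is a simplification, not LangGraph's code: `apply_update` is a hypothetical helper, the analysis strings are made up, and it ignores per-key reducers (declared with `Annotated`), which let a key accumulate values instead of being overwritten.

```python
from typing import Any, Dict


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Toy model of the default merge: returned keys overwrite, others are kept."""
    return {**state, **update}


state: Dict[str, Any] = {
    "document_content": "full text...",
    "methodology_analysis": None,
    "findings_analysis": None,
}

# Each node returns only the field it owns.
state = apply_update(state, {"methodology_analysis": "sound sampling"})
state = apply_update(state, {"findings_analysis": "claims supported"})
```

Because each specialist writes to its own key, the parallel branches in Pattern 1 never compete for the same field.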

How It All Fits Together

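Here's the complete flow: a Temporal workflow schedules the run_analysis activity, the activity builds the LangGraph graph and runs it to completion, and the final assessment comes back to the workflow as the activity result. If the activity fails or times out, Temporal retries it according to the retry policy.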

The worker registers workflows and activities with Temporal. You can run multiple workers for horizontal scaling. Temporal handles distributing work across them.

When Not to Use This

This architecture adds complexity. Don't use it if:

- You have a single agent doing a straightforward task. Just call the LLM directly. Add retries if you need them. Don't build a workflow engine for a single API call.

- Your agents need to make decisions based on previous agent outputs in real-time. This setup assumes you can define the coordination pattern upfront. If you need dynamic routing based on what agents discover, you want a different architecture (possibly hierarchical agents or a message bus).

- You're prototyping and iterating fast. Get your agent logic working first. Add Temporal when you actually need the reliability guarantees.

- Your team doesn't want to run Temporal. It's another service to maintain. If that's a non-starter, there are simpler options (durable task frameworks, even just good retry libraries).

TL;DR Version

- Keep orchestration and agent logic separate. Temporal handles "did it complete and what do we do if not." LangGraph handles "what should the agent do next."

- Run each LangGraph graph inside a Temporal activity. Partial results from failed runs cause more problems than re-running successful nodes.

- Run specialists in parallel when they're independent. Converge at a synthesis node.

- Mix deterministic and LLM nodes. Not everything needs to be an LLM call. Deterministic nodes are faster, cheaper, and easier to debug.

- Use TypedDict with Optional fields. Explicit state schema. Validate at boundaries.

- Add heartbeats for long-running activities. Helps Temporal detect stuck workers.

This setup has worked well for me in production. Your mileage will vary depending on what you're building, but hopefully some of these patterns save you the trial and error I went through.
