Why Your AI Agents Keep Breaking (And How Systems Thinking Can Fix Them)

If you've built AI agents that work perfectly in testing but fall apart in production, you're not alone. Most of us approach AI development the same way we build traditional software, piece by piece, component by component. But here's the thing: AI agents aren't just fancy programs. They're complex adaptive systems that learn, evolve, and interact in ways we can't always predict.

I've seen too many projects fail because teams focused on perfecting individual components instead of understanding how everything fits together. The solution isn't better algorithms or more data. It's thinking about your AI agent as part of a larger ecosystem.

The Problem with Linear Thinking

Most AI development follows a predictable path: collect data, train model, deploy, hope for the best. This works great for static systems, but AI agents are different beasts entirely. They:

- Learn and adapt continuously

- Interact with users, other systems, and changing environments

- Generate emergent behaviors you never programmed

- Create feedback loops that can amplify both successes and failures

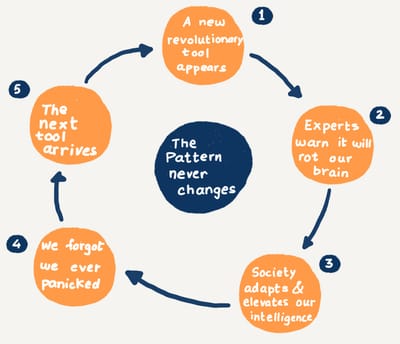

Think about it from first principles. Traditional software does what you tell it to do. AI agents make decisions based on patterns they've learned, and those decisions influence future inputs, which influence future decisions. It's a dynamic system, not a static one.

What Systems Thinking Actually Means for AI

Systems thinking isn't just academic theory it's a practical way to understand how your AI agent really works in the wild. Instead of asking "How do I make this component better?", you ask "How does this component interact with everything else?"

Here are the key shifts in how you think about your AI systems:

Focus on Relationships, Not Just Components

Your agent's memory module doesn't exist in isolation it feeds the planning module, which influences the action layer, which generates feedback for the learning system. A "bug" in one area might actually be a symptom of poor interaction between components.

Embrace Feedback Loops

Every AI agent creates multiple feedback loops. User interactions influence training data. Model outputs affect user behavior. Performance metrics drive algorithmic changes. Map these loops explicitly they're features, not bugs.

Plan for Emergence

Your AI will do things you didn't program it to do. This isn't a failure it's emergence. The question is whether you're designing systems that can handle both positive and negative emergent behaviors.

Practical Tools That Actually Work

Let me share some concrete approaches that have saved projects from disaster:

Causal Loop Diagrams

Before you write a single line of code, map out how different variables in your system influence each other. For example:

Positive reinforcing loop: Better agent performance → Higher user trust → More usage → More feedback data → Better agent performance

Negative balancing loop: More autonomy → Higher risk of errors → More human intervention → Less autonomy

Draw these relationships with arrows and polarities. You'll spot potential problems before they happen.

The RICE Framework

Every AI agent should be designed with four principles in mind:

- Robustness: Can it handle unexpected inputs and adversarial attacks?

- Interpretability: Can humans understand why it made specific decisions?

- Controllability: Can humans intervene when things go wrong?

- Ethicality: Does it align with human values and avoid harmful biases?

These aren't separate checklists they're interconnected. Poor interpretability makes controllability harder. Lack of robustness can lead to ethical problems.

Modular Design with Clear Boundaries

Build your agent as a system of specialized modules that can be tested, debugged, and replaced independently. Each module should have:

- A single, clear responsibility

- Well-defined inputs and outputs

- Explicit interfaces with other modules

- Independent monitoring and logging

This isn't just good software engineering it's essential for understanding emergent system behavior.

Learning from Real Failures

Some of the biggest AI failures happened because teams ignored systems thinking:

Microsoft Tay became offensive because it lacked proper boundaries between its learning system and its output system. The feedback loop between user input and model updates had no safeguards.

IBM Watson for Oncology failed because it was trained on synthetic data without proper integration into real medical workflows. The system worked in isolation but broke down when it encountered the complexity of actual healthcare systems.

Tesla Autopilot incidents often stem from misaligned expectations between the AI system's capabilities and human understanding a classic human-AI interaction design problem.

Each of these failures could have been prevented by thinking about the AI as part of a larger system rather than an isolated component.

Building Better AI Agents

Here's how to apply systems thinking to your next AI project:

Start with the Ecosystem

Before designing your agent, map out everything it will interact with: users, other systems, data sources, external APIs. Your agent's success depends on how well it fits into this ecosystem.

Design for Continuous Learning

Build feedback mechanisms into every component. User ratings, automated evaluations, performance metrics, error logs all of these should feed back into your system's learning process.

Plan for Failure Modes

Use techniques like Failure Mode and Effects Analysis (FMEA) to identify how your system might break. Focus especially on:

- Bias propagation through feedback loops

- Coordination failures in multi-agent systems

- Security vulnerabilities that exploit system interactions

- Emergent behaviors that violate ethical constraints

Monitor Everything

Implement comprehensive logging and monitoring that captures not just individual component performance, but system-level behaviors. Look for patterns that emerge from component interactions.

The Future Is Systemic

The AI agents that will succeed in the real world won't be the ones with the best individual components they'll be the ones designed as coherent systems that can adapt, learn, and evolve responsibly.

This means we need to start thinking like systems engineers, not just machine learning engineers. We need to understand feedback loops, plan for emergence, and design for the messy complexity of real-world deployment.

The tools and frameworks exist. The question is whether we're willing to shift our thinking from optimizing parts to optimizing wholes.

Your AI agents are already complex adaptive systems. The question is whether you're designing them that way.

What's your experience with emergent behaviors in AI systems? Have you seen examples where systems thinking could have prevented failures? I'd love to hear your stories and discuss practical approaches in the comments.

No spam, no sharing to third party. Only you and me.

Member discussion